What Is Apache Spark? Why, When, and How to Use Apache Spark

Parsapogu Vinay

Data Engineer | Python | SQL | AWS | ETL | Spark | Pyspark | Kafka | Airflow

Apache Spark: A Game Changer for Big Data Processing

In today's data-driven world, efficiently processing large volumes of data is crucial for businesses. Apache Spark has emerged as one of the most powerful big data processing frameworks, offering speed, scalability, and ease of use. But what exactly is Apache Spark? When and why should you use it? And how can you build an ETL (Extract, Transform, Load) pipeline with it? Let's explore.

What is Apache Spark?

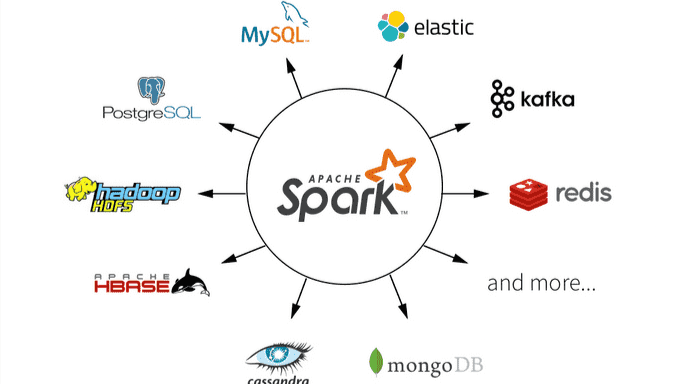

Apache Spark is an open-source, distributed computing engine for large-scale data processing. It can keep intermediate results in memory instead of writing them to disk between processing steps, which makes it significantly faster than disk-based frameworks such as Hadoop MapReduce, especially for iterative and interactive workloads. Spark provides APIs in Python (PySpark), Java, Scala, and R, making it highly versatile.

Why Use Apache Spark?

When to Use Apache Spark?

How to Use Apache Spark?

How to Build an ETL Pipeline Using Apache Spark?

Step 1: Extract Data

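from pyspark.sql import SparkSession

# Create (or reuse) a SparkSession, the entry point to the DataFrame API.
spark = SparkSession.builder.appName("ETL Pipeline").getOrCreate()

# Read the CSV, using the first row as column names and letting Spark infer column types.
# Reading from S3 requires S3 access to be configured for the cluster.
df = spark.read.csv("s3://my-bucket/data.csv", header=True, inferSchema=True)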

Step 2: Transform Data

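from pyspark.sql import functions as F

# Drop rows that contain any null value, then derive new_col by doubling existing_col.
df = df.dropna().withColumn("new_col", F.col("existing_col") * 2)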

Step 3: Load Data

# Write the result as Parquet, replacing any existing output at that path.
df.write.mode("overwrite").parquet("s3://my-bucket/transformed-data")

Conclusion

Apache Spark is a powerful tool for big data processing, offering speed, scalability, and flexibility. Whether you are building ETL pipelines, performing real-time analytics, or training machine learning models, Spark can help streamline your workflow. If you're looking to enter the world of big data, mastering Spark is a great step forward!