Video-Panda: Parameter-efficient Alignment for Encoder-free Video-Language Models

Today's paper introduces Video-Panda, a new encoder-free approach for video-language understanding. The method achieves competitive performance while using significantly fewer parameters than traditional approaches that rely on heavyweight image or video encoders. Through a specialized spatio-temporal alignment block, Video-Panda processes videos directly without pre-trained encoders, reducing computational overhead while maintaining strong performance.

Method Overview

Video-Panda introduces a Spatio-Temporal Alignment Block (STAB) that directly processes video inputs without requiring pre-trained encoders. The method first divides video frames into patches and processes them through local spatio-temporal encoding to capture fine-grained features within small windows.

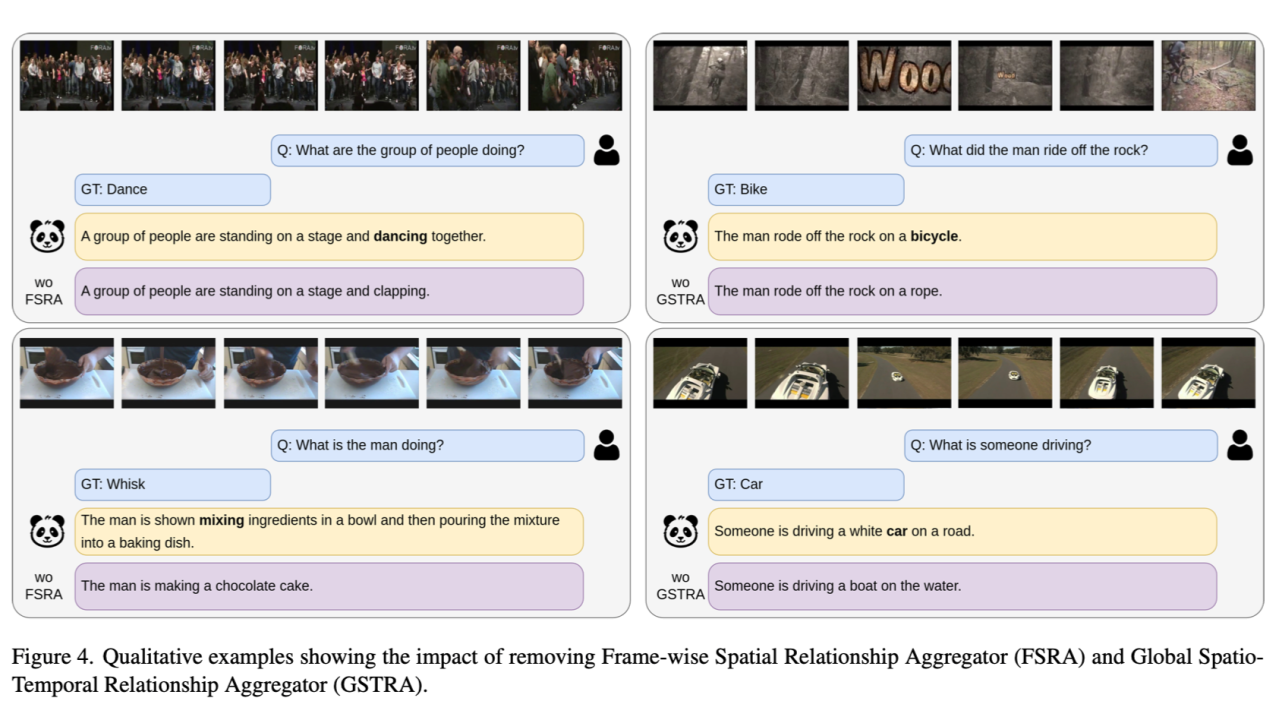

The architecture separates the processing of spatial and temporal information through two main components. The Frame-wise Spatial Relationship Aggregator (FSRA) handles spatial relationships within each frame, while the Global Spatio-Temporal Relationship Aggregator (GSTRA) captures relationships across the entire video. This dual approach allows the model to understand both detailed frame-specific content and broader video context.

To maintain efficiency, the method incorporates a Local Spatial Downsampling mechanism that reduces spatial dimensions while preserving important information. The final step combines the processed information and aligns it with the language model's embedding space, enabling effective video-language understanding.

The training process follows three stages: initial alignment to establish basic video understanding, visual-language integration to develop joint comprehension capabilities, and instruction tuning to enhance response generation for video-based queries.

领英推荐

Results

Video-Panda achieves competitive performance while using only 45M parameters for visual processing - a 6.5× reduction compared to Video-ChatGPT (307M) and 9× reduction compared to Video-LLaVA (425M). The method processes videos 3-4× faster than encoder-based approaches while maintaining strong performance on video question answering benchmarks. On the MSVD-QA dataset, it achieves 64.7% accuracy, comparable to state-of-the-art methods that use significantly more parameters.

Conclusion

The paper demonstrates that efficient video-language understanding is possible without relying on heavyweight encoders. Through careful architectural design and specialized spatio-temporal processing, Video-Panda achieves competitive performance while significantly reducing computational requirements. For more information please consult the full paper.

Congrats to the authors for their work!

Yi, Jinhui, et al. "Video-Panda: Parameter-efficient Alignment for Encoder-free Video-Language Models." arXiv preprint arXiv:2412.18609 (2023).