Unlocking the Power of pgVector: Distance Functions and Indexing Explained

Zahir Shaikh

Lead (Generative AI / Automation) @ T-Systems | Specializing in Automation, Large Language Models (LLM), LLAMA Index, Langchain | Expert in Deep Learning, Machine Learning, NLP, Vector Databases | RPA

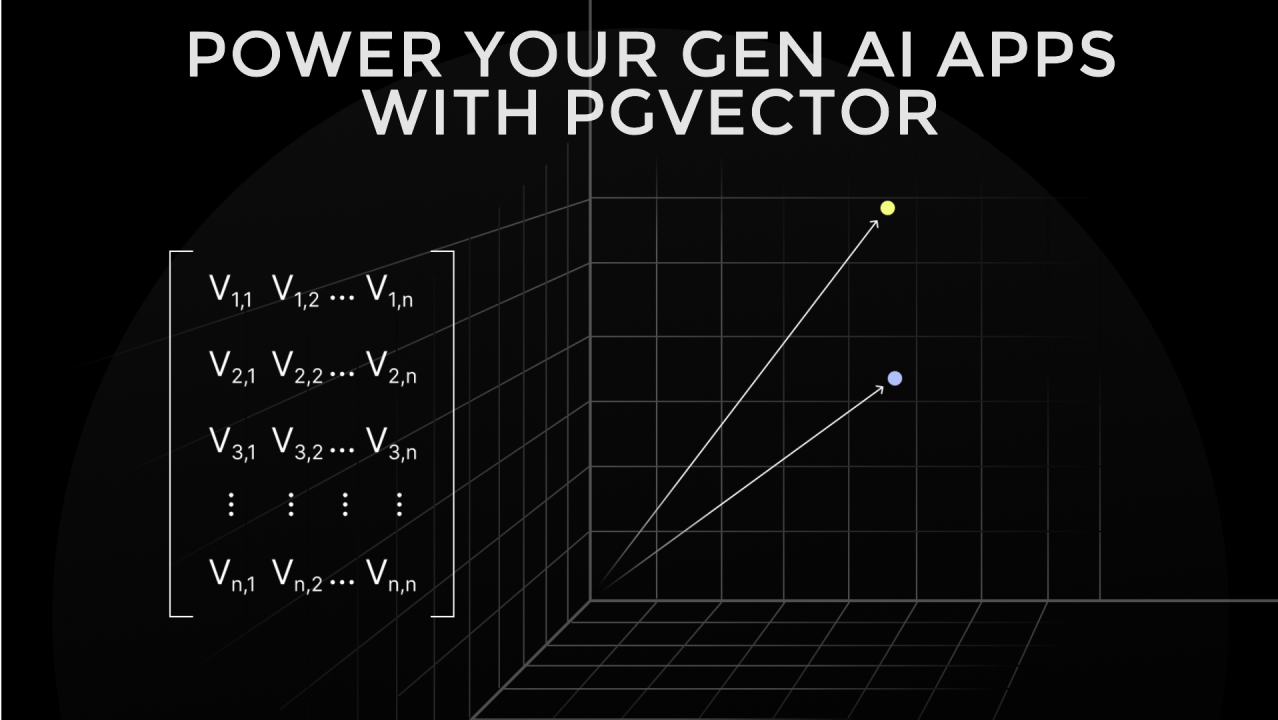

PostgreSQL is a powerhouse for relational data, but with the rise of machine learning and AI, managing and querying vector embeddings has become increasingly important. Enter pgVector, a PostgreSQL extension that adds native support for vectors and enables efficient similarity searches. In this article, we’ll explore the various distance functions provided by pgVector and how indexing can significantly boost query performance.

What is pgVector?

pgVector extends PostgreSQL by introducing a new data type—vector—for storing n-dimensional vectors. It also includes support for similarity searches using various distance metrics, making it a natural choice for applications in recommendation systems, natural language processing, and computer vision.
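The queries in this article assume an `items` table with an `embedding` column. A minimal setup might look like the sketch below. The three-dimensional column and the sample rows are illustrative assumptions; real embeddings usually have hundreds or thousands of dimensions. Note that the extension is installed under the name `vector`.

```sql
-- The extension is named "vector", even though the project is called pgvector.
CREATE EXTENSION IF NOT EXISTS vector;

-- Illustrative table: vector(3) fixes the dimension at 3 for every row.
CREATE TABLE items (
    id        bigserial PRIMARY KEY,
    embedding vector(3)
);

-- Vectors are written as bracketed, comma-separated literals.
INSERT INTO items (embedding) VALUES
    ('[1,2,3]'),
    ('[4,5,6]'),
    ('[1,0,1]');
```

Because the column is declared as `vector(3)`, inserting a vector with a different number of dimensions is rejected with an error.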

Distance Functions in pgVector

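pgVector supports several distance metrics to measure similarity or dissimilarity between vectors. Each one is exposed as an operator that returns a distance. Smaller values always mean "more similar", so every query below sorts in ascending order. Here's an overview of the available operators: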

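1. L2 Distance (<->)

The Euclidean (straight-line) distance: the square root of the sum of squared differences between corresponding elements.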

Example Query:

SELECT id, embedding, embedding <-> '[1, 2, 3]' AS l2_distance
FROM items
ORDER BY l2_distance
LIMIT 5;
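To see what `<->` actually computes, you can run it on two literal vectors whose distance is easy to check by hand. The values are made up for illustration. The `sqrt(...)` column simply spells out the Euclidean formula for the same pair.

```sql
CREATE EXTENSION IF NOT EXISTS vector;

-- Element-wise differences are (3, 4, 0), so the L2 distance is sqrt(9 + 16 + 0) = 5.
SELECT '[1,2,3]'::vector <-> '[4,6,3]'::vector AS l2_distance,  -- 5
       sqrt(3^2 + 4^2 + 0^2)                   AS by_hand;      -- 5
```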

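2. Negative Inner Product (<#>)

The inner (dot) product multiplied by -1. pgVector returns the negative value because PostgreSQL index scans on operators only support ascending order. Negating the value makes the largest inner products, which are the most similar vectors, sort first.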

Example Query:

SELECT id, embedding, embedding <#> '[1, 2, 3]' AS negative_inner_product
FROM items
ORDER BY negative_inner_product LIMIT 5;
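A quick check with illustrative literal vectors shows the sign convention. It also shows how to recover the ordinary inner product by multiplying the result by -1.

```sql
CREATE EXTENSION IF NOT EXISTS vector;

-- Inner product of (1,2,3) and (4,5,6) is 4 + 10 + 18 = 32; <#> returns its negation.
SELECT '[1,2,3]'::vector <#> '[4,5,6]'::vector        AS negative_inner_product,  -- -32
       ('[1,2,3]'::vector <#> '[4,5,6]'::vector) * -1 AS inner_product;           -- 32
```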

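3. Cosine Distance (<=>)

One minus the cosine similarity. It depends only on the angle between the vectors, not on their length. It ranges from 0 (same direction) to 2 (opposite directions).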

Example Query:

SELECT id, embedding, embedding <=> '[1, 2, 3]' AS cosine_distance
FROM items
ORDER BY cosine_distance LIMIT 5;
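Three illustrative pairs of literal vectors make the 0 to 2 range concrete. The first pair points the same way with different lengths, the second is at a right angle, and the third points in opposite directions.

```sql
CREATE EXTENSION IF NOT EXISTS vector;

-- Cosine distance = 1 - cosine similarity, so vector length does not matter.
SELECT '[1,2,3]'::vector <=> '[2,4,6]'::vector AS same_direction,  -- 0 (parallel, different length)
       '[1,0]'::vector   <=> '[0,1]'::vector   AS orthogonal,      -- 1
       '[1,0]'::vector   <=> '[-1,0]'::vector  AS opposite;        -- 2
```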

4. L1 Distance (<+>) (Introduced in pgVector 0.7.0)

The Manhattan (taxicab) distance: the sum of absolute differences between corresponding elements.

Example Query:

SELECT id, embedding, embedding <+> '[1, 2, 3]' AS l1_distance
FROM items
ORDER BY l1_distance LIMIT 5;
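Comparing L1 and L2 on the same pair of illustrative vectors shows the difference. L1 adds up the absolute differences, while L2 takes the square root of the sum of their squares. The sketch assumes pgVector 0.7.0 or later, since `<+>` does not exist in earlier versions.

```sql
CREATE EXTENSION IF NOT EXISTS vector;  -- <+> requires pgVector 0.7.0 or later

-- Absolute differences are (3, 4, 0): L1 sums them (7), L2 takes sqrt(9 + 16 + 0) (5).
SELECT a <+> b AS l1_distance,  -- 7
       a <-> b AS l2_distance   -- 5
FROM (SELECT '[1,2,3]'::vector AS a, '[4,6,3]'::vector AS b) AS pair;
```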

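5. Hamming Distance (<~>) (Introduced in pgVector 0.7.0)

The number of bit positions at which two binary vectors differ. It operates on the bit type rather than vector, so in this example embedding is assumed to be a bit(3) column and the query value is written as a bit string.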

Example Query:

SELECT id, embedding, embedding <~> '101' AS hamming_distance
FROM items
ORDER BY hamming_distance LIMIT 5;
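The sketch below shows Hamming distance on bit-string literals and then on a small table of binary embeddings. The table `bit_items` and its rows are hypothetical. The key point is that the column uses the `bit` type rather than `vector`. pgVector 0.7.0 or later is assumed.

```sql
CREATE EXTENSION IF NOT EXISTS vector;  -- <~> requires pgVector 0.7.0 or later

-- Hamming distance counts the bit positions that differ.
SELECT '101'::bit(3) <~> '111'::bit(3) AS one_bit_differs,  -- 1
       '101'::bit(3) <~> '010'::bit(3) AS all_bits_differ;  -- 3

-- Hypothetical table for binary embeddings: the column must use the bit type.
CREATE TABLE bit_items (
    id        bigserial PRIMARY KEY,
    embedding bit(3)
);
INSERT INTO bit_items (embedding) VALUES ('000'), ('101'), ('111');

-- Nearest binary vectors to '101' by Hamming distance.
SELECT id, embedding <~> '101' AS hamming_distance
FROM bit_items
ORDER BY hamming_distance
LIMIT 5;
```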

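6. Jaccard Distance (<%>) (Introduced in pgVector 0.7.0)

One minus the ratio of bits set in both vectors to bits set in either vector. Like Hamming distance, it operates on the bit type, so embedding is again assumed to be a bit column.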

Example Query:

SELECT id, embedding, embedding <%> '101' AS jaccard_distance
FROM items
ORDER BY jaccard_distance LIMIT 5;
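The Jaccard formula is easiest to follow with illustrative 4-bit strings. The cases below cover partial overlap, identical sets, and disjoint sets. pgVector 0.7.0 or later is assumed.

```sql
CREATE EXTENSION IF NOT EXISTS vector;  -- <%> requires pgVector 0.7.0 or later

-- Jaccard distance = 1 - (bits set in both) / (bits set in either).
SELECT '1100'::bit(4) <%> '1111'::bit(4) AS half_overlap,  -- 1 - 2/4 = 0.5
       '1100'::bit(4) <%> '1100'::bit(4) AS identical,     -- 0
       '1100'::bit(4) <%> '0011'::bit(4) AS disjoint;      -- 1
```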

Boosting Query Performance with Indexing

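When working with large datasets, indexing is critical for speeding up similarity searches. Without an index, pgVector performs exact nearest-neighbor search by scanning every row. This gives perfect recall but slows down as the table grows. An index switches to approximate nearest-neighbor search, which trades some recall for speed, so query results can change slightly once an index is added. pgVector supports the following types of indexes: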

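1. HNSW Index (Hierarchical Navigable Small World)

Builds a multilayer graph of the vectors. It offers a better speed/recall trade-off than IVFFlat but takes longer to build and uses more memory. Because there is no training step, it can be created on an empty table.

Example:

CREATE INDEX hnsw_index ON items USING hnsw (embedding vector_l2_ops);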
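An index only helps queries whose operator matches its operator class. The mapping is `vector_l2_ops` for `<->`, `vector_ip_ops` for `<#>` and `vector_cosine_ops` for `<=>`. The 0.7.0 operators use `vector_l1_ops`, `bit_hamming_ops` and `bit_jaccard_ops`. The sketch below builds a cosine-distance HNSW index on the article's `items` table. The index name is hypothetical, `m` and `ef_construction` are set to pgVector's defaults (16 and 64), and the `ef_search` value of 100 is an arbitrary illustration.

```sql
CREATE EXTENSION IF NOT EXISTS vector;
-- Assumed table from the article's examples.
CREATE TABLE IF NOT EXISTS items (id bigserial PRIMARY KEY, embedding vector(3));

-- vector_cosine_ops pairs with the <=> operator used in the query below.
CREATE INDEX items_embedding_hnsw_cosine
    ON items USING hnsw (embedding vector_cosine_ops)
    WITH (m = 16, ef_construction = 64);  -- links per node, build-time candidate list size

-- Query-time candidate list size (default 40): higher = better recall, slower queries.
SET hnsw.ef_search = 100;

SELECT id
FROM items
ORDER BY embedding <=> '[1,2,3]'
LIMIT 5;
```

On a table this small, the planner may still prefer a sequential scan. Running `EXPLAIN` on the query against realistic data shows whether the index is actually used.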

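2. IVFFlat Index (Inverted File Flat)

Divides the vectors into lists (clusters) and, at query time, searches only the lists closest to the query vector. It builds faster and uses less memory than HNSW but has a worse speed/recall trade-off. Because the lists are computed from existing rows, create the index after the table contains data.

Example:

CREATE INDEX ivfflat_index ON items USING ivfflat (embedding vector_l2_ops) WITH (lists = 100);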
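IVFFlat has two main knobs. `lists` is fixed when the index is built. pgVector's documentation suggests about rows / 1000 for up to 1M rows and sqrt(rows) beyond that. `ivfflat.probes` is set per query and defaults to 1, and sqrt(lists) is the suggested starting point. The sketch below uses an inner-product index to show a non-default operator class. The index name is hypothetical, and `probes = 10` is simply sqrt(100).

```sql
CREATE EXTENSION IF NOT EXISTS vector;
-- Assumed table from the article's examples (ideally already populated).
CREATE TABLE IF NOT EXISTS items (id bigserial PRIMARY KEY, embedding vector(3));

-- vector_ip_ops pairs with the <#> operator used in the query below.
CREATE INDEX items_embedding_ivfflat_ip
    ON items USING ivfflat (embedding vector_ip_ops)
    WITH (lists = 100);

-- Number of lists searched per query: higher = better recall, slower queries.
SET ivfflat.probes = 10;

SELECT id
FROM items
ORDER BY embedding <#> '[1,2,3]'
LIMIT 5;
```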

Choosing the Right Distance Metric and Index

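The choice of distance metric and index depends on your application. For the metric, use the one your embedding model was designed for. Cosine distance is a common default for text embeddings, and if your vectors are normalized to length 1, inner product produces the same ranking and is cheaper to compute. Reserve Hamming and Jaccard distance for binary (bit) vectors. For the index, HNSW is usually the better choice when query speed and recall matter most, while IVFFlat builds faster and uses less memory. Whichever you choose, create it with the operator class that matches the operator in your ORDER BY clause.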
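The relationship between cosine distance and inner product for normalized vectors is easy to check directly. For unit-length vectors, cosine similarity equals the inner product. Cosine distance is therefore 1 + (a <#> b), and both operators rank rows identically. The two literal vectors below are illustrative. pgVector stores single-precision floats, so the two columns may differ in the last few digits.

```sql
CREATE EXTENSION IF NOT EXISTS vector;

-- Both vectors have length 1 (0.6^2 + 0.8^2 = 1), so cosine distance = 1 + (a <#> b).
SELECT a <=> b       AS cosine_distance,      -- about 0.04
       1 + (a <#> b) AS one_plus_negative_ip  -- about 0.04, up to float rounding
FROM (SELECT '[0.6,0.8]'::vector AS a, '[0.8,0.6]'::vector AS b) AS pair;
```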

Conclusion

pgVector bridges the gap between traditional relational databases and modern AI-driven applications by enabling efficient vector operations directly in PostgreSQL. With its rich support for distance metrics and indexing techniques, it’s a powerful tool for building intelligent, scalable systems.

Explore pgVector for your next AI-powered application and unlock the full potential of vector embeddings within PostgreSQL.
