Understanding Activation Functions in Neural Networks: A Comprehensive Guide

Introduction

Activation functions play a crucial role in neural networks by allowing them to learn complex patterns in data. They do this by introducing non-linearity. Each function decides whether, and how strongly, a neuron is activated. Without such a non-linear step, any stack of layers would collapse into a single linear transformation, however deep the network. This capability allows neural networks to handle tasks such as image recognition and natural language processing with remarkable success.
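The collapse into a single linear transformation can be checked directly. The sketch below uses plain Python and assumes no ML library. The weight matrices and input are arbitrary illustrative values, and the helper names `matvec` and `matmul` are hypothetical.

```python
# Minimal sketch: without a non-linear activation, two stacked linear layers
# are equivalent to a single linear layer. Weights and input are arbitrary
# illustrative values (assumptions), not taken from any trained model.

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrices A and B (both lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def relu(v):
    """Element-wise ReLU: max(0, v_i)."""
    return [max(0.0, vi) for vi in v]

W1 = [[1.0, -2.0], [0.5, 1.0]]
W2 = [[2.0, 1.0], [-1.0, 3.0]]
x = [1.0, 1.0]

# Two linear layers applied in sequence...
two_layers = matvec(W2, matvec(W1, x))
# ...give the same result as one linear layer whose weight matrix is W2 @ W1.
one_layer = matvec(matmul(W2, W1), x)
print(two_layers, one_layer)  # [-0.5, 5.5] [-0.5, 5.5]

# With ReLU between the layers, the result for this input no longer matches.
with_relu = matvec(W2, relu(matvec(W1, x)))
print(with_relu)  # [1.5, 4.5]
```

The same argument holds for any number of layers: a product of weight matrices is still just one matrix.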

What is an Activation Function?

In the context of neural networks, an activation function is a mathematical function that determines the output of a single neuron (node) from its input. That input is typically the weighted sum of the neuron's inputs plus a bias. The function is applied at each neuron and decides whether, and to what degree, that neuron is activated ("fired"), based on the input it receives.
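A single neuron can be sketched in a few lines. This is an illustration under stated assumptions: the function name `neuron_output` is hypothetical, and the inputs, weights and bias are made-up values. It contrasts a hard on/off activation with a graded one.

```python
import math

def neuron_output(inputs, weights, bias, activation):
    """Output of one neuron: activation(weighted sum of inputs + bias)."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

def step(z):
    # Hard "fire / don't fire" decision with an assumed threshold of 0
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    # Graded output between 0 and 1 instead of a hard on/off decision
    return 1.0 / (1.0 + math.exp(-z))

# Illustrative values (assumptions): here the weighted sum plus bias is about -0.4
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

print(neuron_output(inputs, weights, bias, step))     # 0.0: the neuron does not fire
print(neuron_output(inputs, weights, bias, sigmoid))  # a value just below 0.5
```

The neuron's arithmetic is identical in both calls. Only the activation function changes how the same weighted sum is turned into an output.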

Types of Activation Functions

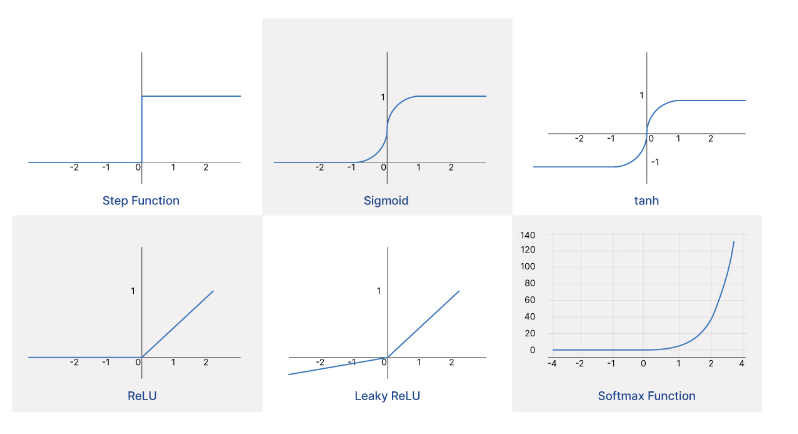

Six commonly used activation functions are:

Binary Step Function

Linear Activation Function

Sigmoid or Logistic Function

Hyperbolic Tangent (Tanh) Function

Rectified Linear Unit (ReLU)

Softmax Function
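The six functions above can each be written in a few lines. This is a minimal sketch in plain Python for scalar inputs; softmax is the exception and takes a list of scores. It relies on several assumed defaults. The threshold of the binary step is 0, and the slope `a` of the linear function is 1. The sigmoid branches on the sign of its input, and softmax subtracts the maximum score. Both are common tricks to avoid floating-point overflow, not part of the mathematical definitions.

```python
import math

def binary_step(z, threshold=0.0):
    """1 if z reaches the threshold (assumed 0), else 0."""
    return 1.0 if z >= threshold else 0.0

def linear(z, a=1.0):
    """Linear activation a * z (slope a assumed to be 1 by default)."""
    return a * z

def sigmoid(z):
    """Logistic function 1 / (1 + e^-z), squashing z into (0, 1)."""
    # Branch on the sign so math.exp never receives a large positive argument.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    """Hyperbolic tangent, squashing z into (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Rectified linear unit: max(0, z)."""
    return max(0.0, z)

def softmax(zs):
    """Turn a list of scores into probabilities that sum to 1."""
    m = max(zs)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

for z in (-2.0, 0.0, 2.0):
    print(z, binary_step(z), linear(z), round(sigmoid(z), 3), round(tanh(z), 3), relu(z))

print([round(p, 3) for p in softmax([1.0, 2.0, 3.0])])
```

The first five functions act on each neuron independently. Softmax normalizes across a whole layer, which is why it is typically used on the output layer of a multi-class classifier.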

Why Use Different Activation Functions?

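The choice of activation function can significantly affect how well and how quickly a neural network learns. Different functions suit different roles:

- Sigmoid is a common choice for the output of a binary classifier.
- Softmax is a common choice for the output of a multi-class classifier.
- ReLU is often preferred for the hidden layers of deep networks.

ReLU's gradient is 1 for every positive input, so it is less prone to vanishing gradients than sigmoid or tanh, whose gradients shrink toward zero as inputs grow large in magnitude. ReLU is also cheap to compute, which tends to speed up convergence during training.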
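The vanishing-gradient effect can be illustrated with the chain rule. This is a deliberately simplified sketch. It assumes all weights are 1 and every layer sees the same pre-activation, so only the activation's derivative scales the gradient as it flows backward. The helper name `chained_gradient` is hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # derivative of sigmoid; its maximum is 0.25, at z = 0

def relu_grad(z):
    # Derivative of ReLU; the value at exactly z = 0 is taken as 0 by convention here
    return 1.0 if z > 0 else 0.0

def chained_gradient(grad_fn, z, depth):
    """Product of activation derivatives across `depth` layers.

    Simplifying assumption: weights are 1 and every layer sees the same
    pre-activation z, so only the activation's derivative shrinks the signal.
    """
    g = 1.0
    for _ in range(depth):
        g *= grad_fn(z)
    return g

# Sigmoid at its best case (z = 0) versus ReLU on a positive input
for depth in (1, 5, 10, 20):
    print(depth, chained_gradient(sigmoid_grad, 0.0, depth), chained_gradient(relu_grad, 1.0, depth))
```

Even in sigmoid's most favorable case, the gradient is multiplied by at most 0.25 per layer, so after 10 layers it is below one millionth. ReLU passes the gradient through unchanged for positive inputs. Its gradient is 0 for negative inputs, however, which is why ReLU units can stop learning if their inputs stay negative.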

Conclusion

Understanding and selecting the right activation function is key to designing effective neural networks. By matching the characteristics of these functions to the specific requirements of your task, you can noticeably improve model performance.
