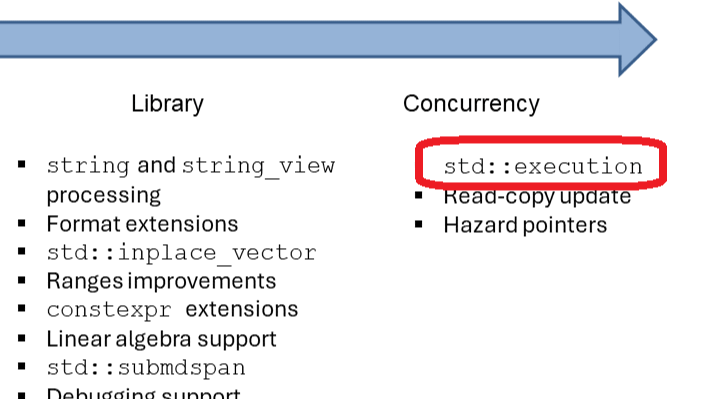

std::execution: Asynchronous Algorithms

std::execution supports many asynchronous algorithms for various workflows. Presenting proposal P2300R10 is not easy. First, it is very powerful, and second, it is very long. So, I'll focus on specific aspects of the proposal.

What are the priorities of this proposal?

Priorities

The terms execution resource, execution agent, and scheduler are essential for the understanding of std::execution. Here are the first simplified definitions.

An execution resource is a program entity that manages a set of execution agents. Examples of execution resources include the active thread, a thread pool, or an additional hardware accelerator.

Each function invocation runs in an execution agent.

A scheduler is an abstraction of an execution resource with a uniform, generic interface for scheduling work onto that resource. It is a factory for senders.

Hello World

Here is the “Hello World” of std::execution once more. This time, you can run the program on Compiler Explorer, and my analysis goes deeper.

    // HelloWorldExecution.cpp

    #include <exec/static_thread_pool.hpp>
    #include <iostream>
    #include <stdexec/execution.hpp>

    int main() {
        exec::static_thread_pool pool(8);
        auto sch = pool.get_scheduler();

        auto begin = stdexec::schedule(sch);
        auto hi = stdexec::then(begin, [] {
            std::cout << "Hello world! Have an int.\n";
            return 13;
        });
        auto add_42 = stdexec::then(hi, [](int arg) { return arg + 42; });

        auto [i] = stdexec::sync_wait(add_42).value();

        std::cout << "i = " << i << '\n';
    }

First, here’s the output of the program: it prints “Hello world! Have an int.” followed by “i = 55”, because 13 + 42 is 55.

The program begins by including the necessary headers: <exec/static_thread_pool.hpp> for creating a thread pool, and <stdexec/execution.hpp> for the sender machinery such as schedule, then, and sync_wait.

In the main function, a static_thread_pool pool is created with 8 threads.

The get_scheduler member function of the thread pool is called to obtain the scheduler sch. A scheduler is a lightweight handle to an execution resource, and sch will be used to schedule work on the thread pool. In this case, the execution resource is a thread pool, but it could also be the main thread, the GPU, or a task framework.

The program then creates a series of senders whose work is executed by execution agents.

The first sender, begin, is created using the stdexec::schedule function, which returns a sender that completes on the execution resource behind the scheduler sch. stdexec::schedule is a so-called sender factory. There are more sender factories. I use the namespace of the upcoming standard:

execution::schedule

execution::just

execution::just_error

execution::just_stopped

execution::read_env

The next sender, hi, uses the sender adaptor stdexec::then, which takes the begin sender and a lambda. This lambda prints “Hello world! Have an int.” to the console and returns the integer 13. The third sender, add_42, is also created using the sender adaptor stdexec::then. It takes the hi sender and another lambda, which receives the integer arg and returns arg + 42. Creating these senders does not run anything yet; the work starts only when a sender consumer such as sync_wait starts the chain. Senders run asynchronously and are generally composable.

std::execution offers more sender adaptors:

execution::continues_on

execution::then

execution::upon_*

execution::let_*

execution::starts_on

execution::into_variant

execution::stopped_as_optional

execution::stopped_as_error

execution::bulk

execution::split

execution::when_all

In contrast to the senders, the sender consumer runs synchronously. The stdexec::sync_wait call blocks the calling thread until the add_42 sender completes. It returns an optional that holds a tuple of the produced values. The value member function is called on this result, and the structured binding unpacks the single tuple element into the variable i.

this_thread::sync_wait is the only sender consumer in the execution framework.

What’s Next?

In my next post, I will analyze a more challenging algorithm.