A Second Brain: How Large Multimodal Models (LMMs) Will Redefine Wearable Tech

Using ChatGPT for the last six months made me realize I need a second brain.

My second brain should be an LLM, but not one I have to type a prompt into to get something out of it. That's very limiting.

I have 3 simple asks from my second brain:

In other words, I want to give my LLM eyes and ears and wear it as a device.

The Online vs. Offline Challenge

Interacting with LLMs presents two contrasting experiences. On one hand, I'm highly impressed by their reasoning capabilities: summarization, classification, inference, and chain-of-thought reasoning. Unlike convolutional neural networks (CNNs), which had only a shallow understanding, these models are remarkable. On the other hand, there's the frustration of the training cutoff date: the model only knows what it was exposed to during training. It feels like, "I'm incredibly smart but don't know anything about the current world." In essence, the model operates offline.

To be truly useful, models need to be connected to real-time data. This is the key to unlocking their full potential for enterprises and individuals alike.

In the human body, the brain relies on real-time data from our senses. Similarly, we need to provide real-time input to LLMs to transition them from offline to online functionality.

LMMs: Large Multimodal Models

So they are all here now: GPT-4o, Google Gemini Live, Meta Llama 3.2, and more. Over the last year, large language models have evolved into multimodal models. The fundamental capability is that the input and output modalities now include voice, image, video, and text.

Multimodal prompting typically refers to a prompt composed of an image and a text question that relates to it. There are a few cool examples of Gemini here.
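A multimodal prompt of this kind can be pictured as an ordered list of parts: a camera frame followed by a question about it. The sketch below is a minimal, vendor-neutral data structure under that assumption; the names `TextPart`, `ImagePart`, `MultimodalPrompt`, and `build_prompt` are hypothetical and do not correspond to the Gemini API or any other SDK.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class TextPart:
    text: str


@dataclass
class ImagePart:
    data: bytes                      # raw encoded image bytes (e.g. a camera frame)
    mime_type: str = "image/jpeg"    # assumed encoding, for illustration only


Part = Union[TextPart, ImagePart]


@dataclass
class MultimodalPrompt:
    parts: List[Part] = field(default_factory=list)

    def modalities(self) -> List[str]:
        # Report the modality of each part, preserving order
        return ["image" if isinstance(p, ImagePart) else "text" for p in self.parts]


def build_prompt(frame: bytes, question: str) -> MultimodalPrompt:
    # Hypothetical helper: the image comes first, then the question that refers to it
    if not frame:
        raise ValueError("camera frame is empty")
    return MultimodalPrompt(parts=[ImagePart(frame), TextPart(question)])


fake_frame = b"\xff\xd8\xff\xe0"  # placeholder JPEG header bytes, not a real image
prompt = build_prompt(fake_frame, "When was this building built?")
print(prompt.modalities())  # ['image', 'text']
```

How the parts are serialized and sent to a model is provider-specific and deliberately left out here.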

This is where voice UI becomes interesting, taking multimodal prompting to the next level. I can ask the LMM anything, and it will understand the context.

Voice UI: Kill the Skill, It's Time for a Conversation

Amazon pioneered voice UI, with an estimated investment of billions of dollars over the years. There are roughly 130,000 skills in the Alexa ecosystem. I stopped at around three: turning the lights on and off, playing music, and setting a 9-minute timer for the pasta. Some folks may get to 10. But it was too complex a UI for simple tasks, with little value.

But talking naturally to get anything done? We are at an inflection point in how we interact with machines.

LMMs & Smart Glasses?

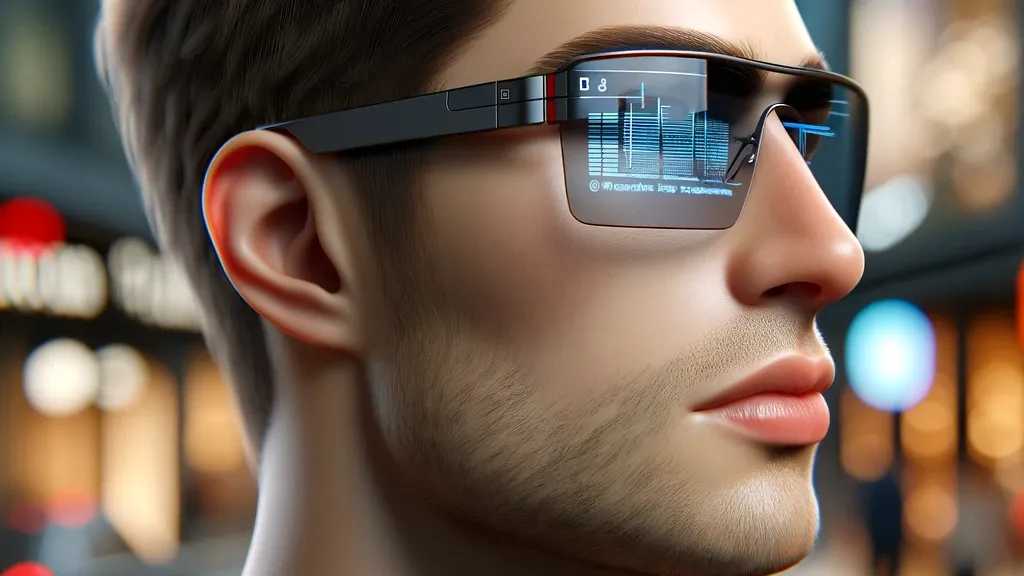

Here’s my theory: LMMs are the key technological enabler to make smart glasses truly useful.

Smart glasses equipped with microphones and cameras allow LMMs to "see" and "hear" what I do, providing real-time responses through voice or visual output on a heads-up display. Glasses are the perfect form factor to communicate with LMMs.

This will be an evolutionary process, similar to the journey of smartwatches.

Yes, Google Glass was a failure a decade ago, but I believe now is the right time due to these new factors:

Use Cases: Brand-New Hands-Free Experiences

Here are a few examples:

Information:

"When was this building built?" – "You are looking at the Coit Tower, built in 1933."

"Can I find these shoes elsewhere at a better price?" – "You are looking at the Nike Air Jordan 1 Low. The price on Nike.com is higher than $139."

"Remind me of this guy's name." – "This is Joseph Hall, based on images from your contacts."


The common theme is conversational UI about "here and now" and receiving an immediate, relevant response.

Navigation: "How do I get to The Brothers' Restaurant?" Google Maps directions immediately appear on my heads-up display. While driving, I can also ask, "Take me to the nearest grocery store on my route with free parking." Hands-free, conversational UI, on my glasses.

Productivity & Application Integration:

"Set up a meeting with Johana Smith and send Zoom invites. The topic is the internal design of floor 5."

The Tech Behind It All: What’s Needed

So, what do we need to make this happen?

There are about 20 early-stage smart glasses products shipping today, but most focus on just one of these features: music (Amazon Echo Frames, Bose Frames, Carrera, Razer Anzu...), cameras (Ray-Ban Meta, Snapchat Spectacles 3), or heads-up displays (Xreal, Vuzix, Everysight Maverick). What LMMs need is all of these technologies working together.

Design

Finally, we can't overlook design. Google Glass was geeky and unattractive, a clear warning sign for potential mates. In contrast, Ray-Ban Meta smart glasses set a new standard by integrating advanced technology into the classic Ray-Ban design. I believe future smart glasses will not compromise on aesthetics and will continue in this direction.

The Real Barrier: All-Day Use at 50 Grams

Ultimately, it all comes down to the weight and battery life of these glasses. The technologies mentioned above are ready today. The real challenge? Keeping the glasses under 50 grams, the maximum weight for all-day wearability, while maintaining sufficient battery life for a day.

I believe it's achievable with the right architecture. Simply shrinking a mobile processor isn't the answer: running DDR memory and Linux introduces too much overhead. This challenge requires a far more energy-efficient solution in both compute and memory architecture. I'll delve into this more in a future post.
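The weight-versus-battery trade-off comes down to simple energy arithmetic: a small battery stores a fixed number of milliwatt-hours, and the average power draw decides how long it lasts. The sketch below assumes a hypothetical 150 mAh cell at a 3.7 V nominal voltage, a 16-hour day, and an illustrative duty-cycled load; none of these numbers describe a real product, and the function names are made up for this example.

```python
def battery_energy_mwh(capacity_mah: float, voltage_v: float = 3.7) -> float:
    """Stored energy in mWh: charge (mAh) times nominal cell voltage (V)."""
    return capacity_mah * voltage_v


def average_power_mw(active_mw: float, idle_mw: float, duty_cycle: float) -> float:
    """Time-weighted average draw when sensors and compute are active a fraction of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return active_mw * duty_cycle + idle_mw * (1.0 - duty_cycle)


def runtime_hours(capacity_mah: float, voltage_v: float, avg_power_mw: float) -> float:
    """Hours of operation at a constant average power draw."""
    return battery_energy_mwh(capacity_mah, voltage_v) / avg_power_mw


def power_budget_mw(capacity_mah: float, voltage_v: float, target_hours: float) -> float:
    """Maximum average draw that still lasts target_hours."""
    return battery_energy_mwh(capacity_mah, voltage_v) / target_hours


# Hypothetical numbers for illustration only, not measurements of any product.
CAPACITY_MAH = 150   # assumed small cell that could fit a ~50 g frame
VOLTAGE_V = 3.7      # typical Li-ion nominal voltage
DAY_HOURS = 16       # assumed waking day

budget = power_budget_mw(CAPACITY_MAH, VOLTAGE_V, DAY_HOURS)        # 555 mWh / 16 h ≈ 34.7 mW
avg = average_power_mw(active_mw=500, idle_mw=20, duty_cycle=0.05)  # 25 + 19 = 44 mW
print(f"budget {budget:.1f} mW, average {avg:.1f} mW, "
      f"runtime {runtime_hours(CAPACITY_MAH, VOLTAGE_V, avg):.1f} h")
```

With these assumed values the average draw (about 44 mW) exceeds the roughly 35 mW budget, so the glasses would run for only about 12.6 hours. Closing that gap means cutting active power, idle power, or how often the sensors wake up, which is why the compute and memory architecture matters so much.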

Predictions

I'll take the risk of predicting how this could play out.

Meta has established itself as a key player in both large language models (LLMs) and device technologies, particularly with its Ray-Ban Meta smart glasses. They've already announced the integration of these glasses with their LLMs, signaling an exciting direction for wearable AI. However, Meta faces a strategic ecosystem disadvantage: they don't own a smartphone platform. As a result, their strategy appears to be making smart glasses the next personal computing platform.

Unfortunately, the technology hasn't fully matured yet. Even with a 4 nm SoC such as the Snapdragon W5+ powering these devices, we are still several years away from realizing that vision. The recent unveiling of the Orion prototype smart glasses further illustrates this point.

Google is currently the player that has all the right pieces in place: Gemini Live, Pixel phones, Android OS, and earbuds. I’m particularly optimistic about Google's approach here. With the Pixel Buds Pro 2 already integrating with Gemini Live, the “conversational experience” is well underway.

The next logical step for Google is smart glasses. My intuition suggests that the first generation will be more of an Android accessory rather than a fully standalone device. It’s possible that Gemini Live could run primarily on the phone, which would still provide low latency and offer better battery life—a sensible trade-off.
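The phone-as-companion trade-off can be framed as a simple offload decision: send the captured audio or image to the phone when transmitting it costs the glasses less energy than computing locally, and when the round trip still feels conversational. This is a minimal sketch under those assumptions; `Request`, `PhoneLink`, `should_offload`, and every number below are hypothetical and do not describe how Gemini Live or any Pixel device actually works.

```python
from dataclasses import dataclass


@dataclass
class Request:
    payload_kb: float        # captured audio/image that would be sent to the phone
    local_compute_mj: float  # energy to run inference on the glasses themselves


@dataclass
class PhoneLink:
    energy_per_kb_mj: float  # glasses-side radio energy per kilobyte sent
    round_trip_ms: float     # glasses -> phone inference -> glasses, end to end


def should_offload(req: Request, link: PhoneLink, latency_budget_ms: float) -> bool:
    """Offload when sending the data costs less energy than computing locally
    and the phone round trip still fits the conversational latency budget."""
    transmit_mj = req.payload_kb * link.energy_per_kb_mj
    saves_energy = transmit_mj < req.local_compute_mj
    fast_enough = link.round_trip_ms <= latency_budget_ms
    return saves_energy and fast_enough


# Hypothetical values for illustration only.
question = Request(payload_kb=50, local_compute_mj=2000)
nearby_phone = PhoneLink(energy_per_kb_mj=0.5, round_trip_ms=300)
print(should_offload(question, nearby_phone, latency_budget_ms=500))  # True
```

If the link were slower (for example `round_trip_ms=800` against the same 500 ms budget), the function would return `False`. A real design would also need a fallback for that case, since a 50-gram device may not be able to run the full model locally at all.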

Apple and OpenAI: A Marriage Made in Heaven

Through this partnership, OpenAI is poised to reach roughly one billion people worldwide, many of them in wealthier demographics. Meanwhile, Apple stands to gain access to one of the best AI engines available without needing to invest $10B (or more) of its own. I believe this partnership will extend beyond the iPhone. We're likely to see LMMs integrated into future iterations of AirPods and smart glasses.

While Apple may not be the first brand to launch smart glasses, they are very likely to be the first to get it right—nailing the form factor, system architecture, sleek design, and battery life.

Achieving all of this in a 50-gram device will require many intelligent trade-offs. This challenge plays directly to Apple's core strengths: highly optimized hardware, best-in-class software integration, and a focus on targeted use cases.

Samsung is typically a fast follower in new product categories. They will likely take an excellent Google Android-based design as their reference point and scale it up. Expect a version that's sleeker, lighter, faster, and available across hundreds of thousands of retail locations globally.

As we await these breakthroughs, one thing is certain: the integration of large multimodal models into wearables is not just a possibility; it's inevitable. When it arrives, our interactions with technology will be transformed forever. This is my vision: a second brain packed into smart glasses.

Until then, I’ll continue to rely on my own brain.

R&D Chief

Check out the 'Rabbit R1' device and the TED talk by Imran Chaudhri: https://www.youtube.com/watch?v=gMsQO5u7-NQ. So far, a total failure...

Technical Director, Broadcast Producer and streaming solutions architect

Not the same implementation, but the same topic: I have gotten into the habit of having voice conversations with GPT on long drives (or when the mood strikes or the need arises). Topics range from information on specific subjects to brainstorming ideas and even philosophical discussions on topics I like (yes, I know, I am weird like that). So while it's not exactly a second brain, it is definitely a way to increase my knowledge throughput and upskill my own.

Thanks, Elad. We already have many 'brains' in daily use, so I expect the 'low-hanging fruit' for LLMs to be existing connected #edgeai devices first, such as #tws, watches, rings, in-car systems, and similar, each according to the sensors available on it and around it (e.g., the phone).