Scaling Isn’t Dead: How Reasoning Models and Synthetic Data Are Redefining AI Progress

Tony Grayson

VADM Stockdale Leadership Award Recipient | Tech Executive | Ex-Submarine Captain | Top 10 Datacenter Influencer | Veteran Advocate

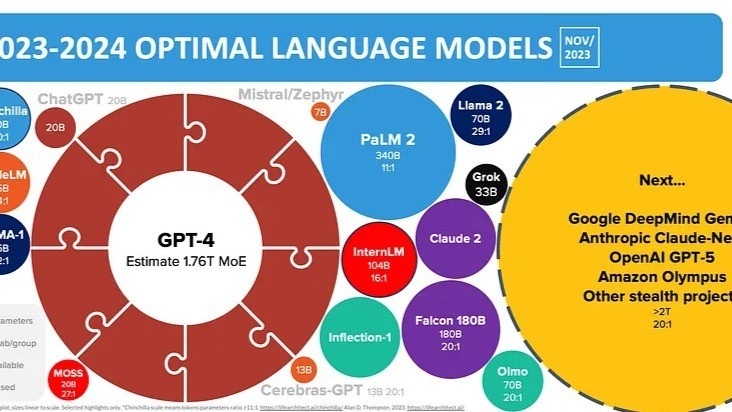

Recent debates in the AI community have questioned whether scaling laws still hold, that is, whether adding more data and compute keeps producing better AI models. Some point to reported difficulties with advanced models such as OpenAI's Orion or Anthropic's Claude 3.5 Opus as evidence of diminishing returns, but that conclusion may be shortsighted. Innovations such as reasoning models and synthetic data are redefining what scaling means, paving the way for a more sustainable and effective AI development trajectory.

Reasoning Models: A Paradigm Shift in Scaling

The traditional approach to scaling often emphasized size—more parameters, larger datasets, and greater computing power. However, models like OpenAI's o1 demonstrate a critical shift: focusing on reasoning capabilities rather than raw data and parameter increases.
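To make the size-first view concrete, here is a minimal sketch of the kind of power-law curve that scaling-law discussions usually have in mind, where predicted loss falls as parameters and training tokens grow. The constants, the function names, and the 20-tokens-per-parameter ratio are illustrative assumptions chosen for this example, not fitted values from any specific model.

```python
def predicted_loss(n_params: float, n_tokens: float,
                   irreducible: float = 1.7,
                   a: float = 400.0, alpha: float = 0.34,
                   b: float = 410.0, beta: float = 0.28) -> float:
    """Toy power law: loss = E + A / N**alpha + B / D**beta.

    All constants are placeholders for illustration only.
    """
    return irreducible + a / n_params**alpha + b / n_tokens**beta


def loss_table(sizes, tokens_per_param: float = 20.0):
    """Return (model size, predicted loss) pairs, scaling data with size.

    tokens_per_param is an assumed ratio, not a recommendation.
    """
    return [(n, predicted_loss(n, n * tokens_per_param)) for n in sizes]


if __name__ == "__main__":
    for n, loss in loss_table([1e8, 1e9, 1e10, 1e11]):
        print(f"{n:.0e} params -> predicted loss {loss:.3f}")
```

Under these assumed constants, each tenfold increase in size still lowers the predicted loss, but by a smaller amount than the previous one. That shrinking gain is what critics describe as diminishing returns, and it is why gains from other sources matter.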

Reasoning models aim to improve logical inference, generalization, and problem-solving skills. Unlike their predecessors, these models are trained to work through intermediate steps rather than jump straight to an answer, which helps them on tasks requiring multi-step deductions. For instance, OpenAI describes o1 as trained with reinforcement learning to produce a chain of thought before it responds, an approach suited to structured problems like math proofs or stepwise decision-making that are hard to solve through larger models and datasets alone.
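As a rough illustration of why extra computation at answer time can help on multi-step problems, the sketch below uses majority voting over several independently sampled attempts (often called self-consistency). This is a swapped-in, simplified technique and not a description of how o1 works internally. The `noisy_solver` function is a hypothetical stand-in for one sampled reasoning chain, and the 60% per-attempt accuracy is an assumed figure.

```python
import random
from collections import Counter


def noisy_solver(true_answer: int, p_correct: float, rng: random.Random) -> int:
    """Hypothetical stand-in for one reasoning attempt.

    Returns the right answer with probability p_correct, otherwise one of
    four nearby wrong answers.
    """
    if rng.random() < p_correct:
        return true_answer
    return true_answer + rng.choice([-2, -1, 1, 2])


def majority_vote(true_answer: int, n_samples: int,
                  p_correct: float, rng: random.Random) -> int:
    """Sample n_samples attempts and return the most common answer."""
    votes = Counter(
        noisy_solver(true_answer, p_correct, rng) for _ in range(n_samples)
    )
    return votes.most_common(1)[0][0]


def accuracy(n_samples: int, p_correct: float = 0.6,
             trials: int = 2000, seed: int = 0) -> float:
    """Fraction of trials in which the voted answer is correct."""
    rng = random.Random(seed)
    hits = sum(
        majority_vote(42, n_samples, p_correct, rng) == 42
        for _ in range(trials)
    )
    return hits / trials


if __name__ == "__main__":
    for k in (1, 5, 15):
        print(f"{k:>2} samples per question -> accuracy {accuracy(k):.2f}")
```

In this toy setup the model itself never changes. Accuracy rises only because more attempts are sampled and compared per question, which is one simple sense in which capability can scale with inference-time compute rather than with size.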

Synthetic Data: Post-Training Optimization at Scale

Another key innovation in reshaping scaling is the strategic use of synthetic data in post-training. Unlike traditional datasets, synthetic data is algorithmically generated to simulate specific scenarios or address edge cases that may be underrepresented in natural data.
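A minimal sketch of what "algorithmically generated" can mean in practice: the generator below produces subtraction problems with programmatically verified answers and deliberately oversamples an edge case (results that are zero or negative). The task, the `Example` type, and the 30% edge-case share are hypothetical choices made for illustration, not a description of any lab's actual pipeline.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    prompt: str
    answer: str
    is_edge_case: bool


def make_example(rng: random.Random, edge_case: bool) -> Example:
    """Build one labeled example; the answer is computed, not scraped."""
    a = rng.randint(1, 99)
    if edge_case:
        b = rng.randint(a, 199)    # b >= a, so the result is zero or negative
    else:
        b = rng.randint(0, a - 1)  # b < a, so the result is positive
    return Example(f"What is {a} - {b}?", str(a - b), edge_case)


def generate_dataset(n: int, edge_case_fraction: float = 0.3,
                     seed: int = 0) -> list[Example]:
    """Generate n examples, steering a chosen share toward the edge case."""
    rng = random.Random(seed)
    return [
        make_example(rng, rng.random() < edge_case_fraction)
        for _ in range(n)
    ]


if __name__ == "__main__":
    for ex in generate_dataset(5):
        print(ex.prompt, "->", ex.answer, "(edge case)" if ex.is_edge_case else "")
```

Because every answer is computed rather than collected, the labels are correct by construction, and the mix of ordinary and rare cases becomes a parameter that can be tuned.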

Advantages of Synthetic Data

Case Study: Reinforcement Learning

In areas like reinforcement learning, synthetic environments have revolutionized training. For example:


Quantifiable Impact

OpenAI and other leaders have reported that post-training with synthetic datasets can improve model accuracy by up to 20% in specialized domains like medical image analysis or natural disaster prediction.

Broader Implications of Evolving Scaling Approaches

Combining reasoning models with synthetic data is not just an incremental improvement; it redefines scaling itself. Scaling is no longer about brute force but about using smarter techniques to extract more value from existing compute and data.

Cross-Industry Examples

Scaling Law Misconceptions: Why the Doomsayers Are Wrong

While challenges like infrastructure costs and model training plateaus have emerged, they do not signify the end of scaling laws. Instead, they point to the need for a nuanced understanding of how scaling manifests in the modern AI era.

Some Data:

Recent benchmarks indicate:

The perception that AI scaling laws have reached their limits is rooted in an outdated view of what scaling entails. Innovations like reasoning models and synthetic data have expanded the definition of scaling, moving the focus from size to sophistication. OpenAI's o1 and similar efforts exemplify this shift, showing that AI progress remains robust and dynamic.

Scaling isn’t dead; it’s evolving. This evolution is making AI smarter, more adaptable, and better equipped to tackle real-world challenges. As the industry continues to innovate, scaling doomsayers may be proven wrong.