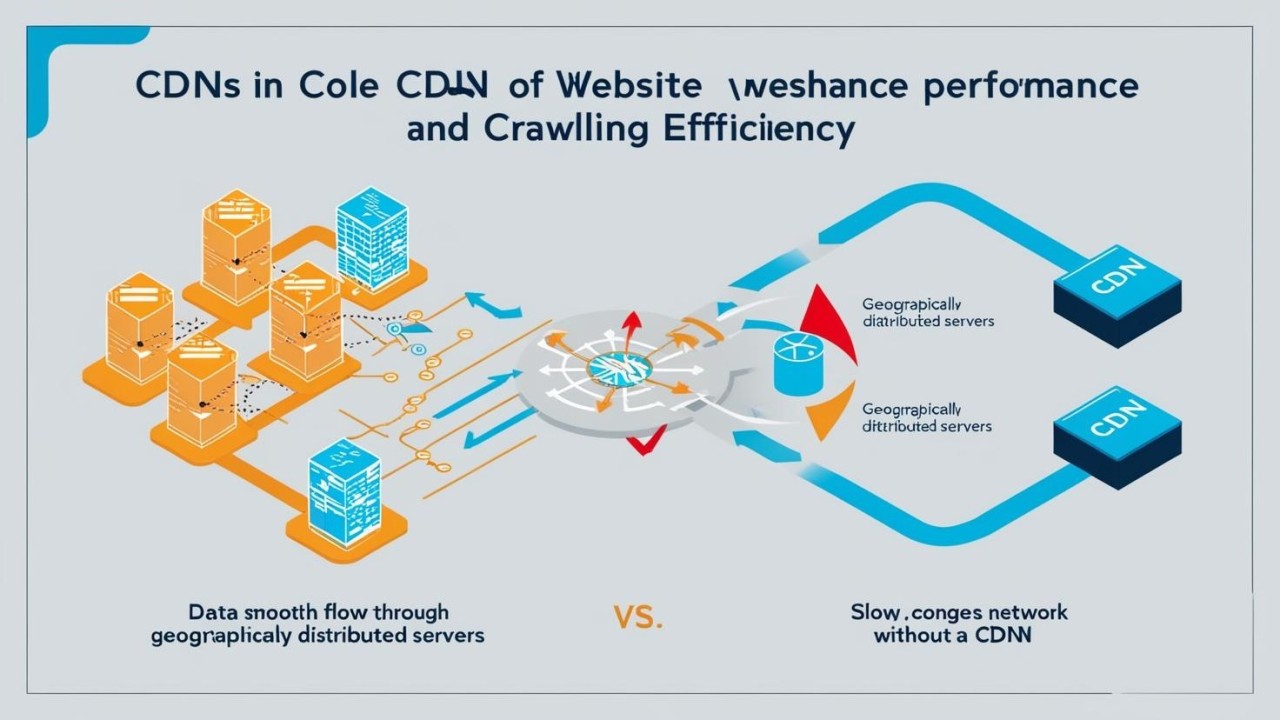

The Role of CDNs in Enhancing Website Performance and Crawling Efficiency

Saurabh Anand

AI Marketer | Top Voice 2024 | Google Digital Marketer | SEO | Link Building | Keyword Researcher | Social Media Analyst | LinkedIn Creator | Content Connection India

In the digital era, where website speed and user experience are paramount, Content Delivery Networks (CDNs) play a central role. By delivering content from servers close to users, CDNs reduce latency, improve load times, and keep sites responsive even during traffic surges. In this article, we’ll look at why CDNs matter for website optimization, how they affect search engine crawling, and the challenges they can introduce.

What is a CDN?

At its core, a CDN is an intermediary between your website's origin server and its users. By distributing content across a network of servers worldwide, CDNs enhance content delivery speed, reduce server load, and provide a layer of protection against malicious attacks.

Key Functions of CDNs:

A notable example of CDN-based attack protection is Cloudflare, which mitigated a record-breaking 4.2 Tbps distributed denial-of-service (DDoS) attack on October 21, 2024, demonstrating the scale of attack traffic a modern CDN can absorb.

How CDNs Improve Website Efficiency

1. Enhanced User Experience

CDNs help users access content quickly regardless of their geographic location, because requests are served from a nearby edge server rather than a distant origin. Faster page loads, in turn, are associated with higher engagement and conversion rates.
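Real CDNs steer users to a nearby edge with techniques such as anycast or DNS-based routing. As a deliberately simplified sketch, assuming we already have a measured round-trip time from the user to each candidate edge, picking the fastest edge is just a minimum over those measurements. The `EdgeServer` type, the location names, and the latency figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EdgeServer:
    """A hypothetical CDN edge location with a measured latency to one user."""
    name: str
    rtt_ms: float  # round-trip time from this user to the edge, in milliseconds


def pick_edge(edges: list[EdgeServer]) -> EdgeServer:
    """Return the edge server with the lowest round-trip time for this user."""
    if not edges:
        raise ValueError("no edge servers available")
    return min(edges, key=lambda e: e.rtt_ms)


# Illustrative measurements for a user located in Europe (made-up numbers).
edges = [
    EdgeServer("frankfurt", 18.0),
    EdgeServer("mumbai", 140.0),
    EdgeServer("virginia", 95.0),
]
print(pick_edge(edges).name)  # frankfurt
```

The user in this example is served from Frankfurt, so each request avoids a much longer round trip to a distant origin.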

2. Reduced Server Load

By serving cached resources, CDNs offload the origin server, freeing up bandwidth and compute power for other tasks.
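The offloading can be made concrete with a minimal sketch of an edge cache with a time-to-live (TTL). It assumes a hypothetical `fetch_from_origin` function that stands in for a request to your origin server. Requests for the same resource within the TTL are answered from the edge, so the origin sees only the first one.

```python
import time
from typing import Callable


class EdgeCache:
    """Minimal TTL cache standing in for one CDN edge server (illustrative only)."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes],
                 ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: dict[str, tuple[bytes, float]] = {}  # path -> (body, expiry time)
        self.origin_fetches = 0  # how many requests actually reached the origin

    def get(self, path: str) -> bytes:
        now = self._clock()
        cached = self._store.get(path)
        if cached is not None and cached[1] > now:
            return cached[0]  # cache hit: the origin is not contacted
        body = self._fetch(path)  # cache miss or expired entry: go to the origin
        self.origin_fetches += 1
        self._store[path] = (body, now + self._ttl)
        return body


def fake_origin(path: str) -> bytes:
    """Hypothetical origin server that just echoes the requested path."""
    return f"content of {path}".encode()


cache = EdgeCache(fake_origin, ttl_seconds=60)
for _ in range(1000):
    cache.get("/styles/main.css")
print(cache.origin_fetches)  # 1
```

One thousand requests for the same stylesheet cost the origin a single fetch. The injectable `clock` parameter is there only so the expiry behavior can be tested without waiting.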

3. Traffic Filtering and Blocking

Advanced traffic management tools allow CDNs to:


4. Support During High-Traffic Events

Whether it’s a product launch or a viral campaign, CDNs spread the load across their edge servers and absorb most requests before they reach the origin, reducing the risk of server crashes and downtime.
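A back-of-the-envelope calculation shows why caching matters during a surge. If a fraction h of requests (the cache hit ratio) is served from edge caches, only the remainder reaches the origin. The traffic figure and the 95% hit ratio below are hypothetical, chosen purely for illustration:

```latex
R_{\text{origin}} = R_{\text{total}} \, (1 - h)

R_{\text{total}} = 1{,}000{,}000, \quad h = 0.95
\;\Rightarrow\; R_{\text{origin}} = 1{,}000{,}000 \times 0.05 = 50{,}000
```

Under these assumptions, the origin handles one request in twenty, even though visitors made a million.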

CDNs and Website Crawling

While CDNs enhance user experience, they also affect how search engine crawlers interact with websites.

Crawling Efficiency with CDNs

Rendering Considerations

Overprotective CDNs

Occasionally, CDNs may block legitimate crawlers due to aggressive flood protection mechanisms. This can lead to issues such as:

Best Practices for Managing CDNs and Crawling
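Aggressive flood protection, as described in the previous section, is one way legitimate crawlers get blocked. Seeing how a simple rate limiter behaves makes the mechanism clearer. The sketch below is a generic token-bucket limiter, not any particular CDN's algorithm, and the rate and burst values are hypothetical. A crawler that requests pages faster than the configured rate drains the bucket and starts getting rejected.

```python
class TokenBucket:
    """Generic token-bucket rate limiter for one client (illustrative sketch)."""

    def __init__(self, rate: float, capacity: float, start: float = 0.0):
        self.rate = rate          # tokens refilled per second (sustained requests/second)
        self.capacity = capacity  # maximum burst size
        self.tokens = capacity    # the bucket starts full
        self.last = start         # timestamp of the previous check, in seconds

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, never beyond capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        # A real CDN might answer a rejected request with an HTTP 429 or 503.
        return False


# Hypothetical limit: 2 requests/second with bursts of up to 5.
bucket = TokenBucket(rate=2.0, capacity=5.0)
# A crawler fetching 5 pages per second for 10 seconds.
results = [bucket.allow(i * 0.2) for i in range(50)]
print(f"allowed={results.count(True)} blocked={results.count(False)}")

# The same limit never blocks a crawler that fetches 1 page per second.
polite = TokenBucket(rate=2.0, capacity=5.0)
print(all(polite.allow(float(i)) for i in range(20)))  # True
```

The fast crawler gets through its initial burst and is then throttled. In this sketch, raising `rate` or `capacity` is what lets the same crawler through.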

Conclusion

CDNs are a powerful tool for enhancing website performance, protecting against cyber threats, and optimizing crawling. By leveraging CDN capabilities effectively and addressing potential challenges, website owners can ensure a seamless user experience and robust search engine presence.

Whether you’re managing a high-traffic site or preparing for rapid scaling, adopting a CDN is a strategic move that pays dividends in speed, security, and scalability.