RAG (Retrieval Augmented Generation) 101

Udara Nilupul

Machine Learning Engineer @ Ascentic | Former MLE @ Exedee | MSc. in DS and AI (R) - UOM | BSc. (Hons) in Engineering - UOJ

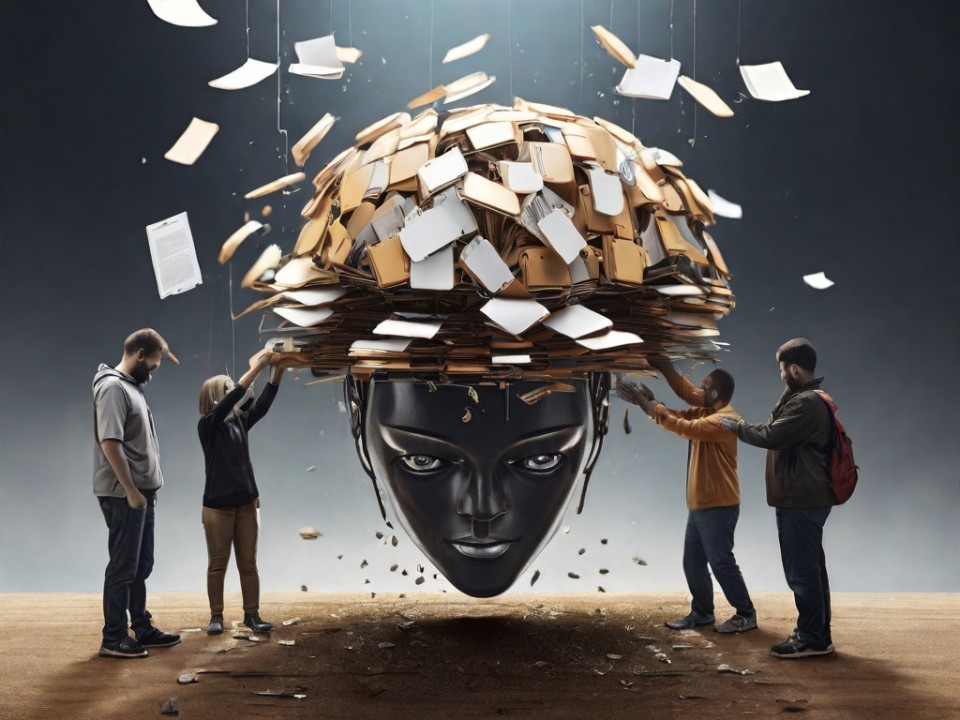

Large Language Models (LLMs) have recently become a major trend in building AI-based solutions. This is no surprise: they are trained on a massive text corpus covering almost every area of human knowledge, so they have a very broad knowledge base. But they sometimes produce made-up facts, outright falsehoods, or complete nonsense when they encounter something they don't know. This is due to many factors. Some of them are:

This phenomenon is often called "hallucination", and it has been the most common issue with almost all LLMs when they are applied to a downstream task. For example, ChatGPT and Google Bard (now Gemini) recorded hallucination rates of 10% and 29%, respectively, in the PHM Knowledge examination.

There are several ways to counter hallucination. The following are some common methods.

RAG, or Retrieval Augmented Generation, falls under domain adaptation and augmentation, and it is a proven and sustainable way to counter hallucination when using an LLM for a downstream task.

The concept of RAG is pretty simple: the LLM is connected to your own dataset. The LLM's parameters are kept intact, but the connection to the knowledge base lets the LLM refer to that data and produce better results, making it less vulnerable to hallucination.

How does this work? In a RAG pipeline, the LLM dynamically incorporates data from the KB (knowledge base) during generation. The model accesses and uses the KB data in real time, without any change to the model's own parameters, so its results are more contextually relevant to the request.
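To make the "parameters stay intact" point concrete, here is a minimal Python sketch of the augmentation step, assuming a chat-style LLM that accepts a list of role/content messages. The `llm` callable, the `SYSTEM_PROMPT` wording and the `build_messages`/`answer` helpers are all hypothetical; in practice `llm` would wrap whatever model API you use. Only the model's input changes, never its weights.

```python
from typing import Callable

# Hypothetical system prompt; the exact wording is an assumption for illustration.
SYSTEM_PROMPT = (
    "Answer the user's question using only the context below. "
    "If the context does not contain the answer, say you don't know."
)

def build_messages(query: str, passages: list[str]) -> list[dict[str, str]]:
    """Combine the system prompt, the retrieved KB passages and the user query."""
    context = "\n\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return [
        {"role": "system", "content": f"{SYSTEM_PROMPT}\n\nContext:\n{context}"},
        {"role": "user", "content": query},
    ]

def answer(query: str, passages: list[str],
           llm: Callable[[list[dict[str, str]]], str]) -> str:
    """The LLM is treated as a frozen black box: only the messages it receives change."""
    return llm(build_messages(query, passages))

if __name__ == "__main__":
    # Hypothetical stand-in for a real chat-completion call: it echoes the last context line.
    echo_llm = lambda msgs: msgs[0]["content"].splitlines()[-1]
    print(answer("What is the refund window?",
                 ["Refunds are accepted within 30 days of purchase."],
                 echo_llm))
```

Because the knowledge lives in `passages` rather than in the model, updating the KB changes the answers immediately, with no retraining.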

In a RAG pipeline, the reference data (the KB data) must first be "indexed". Indexing here means preparing the data so that it can be queried. When a user queries for a specific data point, the index should be able to narrow the KB down to the most contextually relevant data. The LLM then uses this filtered data, together with the user query and an instructive prompt (often called the system prompt), to produce the response.
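The indexing, retrieval and prompt-assembly steps can be sketched in Python as below. This is a toy under clear assumptions: `embed` is a hypothetical bag-of-words stand-in for a real embedding model, and the "index" is a plain in-memory list rather than a vector database. `Index`, `cosine` and `build_prompt` are illustrative names, not a library API.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class Index:
    """Indexing: embed every KB document once, up front."""

    def __init__(self, documents: list[str]) -> None:
        self.documents = documents
        self.vectors = [embed(d) for d in documents]

    def search(self, query: str, k: int = 2) -> list[str]:
        """Retrieval: return the k documents most similar to the query."""
        q = embed(query)
        ranked = sorted(range(len(self.documents)),
                        key=lambda i: cosine(q, self.vectors[i]), reverse=True)
        return [self.documents[i] for i in ranked[:k]]

def build_prompt(query: str, context: list[str], system_prompt: str) -> str:
    """Combine the system prompt, the retrieved context and the user query for the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"{system_prompt}\n\nContext:\n{joined}\n\nQuestion: {query}"

if __name__ == "__main__":
    kb = Index([
        "Our office is open Monday to Friday, 9am to 5pm.",
        "Refunds are accepted within 30 days of purchase.",
        "Shipping to Europe takes 5 to 7 business days.",
    ])
    question = "Are refunds accepted after purchase?"
    hits = kb.search(question, k=1)
    print(build_prompt(question, hits, "Answer using only the context."))
```

Replacing `embed` with a real embedding model and the list with a vector store keeps the same shape: embed the KB once at indexing time, embed the query at request time, and rank by similarity.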

Creating a RAG pipeline involves a few steps.
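A typical early step (our assumption about how you might structure it, since pipelines vary) is splitting source documents into overlapping chunks before they are embedded and indexed, so each retrieved piece is small enough to fit in the prompt. The sketch below splits on whitespace-separated words; `chunk_words`, `chunk_size` and `overlap` are hypothetical names, and real pipelines often split on tokens, sentences or headings instead.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of `chunk_size` words, each sharing `overlap` words
    with the previous chunk so that context is not cut off at chunk borders."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once this chunk already reaches the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks

if __name__ == "__main__":
    text = " ".join(str(i) for i in range(10))
    print(chunk_words(text, chunk_size=4, overlap=1))
```

The overlap trades a little duplicated storage for a lower chance that an answer is split across two chunks and missed at retrieval time.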


Myth-buster: RAG is not limited to text data; it can also be implemented for other data types such as audio and images. All you need is an embedding model that supports those formats and an LLM with multimodal capabilities.
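Retrieval itself does not care about modality once everything lives in one embedding space. The sketch below assumes a multimodal encoder (for example, a CLIP-style model for text and images) has already mapped every item and the query into vectors of the same dimension. The 3-dimensional vectors, file paths and the `Item`/`retrieve` names are made up for illustration.

```python
import math
from dataclasses import dataclass

@dataclass
class Item:
    kind: str              # "text", "image" or "audio"
    source: str            # the text itself or a file path (hypothetical)
    vector: list[float]    # embedding from a multimodal encoder (assumed precomputed)

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(query_vector: list[float], items: list[Item], k: int = 1) -> list[Item]:
    """Rank items of any modality by similarity to the query in the shared space."""
    return sorted(items, key=lambda it: cosine(query_vector, it.vector), reverse=True)[:k]

if __name__ == "__main__":
    # Made-up vectors for illustration only.
    items = [
        Item("text", "Quarterly sales report", [0.9, 0.1, 0.0]),
        Item("image", "photos/cat.jpg", [0.0, 0.2, 0.95]),
        Item("audio", "clips/meeting.wav", [0.3, 0.9, 0.1]),
    ]
    query = [0.05, 0.1, 0.9]  # e.g. the embedding of the text "a picture of a cat"
    print(retrieve(query, items)[0].source)  # photos/cat.jpg
```

The retrieved image (or audio clip) would then be passed to a multimodal LLM alongside the user's question, just as text passages are in a text-only pipeline.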

The following are some tools and tech stacks that can be used to implement a RAG pipeline for your needs.

The following is a more advanced multimodal RAG pipeline that allows users to query both text and images.
