RAG Foundry: A Framework for Enhancing LLMs for Retrieval Augmented Generation

Today's paper introduces RAG Foundry, an open-source framework for enhancing large language models (LLMs) for retrieval augmented generation (RAG) tasks. RAG Foundry integrates data creation, training, inference, and evaluation into a single workflow, allowing rapid prototyping and experimentation with various RAG techniques. The framework aims to address the complexity of implementing RAG systems and evaluating their performance.

Method Overview

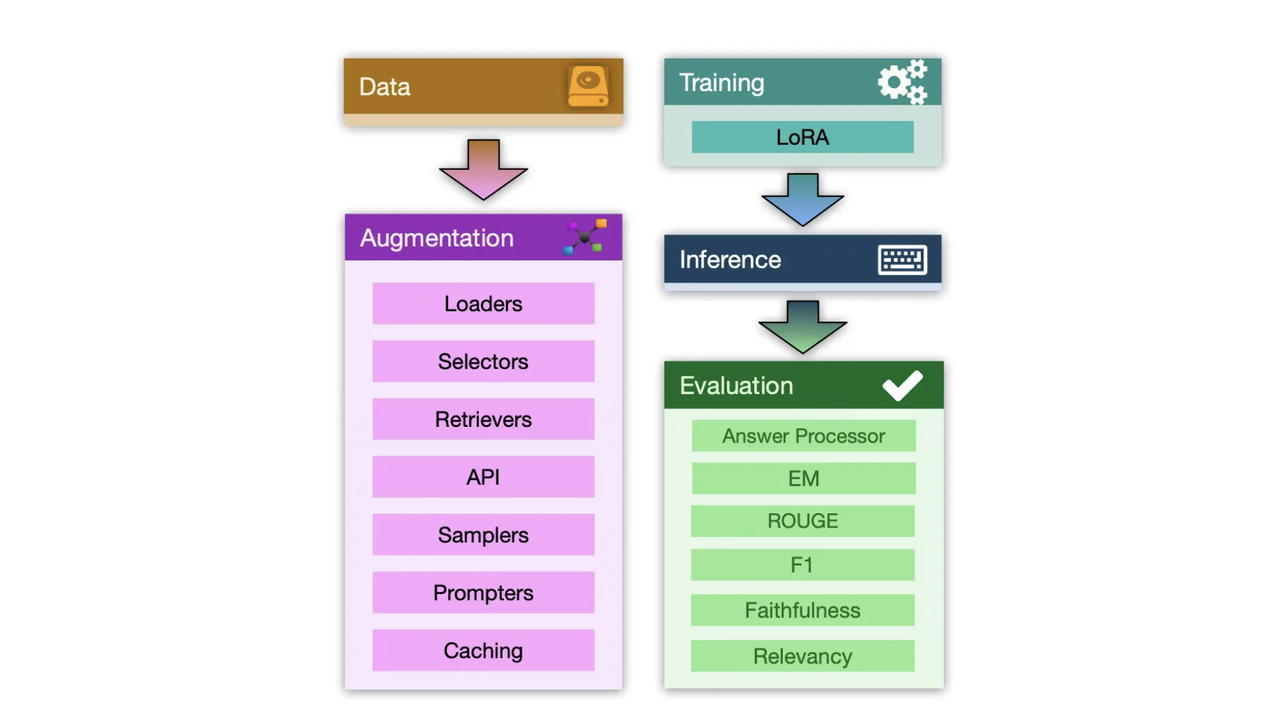

RAG Foundry provides an end-to-end experimentation environment for developing RAG-enhanced language models. The framework consists of four main modules: data creation, training, inference, and evaluation.

The data creation module allows users to create context-enhanced datasets by persisting RAG interactions. It supports processing steps such as dataset loading, information retrieval, prompt creation, and pre-processing. The module uses a pipeline structure whose customizable steps are configured using YAML files.
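To make the pipeline idea concrete, here is a minimal Python sketch of a config-driven data creation pipeline. It is not RAG Foundry's actual API: the step names (`retrieve`, `build_prompt`), the `CONFIG` layout, and the toy word-overlap retriever are all assumptions made for illustration, and the dictionary stands in for what a parsed YAML file might contain.

```python
import re
from typing import Callable, Dict, List

Example = Dict[str, object]

# Hypothetical toy corpus standing in for a real retrieval index.
CORPUS = [
    "Paris is the capital of France.",
    "The Nile is a river in Africa.",
]

def _words(text: str) -> set:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))

def retrieve(examples: List[Example], top_k: int = 1) -> List[Example]:
    """Retrieval step: rank passages by word overlap with the question."""
    for ex in examples:
        q = _words(str(ex["question"]))
        ranked = sorted(CORPUS, key=lambda p: -len(q & _words(p)))
        ex["context"] = ranked[:top_k]
    return examples

def build_prompt(examples: List[Example], template: str) -> List[Example]:
    """Prompt step: fill a template with the retrieved context and question."""
    for ex in examples:
        ex["prompt"] = template.format(
            context="\n".join(ex["context"]), question=ex["question"]
        )
    return examples

# Registry mapping step names (as they would appear in a config) to functions.
STEPS: Dict[str, Callable[..., List[Example]]] = {
    "retrieve": retrieve,
    "build_prompt": build_prompt,
}

# Stand-in for a parsed YAML config (assumed layout, not the framework's schema).
CONFIG = {
    "steps": [
        {"name": "retrieve", "args": {"top_k": 1}},
        {
            "name": "build_prompt",
            "args": {"template": "Context: {context}\nQuestion: {question}\nAnswer:"},
        },
    ]
}

def run_pipeline(config: dict, examples: List[Example]) -> List[Example]:
    """Apply each configured step in order to a copy of the examples."""
    data = [dict(ex) for ex in examples]
    for step in config["steps"]:
        data = STEPS[step["name"]](data, **step["args"])
    return data

if __name__ == "__main__":
    out = run_pipeline(CONFIG, [{"question": "What is the capital of France?"}])
    print(out[0]["prompt"])
```

Because each step is looked up by name, swapping the retriever or the prompt template only requires changing the config, which is the property the pipeline structure is meant to provide.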

The training module enables fine-tuning of models using the datasets created in the previous step. It supports techniques like LoRA (Low-Rank Adaptation) for efficient training.
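As a rough illustration of why LoRA is efficient: it freezes a weight matrix of shape d_out × d_in and trains two low-rank factors of shapes d_out × r and r × d_in instead. The sketch below only counts parameters. The layer size and rank are illustrative assumptions, not values taken from the paper.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Parameters updated when fine-tuning the full weight matrix."""
    return d_out * d_in

def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two low-rank factors B (d_out x r) and A (r x d_in)."""
    return rank * (d_out + d_in)

if __name__ == "__main__":
    # Illustrative sizes only (assumed, not from the paper).
    d_out, d_in, rank = 4096, 4096, 8
    full = full_params(d_out, d_in)
    lora = lora_trainable_params(d_out, d_in, rank)
    print(f"full: {full}, LoRA: {lora}, ratio: {lora / full:.4%}")
```

For this assumed layer, the trainable parameter count drops from about 16.8 million to 65,536, under half a percent of the original.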

The inference module generates predictions using the processed datasets and trained models. It is separated from evaluation to allow multiple evaluations on a single set of inference results.

The evaluation module runs configurable metrics to assess RAG techniques and tuning processes. It supports both local metrics (run on individual examples) and global metrics (run on the entire dataset). The module also includes an answer processor for custom output processing.
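The distinction between local and global metrics, and the role of an answer processor, can be sketched as follows. This is a simplified illustration under assumptions, not the framework's implementation: the function names, the record fields (`prediction`, `target`), and the choice of exact match and macro-F1 as example metrics are all hypothetical. The evaluation operates on stored inference records, so it can be rerun with different metrics without regenerating predictions.

```python
import re
from typing import Dict, List

def process_answer(text: str) -> str:
    """Hypothetical answer processor: keep text after 'Answer:' and normalize."""
    text = text.split("Answer:")[-1]
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())

def exact_match(prediction: str, target: str) -> float:
    """Local metric: scored independently for each example."""
    return float(process_answer(prediction) == process_answer(target))

def macro_f1(preds: List[str], targets: List[str]) -> float:
    """Global metric: needs the whole dataset to compute per-label F1."""
    f1s = []
    for label in sorted(set(targets)):
        tp = sum(p == label and t == label for p, t in zip(preds, targets))
        fp = sum(p == label and t != label for p, t in zip(preds, targets))
        fn = sum(p != label and t == label for p, t in zip(preds, targets))
        denom = 2 * tp + fp + fn
        f1s.append(2 * tp / denom if denom else 0.0)
    return sum(f1s) / len(f1s)

def evaluate(records: List[Dict[str, str]]) -> dict:
    """Run local metrics per record and global metrics over all records."""
    preds = [process_answer(r["prediction"]) for r in records]
    targets = [process_answer(r["target"]) for r in records]
    return {
        "local": {
            "exact_match": [
                exact_match(r["prediction"], r["target"]) for r in records
            ]
        },
        "global": {"macro_f1": macro_f1(preds, targets)},
    }

if __name__ == "__main__":
    # Stored inference results (made-up examples for illustration).
    records = [
        {"prediction": "Answer: Yes.", "target": "yes"},
        {"prediction": "Answer: no", "target": "yes"},
        {"prediction": "No", "target": "no"},
    ]
    print(evaluate(records))
```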


Results

The paper demonstrates the effectiveness of RAG Foundry through experiments on three knowledge-intensive question-answering datasets: TriviaQA, PubMedQA, and ASQA. The authors compare two baseline models (Llama-3 and Phi-3) under various RAG enhancement methods.

Key findings include:

The results highlight the importance of carefully evaluating different aspects of RAG systems across diverse datasets, as there is no one-size-fits-all solution.

Conclusion

RAG Foundry provides a comprehensive framework for developing and evaluating RAG-enhanced language models. By integrating data creation, training, inference, and evaluation into a single workflow, it enables quick experimentation with different RAG techniques. For more information, please consult the full paper.

Congrats to the authors for their work!

Fleischer, Daniel, et al. "RAG Foundry: A Framework for Enhancing LLMs for Retrieval Augmented Generation." arXiv preprint arXiv:2408.02545 (2024).
