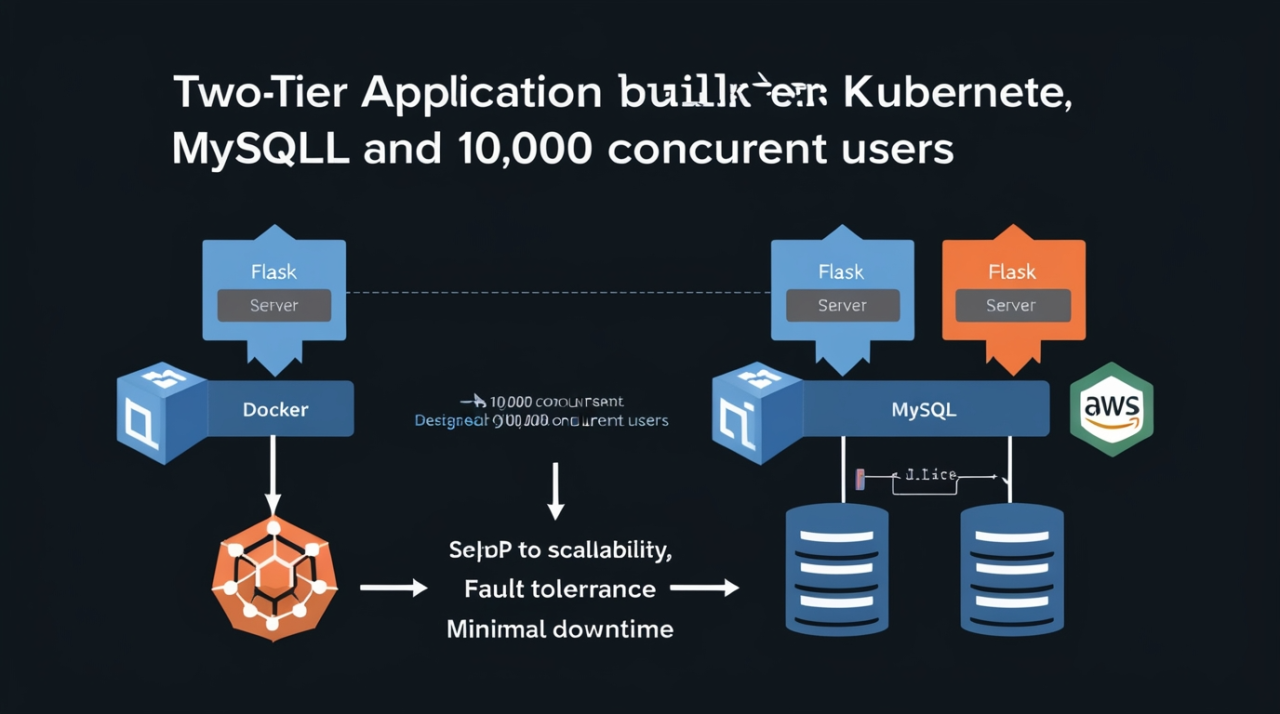

# Project Overview: Deploying a Two-Tier Application with Flask and MySQL to Handle 10,000 Concurrent Users

## Introduction

Scalability, fault tolerance, and high availability are core requirements for modern web applications. This article describes a project to deploy a two-tier application built on Flask and MySQL and designed to handle 10,000 concurrent users. The project followed established DevOps practices, using Docker, Kubernetes, and AWS services to meet these goals.

## Situation

The objective was to deploy a two-tier application capable of handling 10,000 concurrent users while following DevOps best practices. The application stack consisted of a Flask web server and a MySQL database. The primary concerns were scalability, fault tolerance, and minimal downtime.

## Task

The work was divided into five tasks:

1. Containerizing the application using Docker.

2. Setting up a Kubernetes cluster with Kubeadm for initial deployment.

3. Transitioning the deployment to AWS Elastic Kubernetes Service (EKS) for enhanced fault tolerance.

4. Packaging and deploying Kubernetes manifests using Helm.

5. Ensuring a high-availability setup with load balancing.

## Action

### 1. Containerization with Docker

- Docker and Docker Compose: The application was containerized with Docker, giving it isolated and portable runtime environments. Docker Compose was used to define and run the Flask and MySQL containers together, which simplified managing and orchestrating the multi-container setup.

- Docker Hub Repository: Container images were pushed to Docker Hub, which served as a central, versioned repository. This simplified image management and ensured that every deployment environment ran the same images.
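
Because the same image is promoted unchanged from Docker Compose to Kubernetes, environment-specific settings such as database credentials are usually injected at runtime rather than baked into the image. The sketch below shows one way the Flask tier might read its MySQL settings from environment variables. The variable names (`MYSQL_HOST`, `MYSQL_PORT`, `MYSQL_USER`, `MYSQL_PASSWORD`, `MYSQL_DATABASE`) and the default host `mysql` (a typical Compose service name) are assumptions for illustration, not details from the original project.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping, Optional

# Hypothetical variable names; adjust to whatever the Compose file / manifests define.
REQUIRED = ("MYSQL_USER", "MYSQL_PASSWORD", "MYSQL_DATABASE")


@dataclass(frozen=True)
class DatabaseConfig:
    host: str
    port: int
    user: str
    password: str = field(repr=False)  # keep the secret out of logs and tracebacks
    database: str


def load_db_config(env: Optional[Mapping[str, str]] = None) -> DatabaseConfig:
    """Build the MySQL connection settings from environment variables."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        # Fail fast at container start-up instead of on the first request.
        raise RuntimeError("missing required settings: " + ", ".join(missing))
    return DatabaseConfig(
        host=env.get("MYSQL_HOST", "mysql"),  # assumed Compose/Kubernetes service name
        port=int(env.get("MYSQL_PORT", "3306")),
        user=env["MYSQL_USER"],
        password=env["MYSQL_PASSWORD"],
        database=env["MYSQL_DATABASE"],
    )
```

In Compose these values would typically come from the service's `environment:` section; in Kubernetes, from a ConfigMap for non-secret settings and a Secret for the password.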

### 2. Kubernetes Cluster Orchestration

- Automating Kubernetes Setup with kubeadm: kubeadm was used to bootstrap the initial Kubernetes cluster, standardizing how the control plane was initialized and how worker nodes joined it. This reduced manual configuration and the potential for errors.

- Transition to AWS EKS with eksctl: For greater scalability and fault tolerance, the deployment was migrated to AWS Elastic Kubernetes Service (EKS) using eksctl. Because EKS is a managed service that runs the Kubernetes control plane, it removed much of the operational overhead of managing the cluster infrastructure.
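
For illustration, an eksctl cluster definition can be generated programmatically. The sketch below assumes eksctl's `eksctl.io/v1alpha5` `ClusterConfig` schema with a single managed node group; the cluster name, region, availability zones, instance type, and node counts are hypothetical placeholders, not the project's actual settings.

```python
import json


def eks_cluster_config(name, region, zones, instance_type="t3.medium",
                       min_size=3, desired=3, max_size=6):
    """Return an eksctl ClusterConfig (eksctl.io/v1alpha5) as a plain dict."""
    # Our own fault-tolerance rule for this sketch: never run in a single zone.
    if len(zones) < 2:
        raise ValueError("use at least two availability zones for fault tolerance")
    if not (min_size <= desired <= max_size):
        raise ValueError("require min_size <= desired <= max_size")
    return {
        "apiVersion": "eksctl.io/v1alpha5",
        "kind": "ClusterConfig",
        "metadata": {"name": name, "region": region},
        "availabilityZones": list(zones),
        "managedNodeGroups": [{
            "name": f"{name}-workers",
            "instanceType": instance_type,
            "minSize": min_size,
            "desiredCapacity": desired,
            "maxSize": max_size,
        }],
    }


if __name__ == "__main__":
    cfg = eks_cluster_config(
        "flask-mysql", "us-east-1", ["us-east-1a", "us-east-1b", "us-east-1c"]
    )
    print(json.dumps(cfg, indent=2))
```

Since JSON is valid YAML, the printed output should be usable as `cluster.yaml` with `eksctl create cluster -f cluster.yaml`. Generating the file from code is only one option; a hand-written YAML file with the same fields is equally common.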

### 3. Helm Chart Package Management

- Helm Charts: Helm was used to package the Kubernetes manifests into charts. This enabled versioned releases and simplified deployment and configuration. With Helm charts, updates could be rolled out reliably and rolled back if problems appeared.
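
Per-environment configuration in Helm is usually expressed as a small override file layered on top of the chart's `values.yaml`. The sketch below approximates how Helm combines the two: nested maps merge recursively, scalars and lists are replaced, and a `null` override removes a default key. It is a simplified model for reasoning about overrides, not Helm's actual implementation, and the example values are hypothetical.

```python
import copy


def merge_values(defaults, overrides):
    """Approximate Helm's merge of user-supplied values into chart defaults."""
    merged = copy.deepcopy(defaults)  # never mutate the chart defaults
    for key, value in overrides.items():
        if value is None:
            # Helm treats an explicit null as "delete this default key".
            merged.pop(key, None)
        elif isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Nested maps are merged key by key.
            merged[key] = merge_values(merged[key], value)
        else:
            # Scalars and lists replace the default wholesale.
            merged[key] = copy.deepcopy(value)
    return merged


if __name__ == "__main__":
    chart_defaults = {
        "replicaCount": 2,
        "image": {"repository": "example/flask-app", "tag": "1.0.0"},
        "service": {"type": "ClusterIP", "port": 80},
    }
    prod_overrides = {"replicaCount": 6, "image": {"tag": "1.1.0"},
                      "service": {"type": "LoadBalancer"}}
    print(merge_values(chart_defaults, prod_overrides))
```

In a real release, the merged values are what `helm upgrade --install <release> <chart> -f values-prod.yaml` renders, and `helm rollback <release> <revision>` restores an earlier revision if a new release misbehaves.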


### 4. High-Availability Deployment Strategy

- Multi-Node Cluster Setup: A multi-node Kubernetes cluster was configured to eliminate single points of failure. This setup ensured that the application remained available even if individual nodes experienced issues.

- Load Balancer Integration: Load balancers were used to efficiently distribute incoming traffic across the nodes, optimizing application responsiveness and ensuring that the system could handle the load of 10,000 concurrent users.
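
The multi-node and load-balancing setup can be sketched as a Deployment whose replicas prefer to run on separate nodes, fronted by a Service of type `LoadBalancer` (on EKS, such a Service provisions an AWS Elastic Load Balancer). The replica count comes from a simple capacity estimate. The per-pod capacity of 500 concurrent users, the 25% headroom, the image name, and container port 5000 are all assumptions for illustration, not measurements from the project.

```python
import json
import math


def replicas_for(concurrent_users, users_per_pod, headroom=1.25, min_replicas=2):
    """Pods needed for the target load plus spare capacity (inputs are assumptions)."""
    return max(min_replicas, math.ceil(concurrent_users * headroom / users_per_pod))


def web_manifests(name, image, replicas, port=5000):
    """Return a Deployment and a LoadBalancer Service for the Flask tier."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Prefer (not require) different nodes, so a single node failure
                    # cannot take down every pod, yet scheduling still succeeds when
                    # there are more replicas than nodes.
                    "affinity": {"podAntiAffinity": {
                        "preferredDuringSchedulingIgnoredDuringExecution": [{
                            "weight": 100,
                            "podAffinityTerm": {
                                "labelSelector": {"matchLabels": labels},
                                "topologyKey": "kubernetes.io/hostname",
                            },
                        }],
                    }},
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                    }],
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",  # external load balancer spreads traffic over pods
            "selector": labels,
            "ports": [{"port": 80, "targetPort": port}],
        },
    }
    return [deployment, service]


if __name__ == "__main__":
    n = replicas_for(10_000, users_per_pod=500)  # 25 with the assumed numbers
    items = web_manifests("flask-web", "example/flask-app:1.0.0", n)
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

The printed `List` object should be accepted by `kubectl apply -f`. The real per-pod capacity would have to come from load testing; the formula only shows how that number translates into a replica count.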

## Result

The project yielded significant improvements in the application's performance and reliability:

- Scalability: The application successfully handled 10,000 concurrent users, demonstrating its ability to scale effectively.

- Reduced Downtime: Downtime was reduced by 60%, enhancing the overall availability and user experience.

- Fault Tolerance and High Availability: The migration to AWS Elastic Kubernetes Service (EKS) improved the application's fault tolerance and scalability, enabling it to withstand and recover from failures with minimal disruption.

## Application Development and Deployment for the Healthcare Industry

A second project focused on developing and maintaining a security application for the healthcare industry, aimed at simplifying procurement for buyers and sellers. Its key aspects were:

### Application Development and Big Data Pipeline Management

- Security Application Development: The application was developed with Python and PySpark and deployed on AWS OpenSearch for efficient data visualization and analysis.

- Big Data Pipelines: Built robust data pipelines that ingest both historic and live data from multiple sources (APIs, S3 buckets, and others) and load it into AWS Redshift. The pipelines were optimized for stability and speed, cutting processing time from 24 hours to 4 hours (a reduction of about 83%).
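
One common way to handle historic and live data in the same pipeline is a high-water mark: a backfill run starts with no watermark and processes everything, and each later run processes only records newer than the last one loaded. The plain-Python sketch below shows that idea with deduplication by key (the latest update wins). The `Record` fields and source tags are hypothetical, and because the original pipelines were built with PySpark, this illustrates the logic only, not the project's code.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Iterable, List, Optional, Tuple


@dataclass
class Record:
    source: str          # e.g. "api" or "s3" (hypothetical source tags)
    key: str             # business key used for deduplication
    updated_at: datetime
    payload: dict = field(default_factory=dict)


def incremental_batch(
    records: Iterable[Record], watermark: Optional[datetime]
) -> Tuple[List[Record], Optional[datetime]]:
    """Select records newer than the watermark, keep the latest per key,
    and return them oldest first together with the advanced watermark."""
    latest = {}
    for rec in records:
        # Skip anything a previous run has already loaded.
        if watermark is not None and rec.updated_at <= watermark:
            continue
        current = latest.get(rec.key)
        if current is None or rec.updated_at > current.updated_at:
            latest[rec.key] = rec
    batch = sorted(latest.values(), key=lambda r: r.updated_at)
    # With an empty batch the watermark stays where it was.
    new_watermark = max((r.updated_at for r in batch), default=watermark)
    return batch, new_watermark
```

A backfill would call `incremental_batch(historic_records, None)`, persist the returned watermark (for example in a control table), and pass it to each subsequent run over live data before loading the batch into the warehouse.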

### Technology Stack and Tools

- Tools and Technologies: The development process used Python, PySpark, AWS EMR, AWS Batch, AWS Fargate, AWS Deequ, AWS Redshift, and AWS OpenSearch.

- Optimization: The applications were built to be scalable, secure, and performant, and the data pipelines were tuned for stability and speed.

### Knowledge Sharing and Automation

- Training and Knowledge Sharing: Ran training sessions for employees on the security tools and architecture, covering Python, serverless, cloud, and DevOps topics.

- Automation and Monitoring: CircleCI was used to automate failure recovery, and Slack alerts were set up through webhooks, reducing application downtime from 2-3 days to one day.
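
Slack incoming webhooks accept an HTTP POST with a JSON body containing a `text` field. The sketch below builds and sends such an alert using only the standard library. The function names, message format, and the idea of calling it from a failing CircleCI job are assumptions for illustration; the webhook URL should come from a secret (for example, a CircleCI project environment variable) rather than from source code.

```python
import json
import urllib.request


def build_slack_alert(webhook_url, service, error, runbook=None):
    """Build (but do not send) a POST request for a Slack incoming webhook."""
    text = f":rotating_light: {service} failed: {error}"
    if runbook:
        text += f"\nRunbook: {runbook}"
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_slack_alert(webhook_url, service, error, runbook=None, timeout=10):
    """Send the alert and return the HTTP status code from Slack."""
    request = build_slack_alert(webhook_url, service, error, runbook)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Separating request construction from sending keeps the message format testable without network access; a CI step would call `send_slack_alert` only when a job fails.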

## Conclusion

These projects show how DevOps principles and modern tooling can deliver measurable improvements in scalability, fault tolerance, and performance. By combining Docker, Kubernetes, AWS services, and automation tools, both projects met and exceeded their objectives and offer a template for future deployments, whether of web applications or of data platforms in the healthcare industry.