OpenAI o1: The One Chart That Explains Why This Is a Big Deal and 3 Predictions for the Near Future of AI

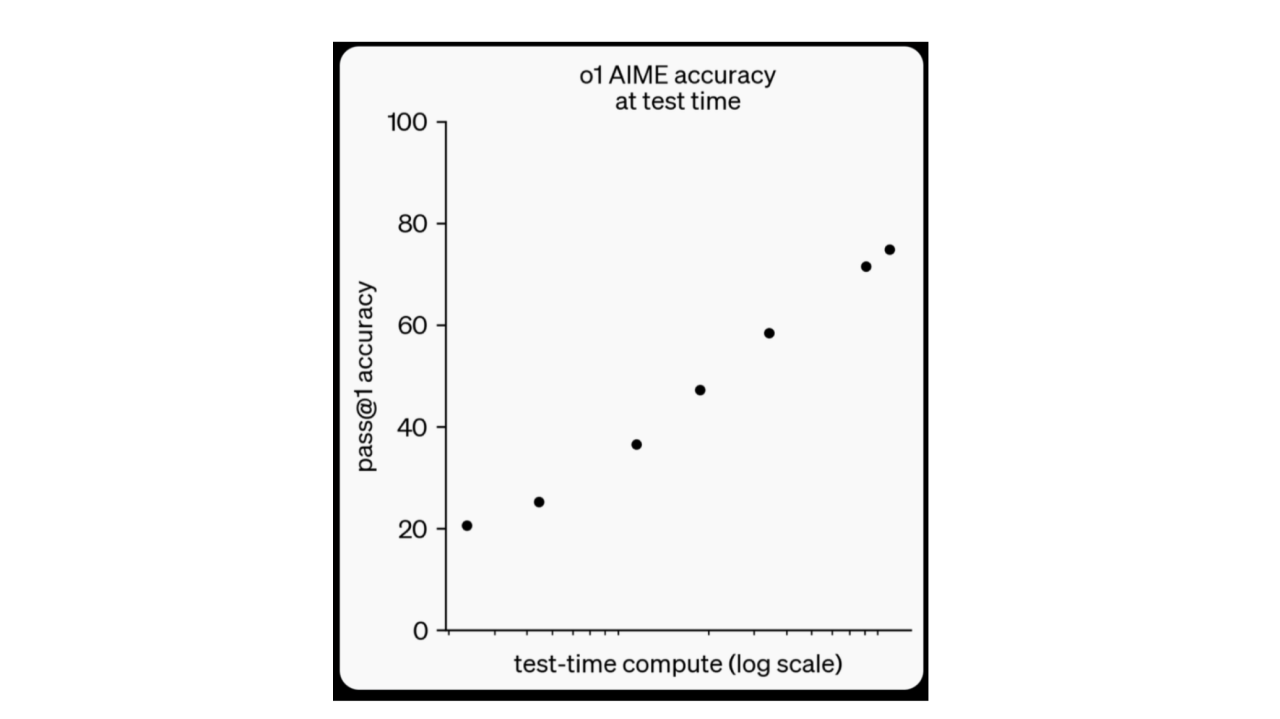

Noam Brown, a researcher on the OpenAI team, recently published this chart:

In my opinion, this is a very big deal.

Here’s what it means:

Don’t get me wrong—I think pretraining will continue to be crucial, and we’ll continue to see major investments from the largest foundation-model providers.

(And as a smaller detail, the log-scale x-axis of this chart does suggest diminishing returns, but the approach remains very powerful.)
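To make the diminishing-returns point concrete, here is a minimal Python sketch. It assumes accuracy rises linearly in the logarithm of test-time compute, which is roughly what a straight line on a log-scale x-axis implies. The intercept and slope are hypothetical numbers chosen for illustration, not values read off Noam Brown's chart.

```python
import math

# Hypothetical parameters for illustration only (not taken from the o1 chart).
INTERCEPT = 0.20  # assumed accuracy at 1 unit of test-time compute
SLOPE = 0.15      # assumed accuracy gain per 10x increase in compute


def accuracy(compute: float) -> float:
    """Assumed log-linear accuracy curve, capped at 100%."""
    return min(1.0, INTERCEPT + SLOPE * math.log10(compute))


def gain_per_unit(lo: float, hi: float) -> float:
    """Average accuracy gained per extra unit of compute between lo and hi."""
    return (accuracy(hi) - accuracy(lo)) / (hi - lo)


# Compare each 10x step in compute budget.
budgets = [1, 10, 100, 1000]
for lo, hi in zip(budgets, budgets[1:]):
    print(
        f"{lo:>4} -> {hi:>4} units: "
        f"+{accuracy(hi) - accuracy(lo):.2f} accuracy, "
        f"{gain_per_unit(lo, hi):.5f} per unit of compute"
    )
```

Under these assumptions, every 10x step adds the same accuracy gain, but the extra compute needed for that step grows from 9 to 90 to 900 units, so the gain per unit of compute keeps shrinking. That is the sense in which a log scale implies diminishing returns even while the curve keeps climbing.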

While reinforcement learning (RL) has existed for some time, OpenAI o1 in many ways represents the first major public launch of an RL-trained reasoning model.

When combined with the power of (soon publicly accessible) multi-trillion-parameter LLMs, we are onto something truly special.

We now have two powerful forces rowing in the same direction—not only the immense resources spent on pretraining but also the ability to improve model accuracy at inference time.

In simple terms, we not only have an extremely smart "brain" (arguably near superhuman intelligence in many domains), but the more time/cycles we give this brain to reason, the better it performs.

What Does This Mean for the Near Future of AI? Three Predictions:

1. Expect New Applications and Breakthroughs: Both Publicly and Behind Closed Doors

What the general public can access now is a scaled-down version of the o1 model.

Running many inference cycles is costly, so OpenAI has an incentive to limit the inference time it spends on each request: its economic incentive is to maximize its margin.

Therefore, you will likely see limited reasoning capacity for the low-cost version and a much higher-priced enterprise/developer version.

However, a superhuman brain that can run many inference cycles and yield better accuracy will result in massive breakthroughs on hard problems we haven't been able to solve yet.

Since this reasoning capacity can be scaled in parallel (i.e., by running more "brains" simultaneously), expect major companies and government/intelligence agencies to:

- Secure special agreements with OpenAI to run these models without constraints.
- Engage in parallel processing (i.e., running many brains at once).
- Invest heavily (in the form of inference time) to tackle tough problems.
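One simple way to see why running many "brains" in parallel can pay off is majority voting over independent samples (a technique sometimes called self-consistency). The sketch below is an illustration under strong assumptions, not a description of how OpenAI or any customer actually runs o1: each sample is assumed correct with the same probability p, independently of the others, and a problem counts as solved only when a strict majority of samples is correct. The per-sample accuracy of 0.6 and the sample counts are hypothetical.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Assumes each sample is correct with probability p, independently,
    and that n is odd so a vote can never tie.
    """
    if n % 2 == 0:
        raise ValueError("use an odd n to avoid ties")
    needed = n // 2 + 1  # smallest strict majority
    # Sum the binomial probabilities of getting at least `needed` correct samples.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(needed, n + 1))


# Hypothetical per-sample accuracy and numbers of parallel "brains".
for n in [1, 3, 9, 27]:
    print(n, round(majority_vote_accuracy(0.6, n), 3))
```

Under these assumptions, accuracy climbs toward 100% as n grows when p is above 0.5, but falls toward 0% when p is below 0.5. In practice, samples from the same model are correlated, so real gains would likely be smaller than this idealized curve.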

Imagine just a few major use cases:

Of course, nefarious actors (both state and private) with access to these resources and the budget to pay for extended inference compute could cause significant harm by applying this power with ill intentions.


2. Let the RL Wars Begin: As Agentic AI Comes Online

Just like OpenAI led the way with ChatGPT, prompting a flood of competitors, the RL/reasoning unlock will also inspire many to catch up.

This competition will ultimately benefit the end consumer.

Moving forward, the focus will be on improving RL by developing better intermediate prompts, exploring different techniques, and continuing to vie for leadership among the world's top models.

This comes as we approach the public release of agentic models and more advanced robotics. While we are still in the early stages, it will be fascinating to see how RL-based models impact the speed and usability of agentic AI and robotics.

We are heading toward a world where super-intelligent robots and agents become ubiquitous and eventually affordable for middle-class households. The question is how fast we will get there.

Highly capable RL models like o1—and the competitive tide they will create—will undeniably accelerate this journey.

3. The Innovator’s Dilemma Still Applies

OpenAI's success shows that not only is it driving model innovation, but it is also well-organized for continuous innovation.

After leading the way with large foundation models and ChatGPT (essentially opening the floodgates to LLM competitors), OpenAI now also leads the next generation of chain-of-thought-driven AI models.

To launch o1 this month, OpenAI made a strategic decision about a year ago (around late 2023, amid its very public management shake-up) to commit significant money, time, and resources to developing different models and approaches (reportedly the Q* effort), even while its primary approach (investing heavily in pretraining) was still yielding results.

Today, OpenAI is likely pursuing both strategies—expanding pretraining and innovating on reasoning/chain-of-thought RL models.

Why? Management wasn’t afraid to disrupt itself.

This is why Google, despite pioneering key breakthroughs, isn't leading in public model performance.

In fact, the massive breakthroughs that allowed OpenAI to become a leader were initially pioneered by a Google research team – the team that kicked things off with the "Attention Is All You Need" paper.

Yet Google hasn’t taken the lead because of the innovator’s dilemma. They had the research teams but lacked the bravery/prescience to disrupt their own business model. Now, they are playing catch-up.

So what can we learn?

1. Run multiple competitive projects, regardless of alignment with your core business model

Taking multiple shots on goal through competing research projects is critical. To stay competitive, you need to support multiple non-orthogonal research initiatives, including ideas that initially seem like crazy long shots and approaches that fundamentally disrupt your core business model.

From a cost perspective, the key is to test different ideas with smaller pods of researchers before ramping up team sizes and allocating outsized compute. Focus on minimizing the time it takes to get an early signal that an approach is promising.

2. Choose to disrupt your core business (even if it means lower margins) or face becoming irrelevant

Having disruptive technologies isn't enough. You need a strategy to release to the public quickly and at scale, which may require going against shareholder pressure and internal risk controls.

Google excelled at #1 but has thus far struggled with #2.

Just as Polaroid faded into irrelevance after failing to adapt to digital photography, the same paradigm applies to AI today.

Testing many different approaches, even seemingly crazy or low-probability ones, can lead to breakthroughs. When one does, don't hesitate to invest.

Lastly, as RL technology continues to advance rapidly, we'll see many more emergent "creative" solutions where the ML solution diverges from traditional human approaches, including approaches we've never seen before.

Safety, alignment, and a leadership approach truly driven by a "do no evil" ethos are critical when testing, launching, and scaling these systems.
