Moshi: A Game-Changing Open-Source Speech-Text Model on the Verge of Global Adoption

I've recently been diving into an amazing new model called Moshi, and I believe we are very close to seeing it transform how we interact with technology in everyday life. This open-source model, freely available under the Apache 2.0 license, is designed to make conversations with computers and devices more natural and fluid than ever before.

For years, voice assistants like Alexa, Siri, and Google Assistant have let us talk to our devices. However, these systems break conversations into separate steps. First, they listen to us and turn what we say into text. Then, they try to understand the meaning of our words, generate a text-based response, and finally turn that response back into speech. While this process works, it isn't smooth: each step adds delay, and the pipeline can miss the subtle, human elements that make conversations feel natural, such as emotion, interruptions, and people talking over each other.
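To make those two drawbacks concrete, here is a toy Python sketch of a cascaded assistant. The stage functions, the `Utterance` type, and the latency numbers are all hypothetical placeholders, not any real assistant's API or measured timings. The sketch only shows that the stages run strictly in sequence, so their delays add up, and that tone is lost as soon as speech is reduced to plain text.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class Utterance:
    """Toy stand-in for a stretch of speech: the words plus how they were said."""
    words: str
    tone: str  # e.g. "excited", "frustrated", "neutral"


@dataclass
class StageResult:
    output: Any
    latency_ms: float  # time this stage took (placeholder value supplied by the caller)


def speech_to_text(audio: Utterance, latency_ms: float) -> StageResult:
    # Step 1: transcription keeps only the words; the tone is dropped here.
    return StageResult(audio.words, latency_ms)


def generate_reply(text: str, latency_ms: float) -> StageResult:
    # Step 2: a text-only model sees nothing but the transcript.
    return StageResult(f"You said: {text}", latency_ms)


def text_to_speech(text: str, latency_ms: float) -> StageResult:
    # Step 3: with no tone information left, the reply is voiced in a default tone.
    return StageResult(Utterance(text, tone="neutral"), latency_ms)


def cascaded_assistant(audio: Utterance, asr_ms: float, reply_ms: float, tts_ms: float):
    """Run the three stages strictly in sequence and report the total delay."""
    asr = speech_to_text(audio, asr_ms)
    reply = generate_reply(asr.output, reply_ms)
    speech = text_to_speech(reply.output, tts_ms)
    # No stage can start before the previous one finishes, so the delays add up.
    total_ms = asr.latency_ms + reply.latency_ms + speech.latency_ms
    return speech.output, total_ms


# Arbitrary placeholder latencies, chosen only to show that they sum.
reply, total_ms = cascaded_assistant(Utterance("where is my package", "frustrated"), 100, 200, 300)
print(reply.tone, total_ms)  # → neutral 600
```

The caller was frustrated, but the reply comes back "neutral", and the user waits for all three stages combined before hearing anything.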

Moshi changes all of this by working with speech directly, rather than routing everything through text first. Think of it as skipping the middle steps and letting the conversation flow the way it does when two people talk. This makes interactions with technology faster and more human-like, and lets the model handle overlapping speech and the tone and feeling behind what people say. So when you interrupt it, or speak with excitement or frustration, Moshi can pick up on that and respond appropriately in real time.

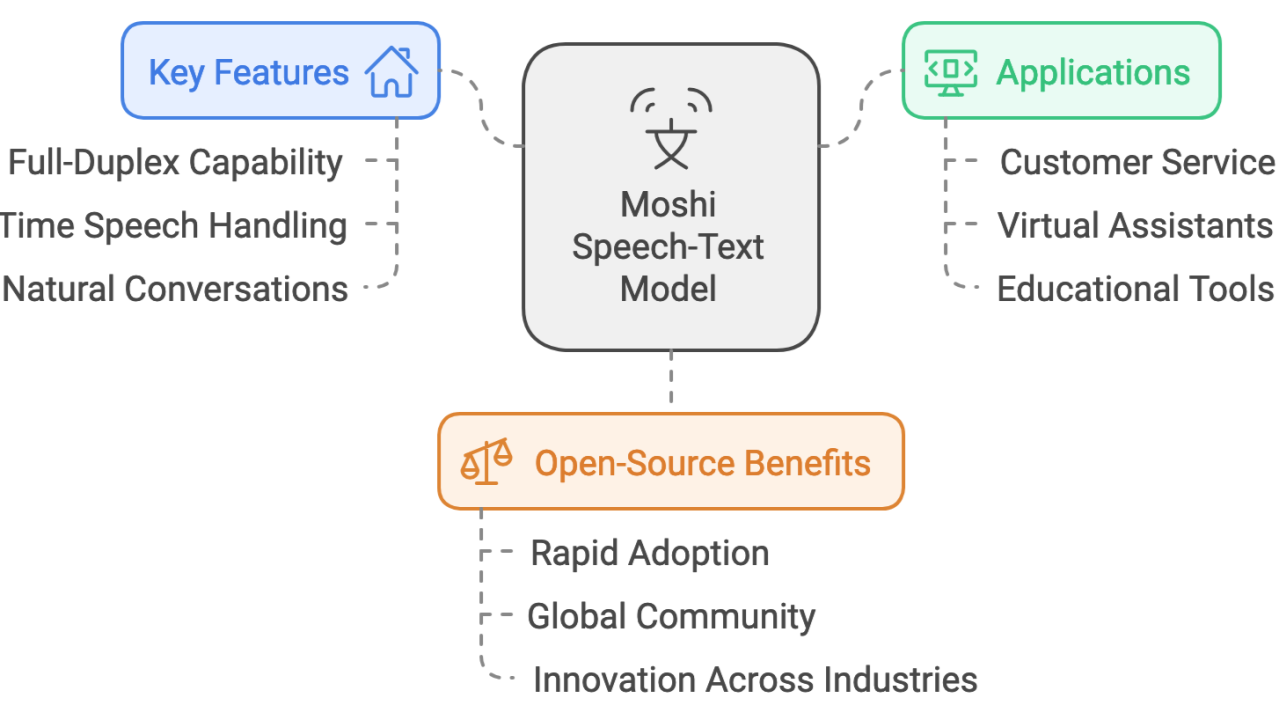

One of the most exciting aspects of Moshi is that it does all this while listening and speaking at the same time. This is called “full-duplex,” and it means Moshi keeps listening to you even while it is responding, just as you can listen to someone while you are talking to them. It’s this full-duplex capability that takes Moshi beyond the rigid back-and-forth of current voice assistants, which wait for you to finish speaking before they respond.
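A toy Python sketch can show the difference between a turn-based loop and a full-duplex one. Speech is simplified to one word per time step, and `full_duplex_loop` and `half_duplex_loop` are hypothetical illustrations of the general idea, not Moshi's actual architecture or API. In the full-duplex version, the assistant hears the user on every step, including while it is talking, so it can stop when interrupted. The turn-based version ignores the user until it has finished its reply.

```python
from typing import Iterable, Iterator, Optional

Frame = Optional[str]  # one word per time step; None means silence (toy simplification)


def full_duplex_loop(user_frames: Iterable[Frame], reply_words: list[str]) -> Iterator[tuple[Frame, Frame]]:
    """Every step: listen to one incoming frame AND emit one outgoing frame."""
    pending = list(reply_words)
    for heard in user_frames:
        if heard is not None and pending:
            # The user started talking over the assistant: stop speaking and yield the floor.
            pending.clear()
        spoken = pending.pop(0) if pending else None
        yield heard, spoken


def half_duplex_loop(user_frames: Iterable[Frame], reply_words: list[str]) -> Iterator[tuple[Frame, Frame]]:
    """Strictly turn-based: while the assistant is speaking, user input is ignored."""
    pending = list(reply_words)
    for heard in user_frames:
        if pending:
            yield None, pending.pop(0)  # the user's frame is simply dropped
        else:
            yield heard, None


frames = [None, None, "wait", "actually", None]
words = ["Your", "order", "ships", "tomorrow", "morning"]
print(list(full_duplex_loop(frames, words)))
# → [(None, 'Your'), (None, 'order'), ('wait', None), ('actually', None), (None, None)]
print(list(half_duplex_loop(frames, words)))
# → [(None, 'Your'), (None, 'order'), (None, 'ships'), (None, 'tomorrow'), (None, 'morning')]
```

In the turn-based run, the user's "wait" never registers; the assistant talks straight through it.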

Playing with Moshi has convinced me that we are very close to seeing it in everyday applications. Whether it ends up in customer service bots, virtual assistants, or educational tools, this model could allow for smoother, more intuitive interactions. Imagine calling a company and talking to a bot that speaks as naturally as a person, without long pauses or misunderstandings. Or think about how this could revolutionize personal AI companions, making them feel more human in conversation.

What makes Moshi truly powerful, in my mind, is that it's open-source: companies and developers are free to use it and adapt it to their needs. Yes, I know adopting it comes with a technical burden, but I think that's worthwhile. Because Moshi is released under the Apache 2.0 license, businesses around the world can adopt and modify it quickly without worrying about legal or financial barriers, and that availability is a game-changer. When technology like this is made widely accessible, it accelerates innovation across industries, from healthcare and education to entertainment and beyond.

This open availability, combined with Moshi’s groundbreaking ability to handle real-time speech, could lead to rapid adoption by companies everywhere. They won’t have to build these kinds of models from scratch. Moshi is ready to go, and because it’s open-source, the global community of developers can continuously improve it and adapt it for new uses.

So why is this so important? Well, we all interact with machines and devices every day, whether through customer service calls, virtual assistants, or even apps on our phones. Moshi promises to make these interactions smoother, faster, and more like talking to a real person. And because it’s open-source, we’re likely to see businesses quickly incorporating it into their products, making voice technology an even bigger part of our everyday lives.

The more I experiment with Moshi, the clearer it becomes that we are on the verge of something big. With the backing of the open-source community, I expect we’ll soon see this kind of technology widely adopted around the world. It’s an exciting time to be working with AI, and I’m eager to see how Moshi reshapes the way we interact with the devices around us.

