Mastering Rate Limiting and Throttling: Token Bucket and Leaky Bucket Algorithms

Gadhavajula Surya Satya Nikhil

Software Developer @ iQuadra Information Services LLC | Creator @ Topmate | Smart India Hackathon 2023 Finalist

What is Rate Limiting and Why Does It Matter?

Rate limiting is a mechanism to control the number of requests a system accepts within a given time. It protects servers from being overwhelmed by sudden traffic spikes and prevents abuse like brute-force attacks or API scraping.

Throttling, on the other hand, delays or queues excess requests instead of rejecting them outright, which gives users a smoother experience.

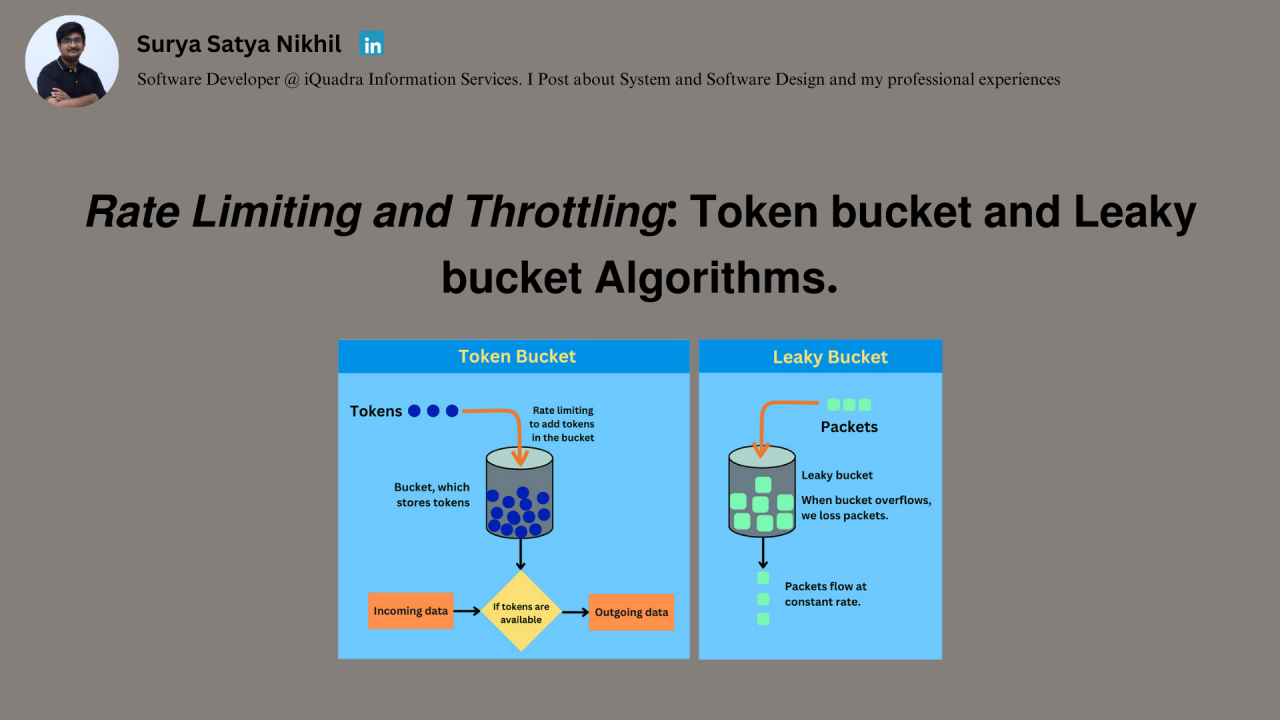

Token Bucket Algorithm

The Token Bucket algorithm is widely used for rate limiting because of its flexibility. Here’s how it works:

1.) The Bucket and Tokens: Imagine a bucket that holds tokens. Each token grants permission for one request. Tokens are added to the bucket at a fixed rate, up to a maximum capacity; tokens that arrive when the bucket is full are discarded.

2.) Request Handling: When a user sends a request, they need a token to proceed. If tokens are available in the bucket, one is consumed, and the request is processed. If the bucket is empty, the request is either rejected or delayed until a token is available.

3.) Burst Control: The bucket’s capacity allows for short bursts of traffic, as tokens can accumulate when not immediately used. This makes the algorithm ideal for systems that need to handle sudden traffic spikes while enforcing a steady overall rate.
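A minimal Python sketch of the steps above follows. The class name `TokenBucket`, its `capacity` and `rate` parameters, and the injectable `clock` are illustrative choices, not a standard API. The bucket refills lazily from the elapsed time on each call instead of using a background timer, and it is not thread-safe as written. `try_acquire` rejects a request when the bucket is empty (rate limiting), while `wait_time` reports how long a caller would have to delay (throttling).

```python
import time
from typing import Callable


class TokenBucket:
    """Holds at most `capacity` tokens and refills at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity      # start full, so an initial burst is allowed
        self.last = clock()

    def _refill(self) -> None:
        # Add the tokens earned since the last call, never exceeding capacity.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self, cost: float = 1.0) -> bool:
        """Rate limiting: consume tokens and return True, or return False to reject."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False

    def wait_time(self, cost: float = 1.0) -> float:
        """Throttling: seconds until `cost` tokens are available (0.0 if available now)."""
        self._refill()
        return max(0.0, (cost - self.tokens) / self.rate)


class FakeClock:
    """Manually advanced clock so the example is deterministic."""

    def __init__(self) -> None:
        self.now = 0.0

    def __call__(self) -> float:
        return self.now


clock = FakeClock()
bucket = TokenBucket(capacity=5, rate=1.0, clock=clock)
print([bucket.try_acquire() for _ in range(7)])  # [True, True, True, True, True, False, False]
clock.now = 2.0  # two seconds later, two tokens have been added back
print([bucket.try_acquire() for _ in range(3)])  # [True, True, False]
print(bucket.wait_time())                         # 1.0
```

The first five requests of the burst succeed because the bucket starts full; after that, requests succeed only as fast as tokens are added. In a multi-threaded server, `try_acquire` would need to be guarded by a lock.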

Real-World Example

Think of streaming platforms like Netflix.

Leaky Bucket Algorithm

The Leaky Bucket algorithm ensures a consistent and predictable flow of requests. It’s simple yet effective. Here’s how it works:

1.) The Bucket and Leaking: Imagine a bucket with a small hole at the bottom. Requests pour into the bucket like water and leak out through the hole at a fixed rate.

2.) Overflow Control: If the bucket overflows because requests are arriving faster than they can leak out, the excess is discarded. This ensures that the system processes requests at a constant rate, no matter how many arrive at once.
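One way to sketch this in Python assumes the bucket is a bounded FIFO queue and that a worker loop polls `leak()` to pull the next request to process. `LeakyBucket`, `offer`, `leak`, and the fake clock are hypothetical names for illustration. Requests that arrive while the queue is full are discarded, and departures are spaced at least `1 / leak_rate` seconds apart. An idle bucket does not save up credit, which is what keeps the output from ever bursting.

```python
import time
from collections import deque
from typing import Any, Callable, Deque, Optional


class LeakyBucket:
    """Leaky bucket as a bounded FIFO queue drained at a fixed rate."""

    def __init__(self, capacity: int, leak_rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity              # maximum number of queued requests
        self.interval = 1.0 / leak_rate       # seconds between two departures
        self.clock = clock
        self.queue: Deque[Any] = deque()
        self.next_release = clock()           # earliest time the next request may leave

    def offer(self, request: Any) -> bool:
        """Pour a request into the bucket; return False if it overflows and is discarded."""
        if len(self.queue) >= self.capacity:
            return False
        if not self.queue:
            # An idle bucket saves up no credit, so the output can never burst.
            self.next_release = max(self.next_release, self.clock())
        self.queue.append(request)
        return True

    def leak(self) -> Optional[Any]:
        """Called by a worker loop: release one request if its slot has arrived."""
        now = self.clock()
        if self.queue and self.next_release <= now:
            # Schedule the next departure a full interval after this one,
            # even if the worker polled late, so releases stay evenly spaced.
            self.next_release = max(self.next_release, now) + self.interval
            return self.queue.popleft()
        return None


class FakeClock:
    """Manually advanced clock so the example is deterministic."""

    def __init__(self) -> None:
        self.now = 0.0

    def __call__(self) -> float:
        return self.now


clock = FakeClock()
bucket = LeakyBucket(capacity=3, leak_rate=1.0, clock=clock)
print([bucket.offer(f"req{i}") for i in range(5)])  # [True, True, True, False, False]
print(bucket.leak(), bucket.leak())                 # req0 None
clock.now = 1.0
print(bucket.leak())                                # req1
```

Because each release pushes `next_release` a full interval forward, a worker that polls late still gets at most one request per call, so the processing rate never exceeds `leak_rate`.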

Real-World Example

Think of customer support ticket systems like Zendesk.

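Key Differences: Token Bucket vs. Leaky Bucket

1.) Bursts: The Token Bucket lets a burst through immediately, up to the number of saved-up tokens. The Leaky Bucket releases requests at a fixed rate no matter how they arrive.

2.) Excess Requests: The Token Bucket rejects or delays a request when no token is available. The Leaky Bucket queues requests and discards only what overflows the bucket.

3.) Best Fit: The Token Bucket suits traffic that is bursty but must respect an average rate. The Leaky Bucket suits backends that need a steady, predictable processing rate.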
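To make the difference in burst handling concrete, the following simplified simulation feeds the same burst to both algorithms over discrete ticks. Both functions are hypothetical, tick-based models (one tick equals one refill or leak interval), not production limiters.

```python
from typing import List


def token_bucket_admitted(arrivals: List[int], capacity: int, rate: int) -> List[int]:
    """Requests admitted per tick; the bucket starts full and gains `rate` tokens per tick."""
    tokens = capacity
    admitted = []
    for arriving in arrivals:
        n = min(arriving, tokens)                # one token per request; the rest are rejected
        tokens -= n
        admitted.append(n)
        tokens = min(capacity, tokens + rate)    # refill before the next tick
    return admitted


def leaky_bucket_processed(arrivals: List[int], capacity: int, rate: int) -> List[int]:
    """Requests processed per tick; at most `rate` leak out, and overflow is discarded."""
    queued = 0
    processed = []
    for arriving in arrivals:
        queued = min(capacity, queued + arriving)  # anything beyond capacity overflows
        n = min(queued, rate)                      # fixed outflow per tick
        queued -= n
        processed.append(n)
    return processed


burst = [8, 0, 0, 0, 0, 0]  # eight requests at once, then nothing
print(token_bucket_admitted(burst, capacity=5, rate=1))   # [5, 0, 0, 0, 0, 0]
print(leaky_bucket_processed(burst, capacity=5, rate=1))  # [1, 1, 1, 1, 1, 0]
```

Both buckets accept five of the eight requests and drop three, but the Token Bucket passes all five in the first tick, while the Leaky Bucket spreads them evenly over five ticks.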

Best Practices for Using These Algorithms

1.) Combine Algorithms: Use the Token Bucket for handling bursty traffic at the application layer and the Leaky Bucket for ensuring consistent processing in the backend.

2.) Dynamic Limits: Adjust the bucket size and token rate based on user tiers (e.g., free users vs. premium users).

3.) Clear Feedback: Provide users with clear error messages when rate limits are hit, like “You’ve exceeded your limit. Please wait a few seconds before trying again.”
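The dynamic-limits and clear-feedback practices can be combined in a short sketch: each user gets a token bucket sized by tier, and a rejected request receives a message saying how long to wait. The free and premium tiers come from the example above, but the `TIER_LIMITS` numbers, the `TieredRateLimiter` class, and its `check` method are hypothetical. Buckets are keyed by user and tier, so an upgrade simply starts a new, larger bucket.

```python
import math
import time
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# Hypothetical per-tier limits: (bucket capacity, tokens refilled per second).
TIER_LIMITS: Dict[str, Tuple[float, float]] = {
    "free": (10, 1.0),
    "premium": (100, 20.0),
}


@dataclass
class Bucket:
    capacity: float
    rate: float
    tokens: float
    last: float


class TieredRateLimiter:
    """One token bucket per (user, tier), sized from TIER_LIMITS."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self.clock = clock
        self.buckets: Dict[Tuple[str, str], Bucket] = {}

    def check(self, user_id: str, tier: str) -> Tuple[bool, str]:
        """Return (allowed, message); a rejection says how many seconds to wait."""
        now = self.clock()
        key = (user_id, tier)
        if key not in self.buckets:
            capacity, rate = TIER_LIMITS[tier]
            self.buckets[key] = Bucket(capacity, rate, tokens=capacity, last=now)
        b = self.buckets[key]
        # Lazily refill for the time elapsed since this user's previous request.
        b.tokens = min(b.capacity, b.tokens + (now - b.last) * b.rate)
        b.last = now
        if b.tokens >= 1:
            b.tokens -= 1
            return True, "OK"
        wait = math.ceil((1 - b.tokens) / b.rate)  # whole seconds until the next token
        return False, (f"You've exceeded your limit. "
                       f"Please wait {wait} second(s) before trying again.")


limiter = TieredRateLimiter()
allowed, message = limiter.check("alice", "free")
print(allowed, message)  # True OK
```

In an HTTP API, the same wait time maps naturally onto a `429 Too Many Requests` response with a `Retry-After` header.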

Conclusion

Rate limiting and throttling are fundamental to maintaining system stability and a fair user experience. Whether you’re managing API traffic, controlling network bandwidth, or regulating service usage, understanding the Token Bucket and Leaky Bucket algorithms is essential.

These algorithms, while simple in concept, solve complex real-world challenges efficiently. By implementing them thoughtfully, you can build systems that stay stable and responsive under heavy load.