Mastering Data Cleaning with Pandas: Essential Functions and Examples

Introduction to Pandas Data Cleaning

Pandas has established itself as an essential Python library for data engineers and analysts who need to analyze and manipulate data. It offers robust data structures, chiefly the DataFrame and the Series, and a wide range of functions that simplify data cleaning. Raw data poses numerous challenges, including missing values, inconsistencies, and inaccuracies that can lead to erroneous insights if they are not addressed. This is where Pandas shines, providing a suite of tools for exactly these cleaning tasks.

Data cleaning is a critical step in the data analysis workflow. Before any meaningful analysis can be performed, raw data must be transformed into a clean and usable format. This includes handling missing data, identifying duplicates, and converting data types. If the data is not meticulously cleaned, subsequent analyses may yield misleading results, thus negating the purpose of the analysis itself. By employing Pandas, data engineers can systematically address these challenges, ensuring the integrity and reliability of their datasets.

Mastering these cleaning functions is therefore well worth the effort. For aspiring analysts and engineers, proficiency with them translates directly into faster and more accurate work. The library's flexible API makes filtering and preprocessing data straightforward, which in turn makes it easier to uncover valuable insights. Knowing how to clean data effectively with Pandas lays a solid foundation for any analysis that follows.

Understanding Data Quality Issues

Data quality is paramount for any data engineer, as it directly affects the insights that can be derived from datasets. There are several indicators of poor data quality that can compromise analysis outcomes. Among these issues, missing values are one of the most prevalent. A missing value is a data point that is absent in a dataset, which can lead to misleading insights if not addressed properly. For instance, consider a dataset containing customer information where the age column has several missing entries. In this scenario, failing to handle the missing data could skew demographic analysis, leading to erroneous business decisions based on incomplete information.

Another common data quality issue is the presence of duplicate entries. Duplicate records can arise from various sources, such as data entry errors or system migrations. For example, if a customer's transactions are loaded multiple times because of a synchronization error, a data engineer may falsely conclude that the customer made more purchases than they actually did. Managing duplicates is essential for accurate reporting and analysis.

Incorrect data types can also hinder effective analysis. If a numerical column contains textual data, Pandas treats the whole column as non-numeric. For example, a 'price' column should hold numerical values; if it instead contains strings such as "N/A" or values with currency symbols like "$12", mathematical operations on the column can fail or produce meaningless results. Identifying and correcting these discrepancies ensures that the dataset is compatible with downstream analytical processes.
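As a rough sketch of how such a column might be repaired, assume prices arrive as strings like "$1,299.00" or "N/A" (the sample values below are made up). The idea is to strip the currency symbol and thousands separator, then let pd.to_numeric() convert anything still unparseable to NaN via errors="coerce" instead of raising.

```python
import pandas as pd

# Hypothetical raw prices mixing numbers, currency symbols and placeholders
df = pd.DataFrame({"price": ["$1,299.00", "45.50", "N/A", "$12"]})

# Remove "$" and "," so the remaining text is a plain decimal number
cleaned = df["price"].str.replace(r"[$,]", "", regex=True)

# errors="coerce" turns values that still cannot be parsed ("N/A") into NaN
df["price"] = pd.to_numeric(cleaned, errors="coerce")

print(df["price"].dtype)  # float64
print(df["price"].sum())  # 1356.5 (NaN is skipped by default)
```

Coercing to NaN turns bad entries into ordinary missing values, which can then be dropped or imputed like any other gap.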

Recognizing these indicators of poor data quality (missing values, duplicates, and incorrect data types) lets data engineers take the necessary corrective action. This proactive approach is essential for maintaining the integrity of data analyses and supporting informed decision-making in any organization.

Key Data Cleaning Functions in Pandas

Pandas is an essential tool for data engineers seeking to streamline data cleaning processes. The library offers various functions tailored to address common data issues. Understanding these functions can significantly enhance a data engineer’s efficiency and effectiveness in handling datasets.

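One of the primary functions is dropna(), which removes rows (or columns) that contain missing values from a DataFrame. It is particularly useful when incomplete records cannot be repaired. The syntax is DataFrame.dropna(axis=0, how='any', thresh=None, subset=None, inplace=False). The axis parameter chooses whether rows (0) or columns (1) are dropped; how='any' drops a row if any value is missing, while how='all' drops it only if every value is missing; thresh keeps rows with at least that many non-missing values; subset limits the check to specific columns; and inplace controls whether the DataFrame is modified directly or a new one is returned. Recent pandas versions do not allow how and thresh to be combined in the same call.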
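To make these parameters concrete, here is a small sketch on a hypothetical customer table; each call returns a new DataFrame and leaves df unchanged.

```python
import numpy as np
import pandas as pd

# Hypothetical customer table with different amounts of missing data per row
df = pd.DataFrame({
    "name": ["Ana", "Ben", None, "Dee"],
    "age": [34, np.nan, np.nan, 41],
    "email": ["ana@example.com", "ben@example.com", None, None],
})

# how="any" (default): drop a row if any value is missing -> keeps row 0
complete_rows = df.dropna()

# how="all": drop a row only if every value is missing -> drops row 2
not_empty = df.dropna(how="all")

# thresh=2: keep rows with at least two non-missing values -> keeps rows 0, 1, 3
mostly_filled = df.dropna(thresh=2)

# subset: only the listed columns decide what is dropped -> keeps rows 0, 3
has_age = df.dropna(subset=["age"])
```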

Another important function is fillna(), which replaces missing values with a specified value. It is the tool of choice when you want to keep the dataset's size while closing gaps. The syntax is DataFrame.fillna(value=None, method=None, axis=None, inplace=False, limit=None). The value can be a scalar or a dictionary mapping column names to their own replacement values. The method parameter ('ffill' or 'bfill') propagates neighboring values, but it has been deprecated since pandas 2.1 in favor of the dedicated DataFrame.ffill() and DataFrame.bfill() methods.
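A brief sketch of fillna() with a dictionary, using a hypothetical two-column table: each column gets its own replacement value, and the original DataFrame is left as-is because the result is returned rather than applied in place.

```python
import numpy as np
import pandas as pd

# Hypothetical table with one gap in each column
df = pd.DataFrame({
    "city": ["Lisbon", None, "Porto"],
    "orders": [3, np.nan, 5],
})

# Each key names a column and its replacement value;
# columns not listed in the dictionary are left untouched
filled = df.fillna({"city": "Unknown", "orders": 0})
```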

To ensure data integrity, drop_duplicates() is often used to eliminate duplicate records from a DataFrame. Its syntax is DataFrame.drop_duplicates(subset=None, keep='first', inplace=False). The subset parameter restricts the comparison to specific columns, which is useful when rows sharing a key (such as an ID) should count as duplicates even if other columns differ, and keep decides which occurrence survives: 'first', 'last', or False to drop every copy.

Finally, converting data types is crucial, and the astype() function handles this. It casts a column, or an entire DataFrame, to a different dtype so that the data can be used correctly in analysis. The syntax is DataFrame.astype(dtype, errors='raise'), where dtype is either a single type applied to every column or a dictionary mapping column names to types, and errors controls whether a failed conversion raises an exception (the default, 'raise').
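The following sketch, assuming a hypothetical table where IDs were loaded as text, shows astype() with a dictionary so that several columns are converted in one call.

```python
import pandas as pd

# Hypothetical table: IDs arrived as strings, segments as plain text
df = pd.DataFrame({
    "customer_id": ["101", "102", "103"],
    "segment": ["retail", "wholesale", "retail"],
    "score": [1, 2, 3],
})

# One call converts each listed column to its own target dtype
df = df.astype({"customer_id": "int64", "segment": "category", "score": "float64"})

print(df.dtypes)
```

The category dtype stores each distinct label once, which suits low-cardinality text columns like segment.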

Handling Missing Data

In data engineering, handling missing data is a crucial task that can significantly affect the quality of analysis. Pandas offers several techniques for dealing with missing values. The most direct is to remove rows or columns that contain nulls with the dropna() method, whose axis parameter selects rows (axis=0) or columns (axis=1). For instance, df.dropna(axis=0) removes every row containing at least one missing value, so that subsequent calculations operate only on complete records. Be aware that this can discard a large share of the data when missing values are widespread.
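The difference between the two axes matters more than it might seem. In this hypothetical table, a single entirely empty column causes axis=0 to discard every row, whereas dropping along axis=1 removes only the offending columns.

```python
import numpy as np
import pandas as pd

# Hypothetical table with one partly missing and one completely empty column
df = pd.DataFrame({
    "id": [1, 2, 3],
    "age": [25, np.nan, 40],
    "fax": [np.nan, np.nan, np.nan],
})

# axis=0 (rows): every row has a missing "fax", so all rows are dropped
rows_kept = df.dropna(axis=0)

# axis=1 (columns): drop any column with a missing value ("age" and "fax")
cols_kept = df.dropna(axis=1)

# axis=1 with how="all": drop only completely empty columns ("fax")
non_empty_cols = df.dropna(axis=1, how="all")
```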

Another effective strategy is imputation, where missing values are replaced with substitute values so the dataset keeps all of its rows. This is done with fillna(). For example, missing numeric values can be replaced with the column mean using df['column_name'] = df['column_name'].fillna(df['column_name'].mean()). Assigning the result back is more reliable than calling fillna(..., inplace=True) on a selected column, which is a form of chained assignment that recent pandas versions warn about and that may not modify the original DataFrame. This approach lets data engineers retain every record instead of excluding potentially valuable information from their analysis.
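A minimal sketch of mean imputation written in the assignment style, using a hypothetical age column; the mean is computed from the observed values only, since Pandas skips NaN by default.

```python
import numpy as np
import pandas as pd

# Hypothetical column; the NaNs force a float dtype
df = pd.DataFrame({"age": [20, np.nan, 40, np.nan]})

# Mean of the observed values only: (20 + 40) / 2 = 30.0
mean_age = df["age"].mean()

# Assign the filled Series back rather than using inplace=True on df["age"]
df["age"] = df["age"].fillna(mean_age)
```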

Forward and backward filling are additional methods that are especially useful for time series data. Forward filling propagates the last observed value forward until a new value is encountered and is implemented with df.ffill(); backward filling uses the next valid observation to fill gaps, with df.bfill(). Older code often writes these as fillna(method='ffill') and fillna(method='bfill'), a form deprecated since pandas 2.1. Both methods help maintain continuity in time-dependent data.
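The following sketch applies both directions to hypothetical daily readings. It also uses the limit parameter, which caps how many consecutive gaps are filled so that long outages are not papered over with stale values.

```python
import numpy as np
import pandas as pd

# Hypothetical daily sensor readings with gaps
ts = pd.Series(
    [10.0, np.nan, np.nan, 13.0, np.nan],
    index=pd.date_range("2023-01-01", periods=5, freq="D"),
)

forward = ts.ffill()         # 10, 10, 10, 13, 13
backward = ts.bfill()        # 10, 13, 13, 13, NaN (no later value for the last gap)
limited = ts.ffill(limit=1)  # fills at most one consecutive NaN: 10, 10, NaN, 13, 13
```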

In applying these techniques, it is vital for data engineers to choose the method that best suits their specific dataset and the overall analysis goals. Each technique has its implications and can significantly affect the outcomes of the data processing tasks undertaken using Pandas.

Identifying and Removing Duplicates

Duplicates are a particular risk for data engineers working with large datasets in Python, as they can lead to misleading analyses and degraded model performance. Pandas provides simple yet effective tools for identifying and eliminating these redundancies within DataFrames: the duplicated() and drop_duplicates() functions.

The duplicated() method returns a boolean Series (a mask) indicating whether each row duplicates an earlier row. Its subset parameter restricts the check to certain columns, and its keep parameter determines which occurrences are marked as True: 'first' (the default) marks every occurrence except the first, 'last' marks every occurrence except the last, and False marks all of them. For instance, df.duplicated(subset=['column_name'], keep='first') flags all but the first occurrence of each repeated value in the specified column.
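A short sketch of how subset and keep change the mask, on a hypothetical table of emails and amounts:

```python
import pandas as pd

# Hypothetical table: one email appears three times with varying amounts
df = pd.DataFrame({
    "email": ["a@x.com", "b@x.com", "a@x.com", "a@x.com"],
    "amount": [10, 20, 10, 15],
})

# keep="first": flag every repeat of an email except its first occurrence
first = df.duplicated(subset=["email"], keep="first")  # F, F, T, T

# keep=False: flag all rows sharing an email, handy for inspecting the copies
all_copies = df[df.duplicated(subset=["email"], keep=False)]

# Without subset, all columns must match: only row 2 repeats row 0 exactly
exact = df.duplicated()  # F, F, T, F
```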

Once duplicates are identified, the next step is to remove them with drop_duplicates(), which removes duplicate rows and also lets you specify which subset of columns to evaluate. For example, df.drop_duplicates(subset=['column_name'], inplace=True) modifies the DataFrame by dropping rows that repeat a value in the specified column. Like duplicated(), it accepts keep='first', keep='last', or keep=False to retain the first occurrence, retain the last occurrence, or drop every copy, depending on the user's intent.
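A sketch for a hypothetical transaction log, under the illustrative assumption that when a transaction ID is loaded twice, the later row is the authoritative one:

```python
import pandas as pd

# Hypothetical log: transaction 1001 was loaded twice, the second time settled
tx = pd.DataFrame({
    "txn_id": [1001, 1002, 1001],
    "status": ["pending", "settled", "settled"],
})

# Deduplicate on the key column only, keeping the most recent (last) row
latest = tx.drop_duplicates(subset=["txn_id"], keep="last")

# keep=False drops every row whose key repeats, leaving only unique keys
unique_only = tx.drop_duplicates(subset=["txn_id"], keep=False)
```

Whether "first" or "last" is correct depends on how the data was loaded; nothing in Pandas can decide that for you.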

In practical scenarios, consider a situation where a data engineer is analyzing customer transactions. If there are multiple entries for the same transaction due to data entry errors, utilizing these functions ensures that only unique transactions are analyzed, thereby enhancing the integrity of reporting. Both the duplicated() and drop_duplicates() functions are vital tools in the Pandas arsenal for maintaining high-quality datasets, allowing for more accurate insights and effective decision-making.

Converting Data Types

Data types are integral to data analysis, particularly for data engineers who rely on Pandas in Python. Each data type represents a particular kind of data, such as a string, integer, float, or datetime. Defining and converting data types correctly is crucial, since incorrect types can lead to errors or inaccuracies in analyses, potentially skewing results and the decisions based on them.

The astype() function is Pandas' general tool for converting data types. It changes the type of a Series, a single DataFrame column, or an entire DataFrame to a specified type, for example turning numeric strings into integers or text labels into the category dtype. Dates deserve special attention: if a column of dates is stored as strings, operations that require date functionality, such as computing the difference between dates or filtering by a date range, will not work as expected. Although astype() can cast well-formed date strings to datetime64, the dedicated pd.to_datetime() function is usually the better choice because it offers explicit control over formats and invalid values.

Consider a scenario where a dataset includes date information as strings formatted as "YYYY-MM-DD." To convert these strings into datetime objects, one could utilize the pd.to_datetime() function from the Pandas library, which is specifically designed for such conversions. Here’s an example:

```python
import pandas as pd

# Sample data
data = {'date_strings': ['2023-01-01', '2023-02-01', '2023-03-01']}
df = pd.DataFrame(data)

# Convert string date to datetime
df['dates'] = pd.to_datetime(df['date_strings'])
print(df)
```

This code snippet illustrates how to transform a column of date strings into datetime objects, enabling enhanced functionality in subsequent data analysis processes. In the context of data engineering, understanding data type conversion can significantly improve data manipulation and increase the effectiveness of analyses conducted with Pandas.
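Once the column is converted, date differences and range filters become one-liners. This sketch reuses the same sample dates; the explicit format argument is optional here but makes the expected layout unambiguous.

```python
import pandas as pd

df = pd.DataFrame({"date_strings": ["2023-01-01", "2023-02-01", "2023-03-01"]})
df["dates"] = pd.to_datetime(df["date_strings"], format="%Y-%m-%d")

# Differences between consecutive dates are Timedeltas; .dt.days extracts the count
df["gap_days"] = df["dates"].diff().dt.days  # NaN, 31, 28

# Range filtering compares real dates, not strings (bounds are inclusive)
in_range = df[df["dates"].between(pd.Timestamp("2023-01-15"), pd.Timestamp("2023-03-01"))]
```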

Standardizing Text Data

Standardizing text data is a crucial step in the data cleaning process, especially for data engineers working with large datasets. Inconsistencies in text can lead to complications during data analysis, such as difficulties in matching records or inaccurate summaries. Common issues in text data include variations in case (uppercase vs. lowercase), extra whitespace, special characters, and typographical errors. Therefore, adopting consistent formatting and spelling is essential to ensure data integrity.

A primary technique for resolving inconsistencies is lowercasing, which converts all text to a uniform case and reduces the chance of mismatched entries. In Pandas, this is done with the str.lower() method, available through the .str accessor on a Series. For instance, if you have a column of country names, df['country'] = df['country'].str.lower() standardizes every entry to lowercase. This is especially important for categorical data and improves the accuracy of merges and comparisons.
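A small sketch with hypothetical country values shows why this matters: before lowercasing, different capitalizations of the same country count as separate categories.

```python
import pandas as pd

# Hypothetical column with three spellings of the same country
df = pd.DataFrame({"country": ["France", "FRANCE", "france", "Spain"]})

print(df["country"].nunique())  # 4

# .str.lower() returns a new Series, so assign it back to the column
df["country"] = df["country"].str.lower()
print(df["country"].nunique())  # 2
```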

Another important aspect of standardization is eliminating extraneous whitespace and special characters that may have crept into entries. The str.strip() method removes leading and trailing whitespace; for example, df['name'].str.strip() ensures that no name has unnecessary spaces before or after it. Whitespace inside a value and unwanted special characters are not affected by strip(), so they are typically handled with str.replace() and a regular expression.
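The sketch below chains these steps on hypothetical names. Which characters count as "special" depends on the data; the pattern used here, which keeps letters, digits, underscores, spaces, apostrophes, and hyphens, is only an illustrative assumption.

```python
import pandas as pd

# Hypothetical names with stray spaces, a tab and punctuation
df = pd.DataFrame({"name": ["  Ana Silva ", "Ben\tJones", "Dee  O'Neil!!"]})

cleaned = (
    df["name"]
    .str.strip()                                # drop leading/trailing whitespace
    .str.replace(r"\s+", " ", regex=True)       # collapse inner runs of spaces/tabs
    .str.replace(r"[^\w\s'-]", "", regex=True)  # remove other characters (assumed rule)
)
```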

Lastly, addressing common typos or spelling discrepancies also contributes to the standardization of text data. While this may require more manual effort or the development of a custom function, it is vital for ensuring that all entries of similar entities align correctly. By employing these standardization techniques using Pandas, data engineers can significantly enhance the overall quality of their datasets, which ultimately improves the outcomes of subsequent analyses.
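One simple approach, sketched here with a hypothetical hand-maintained corrections dictionary, is to normalize case first and then map known misspellings to a canonical form with Series.replace(), which matches whole values rather than substrings.

```python
import pandas as pd

df = pd.DataFrame({"city": ["new york", "new yrok", "NYC", "boston", "bostn"]})

# Hypothetical mapping from known variants to a canonical spelling
corrections = {
    "new yrok": "new york",
    "nyc": "new york",
    "bostn": "boston",
}

# Lowercase first so the mapping only needs lowercase keys;
# values not in the mapping pass through unchanged
df["city"] = df["city"].str.lower().replace(corrections)
```

A mapping like this has to be maintained by hand, but it is transparent and easy to review, which fuzzy-matching approaches are not.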

Practical Examples of Data Cleaning with Pandas

Data cleaning is a crucial step for any data engineer working with datasets in Python. Pandas provides various built-in functions that can greatly facilitate the data cleaning process. In this section, we will explore practical examples demonstrating how to utilize these functions effectively in real-world scenarios.

To begin, let’s consider a sample dataset that includes information about customers in a retail business. The dataset consists of several columns, including customer ID, name, email, purchase history, and city. However, upon inspection, we may find several issues such as missing data, incorrect formats, and inconsistent entries. For instance, some email addresses may be missing the domain, while others could have typos, making them unreliable for analysis.

First, using Pandas, we can identify missing values in the dataset with the isnull() function. This will help us understand the extent of the missing data. Subsequently, we can choose to drop rows with missing values using dropna() or fill them with a default value or mean using fillna(), depending on the context and requirements of our analysis.

Next, we can address the formatting issues. For example, to standardize city names, we can apply the str.title() method which will convert city names into title case. This ensures consistent formatting across the dataset. Furthermore, a commonly encountered problem is duplicate records. To manage this, the drop_duplicates() function is invaluable, allowing data engineers to maintain a clean dataset efficiently.
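The steps above can be combined into a short script. The customer table below is a hypothetical stand-in for the retail dataset, and the email check is a deliberately rough heuristic (it only looks for a dot-separated domain after the @ sign), not a full email validator.

```python
import numpy as np
import pandas as pd

# Hypothetical customer table with the issues described above
customers = pd.DataFrame({
    "customer_id": [1, 2, 2, 3, 4],
    "name": ["Ana", "Ben", "Ben", "Dee", None],
    "email": ["ana@example.com", "ben@example", "ben@example", None, "eve@example.com"],
    "purchases": [3, np.nan, np.nan, 7, 1],
    "city": ["lisbon", " PORTO", " PORTO", "new york", "lisbon"],
})

# 1. Measure missing data per column
print(customers.isnull().sum())

# 2. Remove exact duplicate rows (customer 2 appears twice)
customers = customers.drop_duplicates()

# 3. Fill gaps: unknown purchase counts become 0, missing names get a placeholder
customers = customers.fillna({"purchases": 0, "name": "Unknown"})

# 4. Standardize city names: trim whitespace, then title-case
customers["city"] = customers["city"].str.strip().str.title()

# 5. Flag emails lacking a domain suffix such as ".com"; missing emails count as not ok
customers["email_ok"] = customers["email"].str.contains(r"@[^@\s]+\.[a-z]+$", na=False)
```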

In conclusion, mastering these data cleaning techniques in Pandas not only enhances the reliability of your datasets but also ensures that subsequent analyses yield accurate and actionable insights. Familiarity with these functions will equip data engineers to tackle various data integrity challenges effectively.

Best Practices for Data Cleaning in Pandas

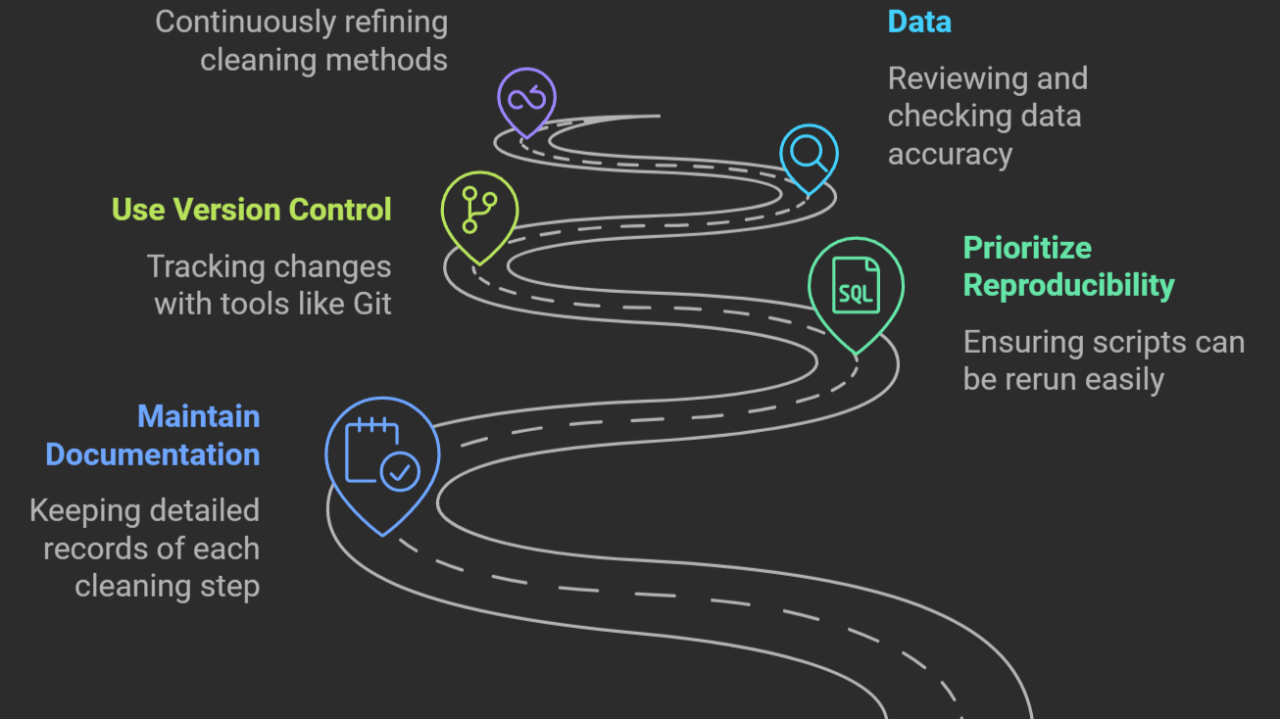

Effective data cleaning is a crucial part of a data engineer's role, as it ensures that datasets are accurate, consistent, and ready for analysis. When working with Pandas, several best practices help streamline and improve the process. The first is to document every cleaning step clearly. By noting each transformation applied to the dataset, a data engineer ensures that future modifications are well informed and applied accurately.

Another essential practice is to prioritize reproducibility. It is vital to design cleaning scripts that can be easily executed again, should any changes to the dataset occur or if the data engineer needs to reassess the cleaning steps. Version control systems, like Git, can be invaluable in this regard, allowing engineers to track changes over time, facilitating easier collaboration, and ensuring that previous data cleaning approaches can be revisited when necessary.
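One way to make cleaning reproducible is to express each step as a small function and chain them with DataFrame.pipe(), so the same script can be rerun on a fresh extract and its history tracked in Git. The function names and sample data below are hypothetical.

```python
import pandas as pd

# Each step is a small, named function (names are illustrative) so it can be
# reviewed, tested and rerun on new data
def drop_exact_duplicates(df: pd.DataFrame) -> pd.DataFrame:
    return df.drop_duplicates()

def standardize_city(df: pd.DataFrame) -> pd.DataFrame:
    return df.assign(city=df["city"].str.strip().str.title())

def fill_missing_purchases(df: pd.DataFrame) -> pd.DataFrame:
    return df.fillna({"purchases": 0})

def clean_customers(raw: pd.DataFrame) -> pd.DataFrame:
    # The raw input is never modified; every step returns a new DataFrame
    return (
        raw.pipe(drop_exact_duplicates)
           .pipe(standardize_city)
           .pipe(fill_missing_purchases)
    )

raw = pd.DataFrame({
    "city": [" porto", " porto", "LISBON"],
    "purchases": [2, 2, None],
})
clean = clean_customers(raw)
```

Because the raw frame is left untouched, the cleaning can be rerun or compared against earlier versions at any time.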

Validating the cleaned data is another critical practice. Engineers should review summaries of the cleaned data, run statistical checks, and visualize distributions to verify that the cleaning process produced the desired outcome. Libraries used alongside Pandas, such as NumPy or Matplotlib, can support these validation efforts. Finally, embrace an iterative process: continuously refining cleaning methods as new datasets arrive improves both efficiency and effectiveness over time.
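Validation can also be automated. The hypothetical validate_customers() helper below returns a list of problems rather than raising, so it can be run after every cleaning pass; the specific checks are assumptions and should be adapted to the dataset's own rules.

```python
import pandas as pd

def validate_customers(df: pd.DataFrame) -> list[str]:
    """Hypothetical checks; returns problem descriptions (empty list = all passed)."""
    problems = []
    if df["customer_id"].duplicated().any():
        problems.append("customer_id is not unique")
    if df["purchases"].isna().any():
        problems.append("purchases still has missing values")
    if (df["purchases"] < 0).any():
        problems.append("purchases contains negative counts")
    if not pd.api.types.is_numeric_dtype(df["purchases"]):
        problems.append("purchases is not numeric")
    return problems

clean = pd.DataFrame({"customer_id": [1, 2, 3], "purchases": [3.0, 0.0, 7.0]})
print(validate_customers(clean))      # []
print(clean["purchases"].describe())  # summary statistics for a quick review
```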

By adhering to these best practices, data engineers can maximize their use of Pandas, ensuring thorough and reliable data cleaning processes while also enhancing their overall workflow.
