TKG Cluster creation and CNF Onboarding

Kubernetes Cluster creation and CNF Onboarding

CNF Creation and Onboarding Requirements

Cloud-native functions offer improved flexibility and scale over traditional VM-based network functions.

The ability to leverage cloud-native constructs allows the service provider to simplify network service offerings while offering improved integration into existing CI/CD pipelines.

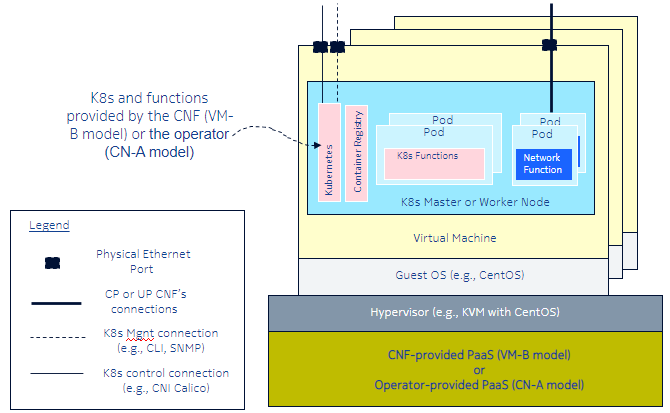

The process of creating, onboarding, and instantiating a Containerized Network Function (CNF) can appear to be a daunting task. How do you ensure that the relevant Kubernetes constructs are available? How do you ensure that the guest operating system is provisioned with all necessary configurations?

To answer these questions, VMware Telco Cloud Automation offers a unique methodology for ensuring the smoothest possible onboarding experience.

At a high level, Figure 1 represents stages for successful deployment of a CNF of a Network Service, using VMware Telco Cloud Automation.

For more information about designing and building the Telco Cloud Platform for 5G or the Telco Cloud Platform for RAN, refer to the appropriate reference architecture documents.

K8s:

• Kubernetes Cluster Design

• Kubernetes Cluster Deployment

Artifacts:

• Upload Helm Charts and OCI images to Harbor

• Onboard (upload or design) NF / NS CSARs into VMware Telco Cloud Automation

Instantiate:

• Creation of custom requirements for NF / NS instantiation

• Instantiation of NF

Before starting to work with Kubernetes and the cloud-native application constructs, a working deployment of VMware Telco Cloud Automation is required. The process to achieve a working deployment of VMware Telco Cloud Automation is outside the scope of this document.

Telco Cloud Automation deployment guide:

VMware Telco Cloud Automation – Cluster Creation and Deployment

Before instantiating a CNF, both a Tanzu Kubernetes Grid (TKG) management and Tanzu Kubernetes Grid (TKG) workload cluster must exist within VMware Telco Cloud Automation. The steps required to create a workload cluster are listed in this section.

The workload cluster is where the actual Containerized Network Functions (and/or PaaS components) are deployed. Workload clusters can also be broken into multiple node pools for more granular management.

In the CaaS Infrastructure, the first task is to create a Kubernetes Cluster Template. This task allows us to provide the initial configuration for the Kubernetes infrastructure that will be used to host the Containerized (Cloud-Native) Network Function.

Basic Cluster Template

In step 1, provide the Kubernetes cluster name and select the Cluster Type as Workload Cluster.

Note: To successfully instantiate a workload cluster, a management cluster must already be created.

TKG Cluster Configuration

Step 2 involves the configuration of the cluster. At this stage, the following three main items can be configured from a base cluster perspective:

Kubernetes Version – The supported TKG versions vary depending on the release of VMware Telco Cloud Automation. A matrix of the supported TKG / Kubernetes deployments for each VMware Telco Cloud Automation release is attached at the end of this document.

Container Networking Interface – Here, we can specify the networking plugins that are required to be deployed into the cluster.

• For the Primary CNI, we can select Calico or Antrea. The exact versioning of these and the underlying implementation is not configurable. Only a single Primary CNI can be selected.

• For the secondary CNI, MULTUS is supported. MULTUS allows the attachment of multiple network interfaces to the worker node/pod level.

Container Storage Interface – Here, the desired storage configuration can be applied. There are two options for the CSI:

• vSphere-CSI – This option uses the vSphere CSI plugin for Kubernetes, effectively allowing TKG to leverage any supported Datastore within the vSphere ecosystem.

• nfs_client – This option uses an NFS client to mount an NFS share directly to each worker node. The NFS share needs to be provided by either vSAN 8 file services or an external NFS share.
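The choices in this step can be captured as a small data model. The following is a minimal sketch, assuming a hypothetical `ClusterTemplate` class; the class, its field names, and the example version string are illustrative only and do not mirror any VMware Telco Cloud Automation schema or API. Only the option names (Calico, Antrea, MULTUS, vSphere-CSI, nfs_client) come from the text above.

```python
from dataclasses import dataclass, field

# Option names come from the article; everything else here is hypothetical.
PRIMARY_CNIS = {"calico", "antrea"}
SECONDARY_CNIS = {"multus"}
CSI_OPTIONS = {"vsphere-csi", "nfs_client"}


@dataclass
class ClusterTemplate:
    name: str
    kubernetes_version: str
    primary_cni: str  # a single string, so only one primary CNI can be set
    secondary_cnis: list = field(default_factory=list)
    csi: list = field(default_factory=list)

    def validate(self):
        """Return a list of problems; an empty list means the template is valid."""
        problems = []
        if self.primary_cni not in PRIMARY_CNIS:
            problems.append(f"unsupported primary CNI: {self.primary_cni}")
        for cni in self.secondary_cnis:
            if cni not in SECONDARY_CNIS:
                problems.append(f"unsupported secondary CNI: {cni}")
        for plugin in self.csi:
            if plugin not in CSI_OPTIONS:
                problems.append(f"unsupported CSI: {plugin}")
        return problems


if __name__ == "__main__":
    template = ClusterTemplate(
        name="workload-template",            # hypothetical name
        kubernetes_version="example-version",  # placeholder, not a real release
        primary_cni="antrea",
        secondary_cnis=["multus"],
        csi=["vsphere-csi", "nfs_client"],
    )
    print(template.validate() or "template is valid")
```

Modelling the primary CNI as a single value rather than a list reflects the rule that only one Primary CNI can be selected.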

Control Node Configuration

Step 3 involves the configuration of the control nodes. The control nodes are responsible for making decisions regarding the cluster state (scheduling, ensuring alignment of intent vs actual). Kubernetes elements including the scheduler, etcd, the controller-manager, and the kube-apiserver are deployed on the control nodes. In VMware Telco Cloud Automation, the TKG control nodes are not used for running workloads; only worker nodes run workloads.

Here, we specify the size of the control nodes in terms of CPU, memory, and storage. Typically, a minimum of three nodes is deployed for a highly available set of control nodes. The count must always be odd (3, 5, or 7): etcd needs a majority of members to agree, so an even count adds a node without adding fault tolerance.
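The odd-count rule follows from majority-quorum arithmetic. The sketch below assumes a simple majority quorum, as used by etcd; the function names are illustrative.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a strict majority."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


if __name__ == "__main__":
    for count in range(1, 8):
        print(
            f"{count} control nodes: quorum {quorum(count)}, "
            f"tolerates {tolerated_failures(count)} failure(s)"
        )
```

Three nodes tolerate one failure, and so do four; the tolerance only rises to two at five nodes. This is why adding a single node to an odd-sized control plane does not improve availability.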

Typically, the only network attached to the control nodes is the management network. This is the interface against which the primary CNI will run and is used for all control-worker node communication.

Worker Node Configuration

Step 4 involves creating and configuring the worker nodes or node pools against which the network functions and services will be instantiated.

Onboarding Cloud-Native Network Functions

Network function onboarding incorporates multiple elements. Not all these elements are bound to VMware Telco Cloud Automation.

CSAR – The Cloud Service Archive holds the NF descriptor and other components specific to the application, and is uploaded to VMware Telco Cloud Automation.

HELM Charts – A HELM chart is a standardized way of packaging Kubernetes manifests together in a structured format. Charts are uploaded to Harbor.

Values.yaml – The values.yaml file is used during instantiation and overrides the default configuration from the HELM chart. This allows a common HELM configuration to be pre-packaged, while deployment specifics are supplied at deployment time through this file.

Container Images – These are Docker/OCI-compliant container images that are tagged and uploaded to a Harbor deployment.
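To illustrate how a supplied values.yaml overrides chart defaults, here is a minimal sketch of a recursive merge over plain dictionaries. It only approximates Helm's behaviour (nested maps are merged, scalars and lists are replaced) and ignores details such as deleting keys with null. The keys and values in the example are hypothetical.

```python
import copy


def merge_values(defaults: dict, overrides: dict) -> dict:
    """Return a new dict where overrides win over defaults, merging nested maps."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Both sides are maps: merge them key by key.
            merged[key] = merge_values(merged[key], value)
        else:
            # Scalars and lists are replaced wholesale.
            merged[key] = copy.deepcopy(value)
    return merged


if __name__ == "__main__":
    chart_defaults = {  # hypothetical defaults packaged in the chart
        "image": {"registry": "harbor.domain.com", "tag": "1.0.0"},
        "replicaCount": 1,
    }
    site_values = {  # hypothetical values.yaml supplied at instantiation
        "image": {"registry": "harbor.lab.example"},
        "replicaCount": 3,
    }
    print(merge_values(chart_defaults, site_values))
```

The chart keeps its packaged defaults (here, the image tag) while the deployment-specific file changes only what differs per site.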

HELM Charts

HELM charts are a common way to package and manage Kubernetes applications. HELM charts allow a simple methodology to define, configure, and instantiate cloud-native applications.

CNF Onboarding Overview

Onboarding a network function means loading the Network Function CSAR into the VMware Telco Cloud Automation Catalog.

Two catalogs exist in VMware Telco Cloud Automation - one for network functions and one for network services. If a CSAR has already been created, the user can simply upload the supplied artifact directly into the relevant catalog.


Design Canvas

The CNF design canvas is where the Network Function properties are configured. Function workflows can be uploaded, and HELM charts can be configured here.

Initially, the user is presented with the Network Function Catalog Properties page, as shown in Figure 14. Here, the function designer can enter some base values for the Network Function itself:

Descriptor Version: The descriptor version associated with this NF.

Provider: The NEP/ISV responsible for the software.

Product Name: The Network Function name as provided by the NEP / ISV.

Version: The release # of the overall network function.

Software Version: The NF Software version.

As well as functional properties, the designer can select which operations are available from the standard set of operations. Lastly, the ability to add workflows to the CSAR is supported. These workflows can be uploaded at one of the pre-defined workflow points.

Once the data is entered appropriately, click Topology to return to the canvas. If these properties need to be edited again, the General Properties selector at the top of the canvas allows the user to edit these properties.

Adding Charts

Once the function properties have been configured, the next step is to add HELM charts to the canvas. This is achieved by dragging the Helm icon onto the canvas.

Once the HELM chart is dropped on the canvas, the user can provide the relevant configuration as shown in the screenshot below.

Name: Specify the internal name for this NF component.

Description: An internal description of this chart.

Chart Name: This is the name of the chart. This MUST match the name of the Helm chart in Harbor.

Chart Version: This is the chart version. Again, this MUST match the version of the Helm chart in Harbor.

Note: The combination of Chart Name + Version is what is used to pull/install the chart from Harbor. The project path does not need to be configured at this point.

ID: The Helm ID (in the CSAR).
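Because the chart name and version must match what is stored in Harbor, a pre-onboarding check can catch mismatches early. The sketch below is illustrative only: the dictionary layout, field names, and chart names are hypothetical, and the registry contents are passed in as plain data rather than fetched from Harbor.

```python
def unresolved_charts(csar_charts, registry_charts):
    """Return (name, version) pairs the CSAR references but the registry lacks.

    csar_charts: list of dicts with 'chart_name' and 'chart_version' keys.
    registry_charts: dict mapping chart name to the set of available versions.
    """
    unresolved = []
    for chart in csar_charts:
        available = registry_charts.get(chart["chart_name"], set())
        if chart["chart_version"] not in available:
            unresolved.append((chart["chart_name"], chart["chart_version"]))
    return unresolved


if __name__ == "__main__":
    csar = [  # hypothetical entries from the design canvas
        {"chart_name": "app-service", "chart_version": "1.2.0"},
        {"chart_name": "web-ui", "chart_version": "2.0.1"},
    ]
    registry = {  # hypothetical snapshot of what was uploaded to Harbor
        "app-service": {"1.1.0", "1.2.0"},
        "web-ui": {"2.0.0"},
    }
    print(unresolved_charts(csar, registry))
```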

Multiple helm charts can be dropped onto the canvas and configured in this way. If there are multiple charts, the Depends On section allows a dependency matrix to be built (starting the app service before the web-UI, for example).
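A dependency matrix of this kind resolves to an install order through a topological sort. The following sketch uses Python's standard `graphlib` module (Python 3.9 or later); the chart names, including the extra `database` chart, are hypothetical, and this is not how VMware Telco Cloud Automation is known to implement ordering internally.

```python
from graphlib import CycleError, TopologicalSorter


def install_order(depends_on):
    """Return chart names ordered so every chart follows its dependencies.

    depends_on maps each chart to the set of charts it depends on.
    Raises graphlib.CycleError if the dependencies form a loop.
    """
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    depends_on = {
        "web-ui": {"app-service"},    # the web UI waits for the app service
        "app-service": {"database"},  # hypothetical extra dependency
        "database": set(),
    }
    print(install_order(depends_on))

    try:
        install_order({"a": {"b"}, "b": {"a"}})
    except CycleError as error:
        print("circular dependency:", error.args[1])
```

Detecting cycles up front is useful because a circular Depends On configuration can never be satisfied.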

In addition to configuring the Helm chart, the properties can be configured. This is where additional elements can be requested as part of the instantiation process.

The example shows a request for a ‘values.yaml’ as type file. During instantiation, the user will be prompted to upload the corresponding file, and this is a required step.

Additional information can be requested. These can be used as variables within the network function workflow operations.

As an example, the helm property overrides can be used not only to request a values.yaml file, but also to override specific items through individual property entries. Suppose the specific entry in the values.yaml file is structured as follows:

image:
  registry: harbor.domain.com

Then a single entry in the helm overrides named image.registry, with the value <replacement harbor domain>, can override that one value without uploading a values.yaml file.
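The dotted key maps onto the nested structure of the values file. The sketch below shows one way to apply such an override to a dictionary, similar in spirit to Helm's `--set` flag but far simpler: it handles no escaping or list indices, and it assumes every intermediate key is a map. The replacement registry name is hypothetical.

```python
def set_by_path(values: dict, dotted_key: str, new_value):
    """Set a nested value in place using a dotted key such as 'image.registry'."""
    *parents, leaf = dotted_key.split(".")
    node = values
    for part in parents:
        # Walk down the tree, creating intermediate maps when they are missing.
        node = node.setdefault(part, {})
    node[leaf] = new_value
    return values


if __name__ == "__main__":
    values = {"image": {"registry": "harbor.domain.com"}}
    # 'harbor.lab.example' is a hypothetical replacement registry.
    set_by_path(values, "image.registry", "harbor.lab.example")
    print(values)
```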

The example in Figure 16 shows multiple property values being overridden from the default values.yaml supplied within the helm chart. The image shows six properties of type string and two of type Boolean (true/false).

Then click Onboard Package.

Infrastructure Requirements

When the TKG clusters are created, aside from the CSI/CNI and machine spec, the configuration can be considered a generic cluster, ready for consumption.

However, not all CNFs are created equal, and some require additional configuration. This can be achieved through the infrastructure requirements configuration within VMware Telco Cloud Automation.

The infrastructure requirements are an extension to the standard TOSCA framework that creates Custom Resource Definitions for the NodeConfig and VMConfig Kubernetes operators that are deployed by VMware Telco Cloud Automation.

Network functions from different vendors have their own unique infrastructure requirements. Defining these requirements in the functional spec (VNFD) keeps the customizations separate from the cluster design, so the Kubernetes environment can be shaped to suit each application.

Through the CSAR, the infrastructure requirements (infra_requirements) section allows the reconfiguration of the following components in an automated fashion in a process known as Late-Binding.

Late-Binding is the process of configuring the CaaS layer (TKG worker nodes) with the requirements of the application. This addresses the issue of balancing consistent cluster operations (through templating) with the application-specific requirements demanded by 5G Core/RAN deployments.

The following are components that can be configured through the late-binding process.

Kernel level configurations:

Kernel Type / Release

Kernel Arguments

Kernel modules

Custom packages

Custom artifacts

Tuned profile

NUMA alignment parameters

Latency sensitivity requirements

Network adapters – As part of the secondary CNI, SR-IOV / DPDK connected interfaces are created using these components.

PCI Passthrough – Components that require passthrough (PT), such as an FPGA for FEC offload or NIC passthrough for PTP timing, are specified through the PCI-PT infra requirements.
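Conceptually, late-binding compares what a generic worker node provides with what the application declares, and applies only the difference. The sketch below models that comparison for a few of the components listed above. All class names, field names, and example values are hypothetical; this does not reproduce the infra_requirements schema or the behaviour of the NodeConfig and VMConfig operators.

```python
from dataclasses import dataclass, field


@dataclass
class NodeRequirements:
    """Hypothetical, simplified view of node-level requirements."""
    kernel_type: str = "generic"
    kernel_args: dict = field(default_factory=dict)
    kernel_modules: set = field(default_factory=set)
    custom_packages: set = field(default_factory=set)
    tuned_profile: str = ""


def pending_changes(current, required):
    """List the customizations needed to move a node from current to required."""
    changes = []
    if required.kernel_type != current.kernel_type:
        changes.append(f"switch kernel: {current.kernel_type} -> {required.kernel_type}")
    for key, value in sorted(required.kernel_args.items()):
        if current.kernel_args.get(key) != value:
            changes.append(f"set kernel arg {key}={value}")
    for module in sorted(required.kernel_modules - current.kernel_modules):
        changes.append(f"load kernel module {module}")
    for package in sorted(required.custom_packages - current.custom_packages):
        changes.append(f"install package {package}")
    if required.tuned_profile and required.tuned_profile != current.tuned_profile:
        changes.append(f"apply tuned profile {required.tuned_profile}")
    return changes


if __name__ == "__main__":
    generic_node = NodeRequirements()
    ran_workload = NodeRequirements(  # illustrative values only
        kernel_type="realtime",
        kernel_args={"isolcpus": "2-15"},
        kernel_modules={"vfio-pci"},
        custom_packages={"linuxptp"},
        tuned_profile="realtime",
    )
    for change in pending_changes(generic_node, ran_workload):
        print(change)
```

A node that already satisfies the requirements produces an empty change list, which is what allows the cluster template to stay generic while each network function brings its own customizations.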

