Introduction to Weight Quantization

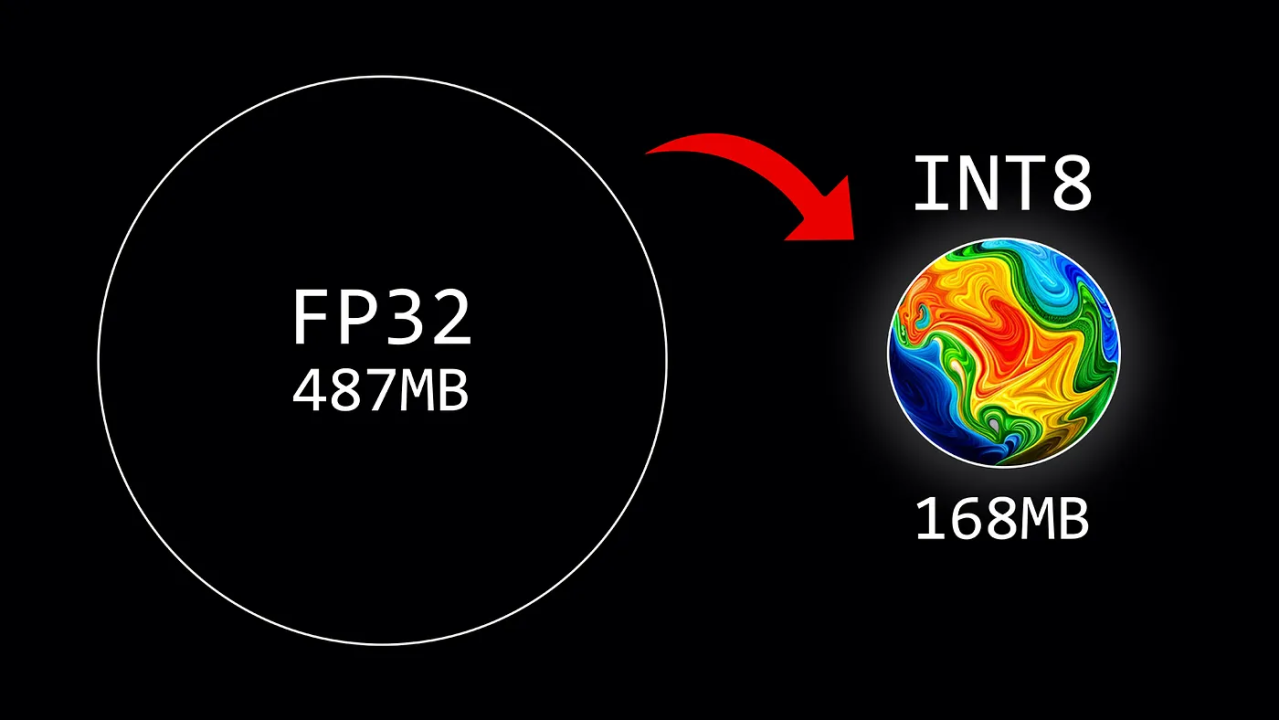

One well-known drawback of large language models (LLMs) is their high computational cost. A model's size is typically calculated by multiplying its number of parameters by the precision of those values (the data type). To save memory, however, weights can be quantized, that is, stored using lower-precision data types.
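As a rough illustration of that size calculation, the sketch below multiplies a parameter count by the bytes each data type needs. The 7-billion-parameter figure and the helper name `model_size_gb` are hypothetical, chosen only for the example, and the estimate covers weights alone, not activations or optimizer state.

```python
# Bytes needed to store one parameter in a few common data types.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def model_size_gb(num_params: int, dtype: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


num_params = 7_000_000_000  # hypothetical 7B-parameter model
for dtype in BYTES_PER_PARAM:
    print(f"{dtype:>8}: {model_size_gb(num_params, dtype):.1f} GB")
```

Under these assumptions, moving from float32 to int8 cuts weight storage by a factor of four.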

We distinguish two main families of weight quantization techniques in the literature:

Background on Floating Point Representation

The choice of data type dictates the quantity of computational resources required, affecting the speed and efficiency of the model. In deep learning applications, balancing precision and computational performance becomes a vital exercise as higher precision often implies greater computational demands.

Among various data types, floating point numbers are predominantly employed in deep learning due to their ability to represent a wide range of values with high precision. Typically, a floating point number uses n bits to store a numerical value. These n bits are partitioned into three distinct components: a sign bit, an exponent, and a significand (also called the mantissa).

This design allows floating point numbers to cover a wide range of values with varying levels of precision. The formula used for this representation is:

(-1)^sign × base^exponent × significand


To understand this better, let’s delve into some of the most commonly used data types in deep learning: float32 (FP32), float16 (FP16), and bfloat16 (BF16):
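For reference, FP32 uses 1 sign bit, 8 exponent bits, and 23 significand bits; FP16 uses 1, 5, and 10; BF16 uses 1, 8, and 7. The sketch below splits an FP32 value into those three fields and rebuilds it, using only the standard library. The helper names are hypothetical, and the reconstruction assumes a normal number (not zero, subnormal, infinity, or NaN).

```python
import struct


def float32_fields(x: float) -> tuple[int, int, int]:
    """Split x (stored as float32) into sign, biased exponent, and fraction bits."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    sign = bits >> 31                # 1 bit
    exponent = (bits >> 23) & 0xFF   # 8 bits, stored with a bias of 127
    fraction = bits & 0x7FFFFF       # 23 bits
    return sign, exponent, fraction


def float32_value(sign: int, exponent: int, fraction: int) -> float:
    """Rebuild a normal float32 value from its three fields."""
    significand = 1 + fraction / 2**23  # implicit leading 1 for normal numbers
    return (-1) ** sign * 2.0 ** (exponent - 127) * significand


sign, exponent, fraction = float32_fields(-6.5)
print(sign, exponent, fraction)
print(float32_value(sign, exponent, fraction))
```

FP16 and BF16 follow the same pattern with different field widths and exponent biases, so BF16 keeps the FP32 range while giving up significand precision.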

In this section, we will implement two quantization techniques: a symmetric one with absolute maximum (absmax) quantization and an asymmetric one with zero-point quantization. In both cases, the goal is to map an FP32 tensor X (original weights) to an INT8 tensor X_quant (quantized weights).

With absmax quantization, the original number is divided by the absolute maximum value of the tensor and multiplied by a scaling factor (127) to map inputs into the range [-127, 127]. To recover an approximation of the original FP32 values, the INT8 number is divided by the quantization factor (127 divided by the absolute maximum), accepting some loss of precision due to rounding.

For instance, let's say we have an absolute maximum value of 3.2. A weight of 0.1 would be quantized to round(0.1 × 127/3.2) = 4. If we want to dequantize it, we would get 4 × 3.2/127 = 0.1008, which implies an error of 0.0008.
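The absmax procedure can be sketched in a few lines of plain Python. This is a minimal illustration on a list of floats rather than a tensor implementation; the function names and sample weights are hypothetical, and it assumes the input contains at least one nonzero value.

```python
def absmax_quantize(weights):
    """Map floats to integers in [-127, 127]; return them with the scale used."""
    # Assumes at least one nonzero weight, otherwise this divides by zero.
    scale = 127 / max(abs(w) for w in weights)
    # Python's round() rounds exact halves to the nearest even integer.
    quantized = [round(w * scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the quantized integers."""
    return [q / scale for q in quantized]


weights = [3.2, 0.1, -1.5]  # absolute maximum is 3.2
quantized, scale = absmax_quantize(weights)
restored = dequantize(quantized, scale)
errors = [abs(w - r) for w, r in zip(weights, restored)]

print(quantized)
print(restored)
print(errors)
```

Because the scale depends on the single largest magnitude, one outlier weight shrinks the resolution available to every other value in the tensor.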