Implicit type casting is an easy way to shoot yourself in the foot

Automatic type conversions are convenient, but they can silently produce results you did not intend. This article looks at that risk in PySpark, particularly when working with dates and times.

What is Implicit Type Casting?

Implicit type casting (also known as implicit conversion) occurs when a programming language automatically converts one data type to another without explicit instructions from the programmer. For example, in some situations, PySpark might automatically convert a string to a date, or a number to a string, based on the operations being performed or the context.

The Dangers of Implicit Type Casting

The warning in the statement highlights a few key issues:


Explicit Parsing and Conversion

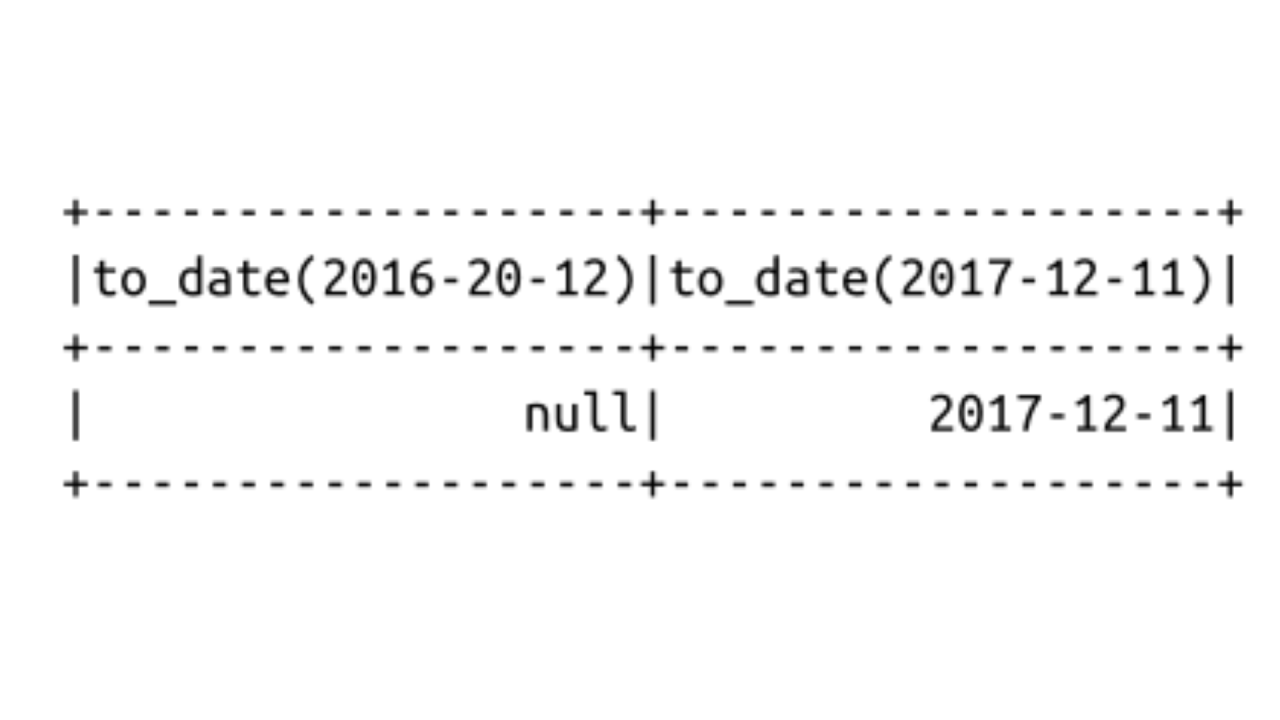

To avoid these issues, the recommendation is to explicitly parse and convert data types. This means that instead of relying on PySpark to automatically infer and convert types, you should use specific functions and specify the desired format. For example, using functions like to_date or to_timestamp with a specified date format ensures that the conversion happens as expected.

Example

Instead of relying on PySpark to automatically convert a string to a date, you should explicitly specify the format:

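from pyspark.sql import SparkSession
from pyspark.sql.functions import col, to_date

# Reuse the active session, or create one when running as a standalone script
spark = SparkSession.builder.getOrCreate()

dateFormat = "yyyy-MM-dd"

df = spark.createDataFrame([("2023-01-01",), ("01/01/2023",)], ["date_str"])

# Explicitly specify the format for the date conversion
df_with_dates = df.select(to_date(col("date_str"), dateFormat).alias("date"))

df_with_dates.show()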

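By explicitly specifying the format, you control how each string is interpreted. Note that the second value, "01/01/2023", does not match yyyy-MM-dd, so it is not quietly reinterpreted: depending on your Spark version and ANSI settings, it comes back as null or raises an error. Either way the mismatch is visible, which reduces the risk of incorrect conversions and makes your data pipeline more robust.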