Implementing Celery in a Django Project

MD ABDUL ALIM

Software Engineer | Python, Django, React, mysql, postgresql, celery, rabbitmq, aws, digitalocean

Celery is a distributed task queue that lets you run long-running tasks in the background without blocking the main application.

How to Implement Celery in Your Django Project:

Step 1: Configure Celery in Django's settings.py

# Celery settings

CELERY_BROKER_URL = env('CELERY_BROKER_URL')  # e.g. 'redis://localhost:6379/5', where 5 is the Redis database number

CELERY_RESULT_BACKEND = env('CELERY_RESULT_BACKEND')  # e.g. 'redis://localhost:6379/5'

CELERY_BROKER_CONNECTION_RETRY_ON_STARTUP = env('CELERY_BROKER_CONNECTION_RETRY_ON_STARTUP')  # e.g. True

CELERY_TIMEZONE = "Asia/Dhaka"

CELERY_TASK_TRACK_STARTED = env('CELERY_TASK_TRACK_STARTED')  # e.g. True

CELERY_TASK_TIME_LIMIT = 30 * 30  # hard time limit in seconds (900 s = 15 minutes)

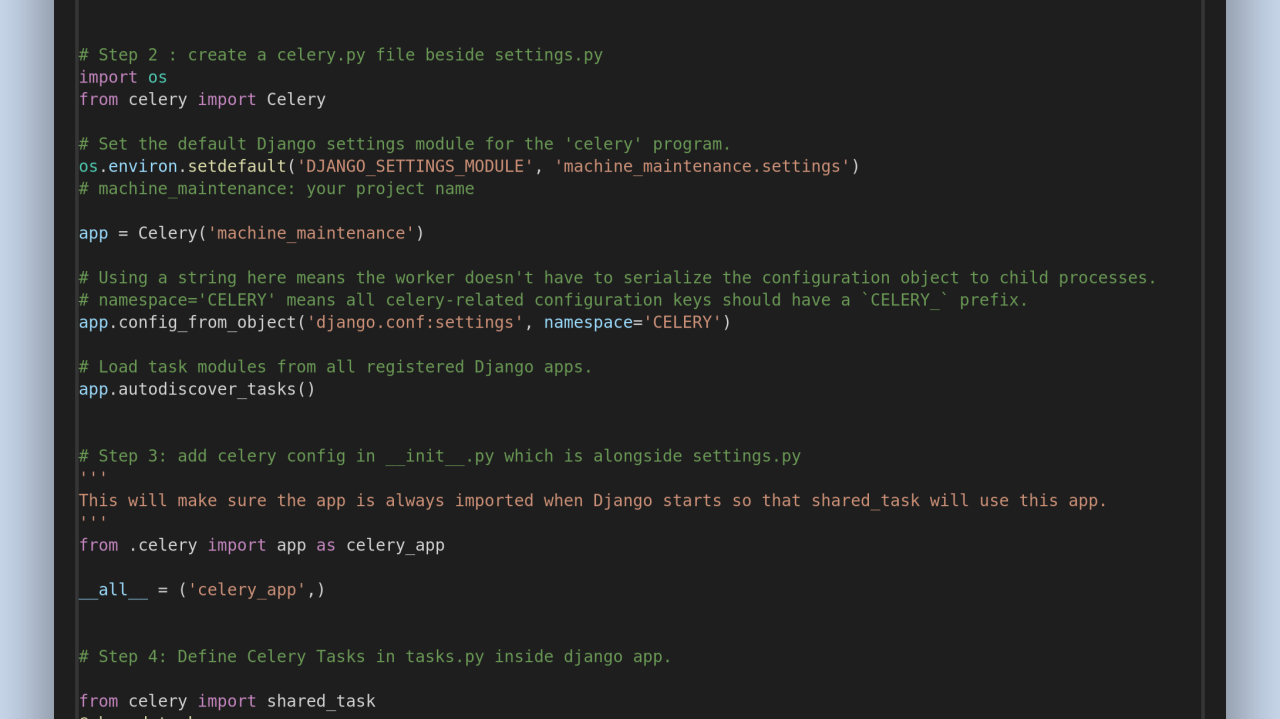
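Environment variables are always strings, so flags such as CELERY_TASK_TRACK_STARTED may need converting to real booleans before Celery reads them. How `env` handles this depends on the library behind it, which this post does not name. Below is a minimal standard-library sketch; `env_bool` is a hypothetical helper, not part of Django or Celery, and the values are examples only.

```python
import os


def env_bool(name, default=False):
    """Read an environment variable and interpret it as a boolean.

    Hypothetical helper for illustration; not part of Django or Celery.
    """
    raw = os.environ.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in {"1", "true", "yes", "on"}


# Example values, set here only so the sketch runs on its own.
os.environ.setdefault("CELERY_BROKER_URL", "redis://localhost:6379/5")
os.environ.setdefault("CELERY_TASK_TRACK_STARTED", "True")

CELERY_BROKER_URL = os.environ["CELERY_BROKER_URL"]
CELERY_TASK_TRACK_STARTED = env_bool("CELERY_TASK_TRACK_STARTED")
```

Without such a conversion, a value like the string "False" would still count as truthy in Python.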

Step 2: Create celery.py

import os

from celery import Celery

# Set the default Django settings module for the 'celery' program.

os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'machine_maintenance.settings')

app = Celery('machine_maintenance')

# Using a string here means the worker doesn't have to serialize the configuration object to child processes.

# - namespace='CELERY' means all celery-related configuration keys should have a `CELERY_` prefix.

app.config_from_object('django.conf:settings', namespace='CELERY')

# Load task modules from all registered Django apps.

app.autodiscover_tasks()
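To make the effect of namespace='CELERY' concrete, the sketch below imitates the mapping in plain Python: settings that start with CELERY_ are picked up and matched to Celery's lowercase setting names, and everything else is ignored. This is only an illustration of the naming rule, not Celery's own code; `strip_namespace` and the sample dictionary are hypothetical.

```python
def strip_namespace(settings, namespace="CELERY"):
    """Map prefixed Django setting names to lowercase Celery-style names.

    Illustration only: this is not how Celery is implemented internally.
    """
    prefix = namespace + "_"
    return {
        key[len(prefix):].lower(): value
        for key, value in settings.items()
        if key.startswith(prefix)
    }


django_settings = {
    "DEBUG": True,  # no CELERY_ prefix, so it is ignored
    "CELERY_BROKER_URL": "redis://localhost:6379/5",
    "CELERY_TASK_TIME_LIMIT": 30 * 30,
}

celery_config = strip_namespace(django_settings)
```

Here CELERY_BROKER_URL ends up as broker_url and CELERY_TASK_TIME_LIMIT as task_time_limit, which are the names used in Celery's configuration reference.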

Step 3: Add the Celery app configuration in __init__.py


# This will make sure the app is always imported when

# Django starts so that shared_task will use this app.

from .celery import app as celery_app

__all__ = ('celery_app',)

Step 4: Define Celery Tasks in tasks.py

# tasks.py

from celery import shared_task

@shared_task
def update_rent_billing_status_task(rmblm_id, ids_for_is_billed, machine_reference):

    # ... your task logic here ...

    pass

Step 5: Call your celery task wherever you need.

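# delay_on_commit (Celery 5.4+) queues the task only after the current database transaction commits.

update_rent_billing_status_task.delay_on_commit(

    rmblm_id=rmblm.id,

    ids_for_is_billed=rmbl_item['ids_for_is_billed'],

    machine_reference=rmbl_item['machine_reference'],

)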
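The call above passes plain IDs and values rather than model instances. Celery's default serializer is JSON, so task arguments need to survive a JSON round trip. A small sketch for checking a payload before queuing it follows; `json_round_trip` is a hypothetical helper and the sample values are made up for illustration.

```python
import json


def json_round_trip(**kwargs):
    """Return kwargs after a JSON round trip.

    json.dumps raises TypeError if any value is not JSON-serializable.
    Hypothetical helper for illustration; not part of Celery.
    """
    return json.loads(json.dumps(kwargs))


# Made-up sample values standing in for the real arguments.
payload = json_round_trip(
    rmblm_id=42,
    ids_for_is_billed=[1, 2, 3],
    machine_reference="M-001",
)
```

Passing a primary key and re-fetching the row inside the task also means the worker reads the current database state rather than a stale copy.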

Step 6: Run Celery locally

celery -A machine_maintenance worker --loglevel=info

Here, `machine_maintenance` is the Django project name.

#django #python #celery #asynchronous #python_developer #django_developer #software_engineer #TaskQueue #backend_developer #webDevelopment
