How TensorFlow Calculates Gradients

Let's reveal the magic behind the TF backward pass! It is important to understand if you want to build complex deep learning models with TF.

Training a deep learning model involves two phases:

- Forward Pass

- Backward Pass

In the forward pass you apply a sequence of operations to the input and obtain predictions or scores.

For example, in a convolutional neural net we write functions for the following operations and stack them on top of each other:

- Input Tensor

- Convolution Operation (Sliding a K*K filter)

- Applying a non-linearity (ReLU) to the neurons in the feature maps

- Max Pooling

- Softmax to turn the logits into class probabilities

- Cross-entropy loss
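To make the list concrete, here is a minimal pure-Python sketch of such a stack. To keep it short, it assumes a 1-D input, a single 2-tap filter and two classes, and it feeds the pooled features straight into the softmax instead of using a fully connected layer. The function names are illustrative and are not TF APIs.

```python
import math

def conv1d(x, kernel):
    # 'Valid' convolution: slide the K-tap filter over x without padding.
    # (Like most DL libraries, this is technically cross-correlation.)
    k = len(kernel)
    return [sum(x[i + j] * kernel[j] for j in range(k)) for i in range(len(x) - k + 1)]

def relu(v):
    return [max(0.0, a) for a in v]

def max_pool(v, size=2):
    # Non-overlapping windows; a trailing partial window is dropped.
    return [max(v[i:i + size]) for i in range(0, len(v) - size + 1, size)]

def softmax(logits):
    m = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, label):
    return -math.log(probs[label])

def forward(x, kernel, label):
    feature_map = relu(conv1d(x, kernel))
    logits = max_pool(feature_map)       # simplification: pooled features act as class scores
    probs = softmax(logits)
    return cross_entropy(probs, label), probs

loss, probs = forward([1.0, -2.0, 3.0, 0.5, -1.0, 2.0], kernel=[1.0, 0.5], label=1)
print(loss, probs)
```

Each function consumes the output of the previous one. That chain of operations is exactly what the backward pass later has to walk through in reverse.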

Then what? We need to go backward.

Now, if you are playing with a CNN written in Python with NumPy, as in the CS231 assignments, you need to write the backward API too ;)

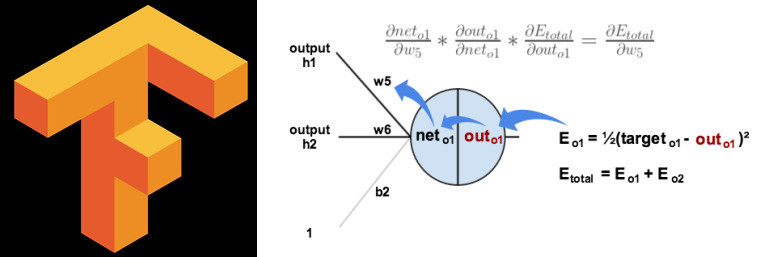

Basically you have to implement the chain rule.

So make sure you know your differentiation :D :D

For each of the operations mentioned above, you have to implement its derivative by hand.
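For a single sigmoid unit, the hand-written version could look like the sketch below. It assumes a scalar weight `w`, a bias `b`, an input `x`, a target `t` and a squared-error loss, all chosen only for illustration. The backward pass applies the chain rule one local derivative at a time. It is followed by a central-difference gradient check, a common way to catch mistakes in hand-written backward code.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(w, b, x, t):
    z = w * x + b
    y = sigmoid(z)
    loss = 0.5 * (y - t) ** 2           # squared error against target t
    return loss, (x, y, t)              # cache what the backward pass will need

def backward(cache):
    x, y, t = cache
    dloss_dy = y - t                    # d/dy of 0.5 * (y - t)^2
    dy_dz = y * (1.0 - y)               # sigmoid'(z) written in terms of y
    dloss_dz = dloss_dy * dy_dz         # chain rule
    return dloss_dz * x, dloss_dz       # (dL/dw, dL/db), since dz/dw = x and dz/db = 1

def numerical_grad(w, b, x, t, eps=1e-6):
    # Central differences: slow, but a handy check on a hand-written backward pass.
    dw = (forward(w + eps, b, x, t)[0] - forward(w - eps, b, x, t)[0]) / (2 * eps)
    db = (forward(w, b + eps, x, t)[0] - forward(w, b - eps, x, t)[0]) / (2 * eps)
    return dw, db

loss, cache = forward(0.5, -0.2, 1.5, 1.0)
analytic = backward(cache)
numeric = numerical_grad(0.5, -0.2, 1.5, 1.0)
print(analytic, numeric)
```

Now imagine doing this for convolution, max pooling and softmax as well, and keeping all of them correct.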

Yes! Yes! I know it sucks!

But in TF we only need to write the forward pass. How cool is that!

What is the magic?

There's no magic: someone has already implemented the derivatives for you ;)

Let's explore how they do it!

When thinking about how a TF program executes, we are all familiar with its two phases:

- Building the graph, which defines the model

- Running the graph in a session, which feeds the data in and returns the results

You can read more about both of them.
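The two phases can be imitated with a toy graph in plain Python. This is a hypothetical sketch: `Node`, `placeholder`, `constant` and `run` are made-up names, not TF APIs. Building `y` only records nodes. The arithmetic happens only when `run` is called with a feed dictionary, which is roughly the role a TF 1.x session plays. In TF that evaluation step would execute in the C++ engine, not in Python.

```python
class Node:
    """A node in a toy computation graph; creating it computes nothing."""
    def __init__(self, op, *inputs, name=None, value=None):
        self.op, self.inputs, self.name, self.value = op, inputs, name, value

def placeholder(name):
    return Node("placeholder", name=name)

def constant(value):
    return Node("const", value=value)

def add(a, b):
    return Node("add", a, b)

def mul(a, b):
    return Node("mul", a, b)

def run(node, feed_dict):
    """Evaluate `node` by recursively evaluating its inputs (the 'session' phase)."""
    if node.op == "placeholder":
        return feed_dict[node.name]
    if node.op == "const":
        return node.value
    args = [run(i, feed_dict) for i in node.inputs]
    if node.op == "add":
        return args[0] + args[1]
    if node.op == "mul":
        return args[0] * args[1]
    raise ValueError(f"unknown op {node.op!r}")

# Phase 1: build the graph (no arithmetic happens here).
x = placeholder("x")
w = constant(3.0)
b = constant(1.0)
y = add(mul(w, x), b)

# Phase 2: feed data in and get results.
print(run(y, {"x": 2.0}))   # 7.0
```

The same graph can be run again with different feeds without rebuilding it.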

Always remember that TF executes everything in its C++ engine.

Even a tiny multiplication is not executed in Python.

Python is just a wrapper.

The most important part of the TensorFlow graph is the backward-pass magic, which we call

Automatic Differentiation

There are two modes of automatic differentiation:

- Reverse mode: derivatives of a single output with respect to all inputs, in one pass.

- Forward mode: derivatives of all outputs with respect to a single input, in one pass.
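Here is a hand-rolled sketch of both modes on the hypothetical function f(x1, x2) = x1 * x2 + sin(x1). Forward mode, implemented here with dual numbers, needs one pass per input to build the full gradient. Reverse mode gets the derivatives with respect to all inputs from a single backward sweep. A training loss is one scalar that depends on many weights, which is why TF computes training gradients with reverse mode. `Dual` and the helper names are illustrative only.

```python
import math

class Dual:
    """Forward mode: a value paired with its derivative w.r.t. ONE seeded input."""
    def __init__(self, val, dot):
        self.val, self.dot = val, dot

    def __add__(self, other):
        return Dual(self.val + other.val, self.dot + other.dot)

    def __mul__(self, other):
        # Product rule.
        return Dual(self.val * other.val, self.dot * other.val + self.val * other.dot)

def dual_sin(a):
    return Dual(math.sin(a.val), math.cos(a.val) * a.dot)

def f(u, v):
    return u * v + dual_sin(u)

def forward_mode_grad(x1, x2):
    # One full pass per input, seeding that input's derivative with 1.
    df_dx1 = f(Dual(x1, 1.0), Dual(x2, 0.0)).dot
    df_dx2 = f(Dual(x1, 0.0), Dual(x2, 1.0)).dot
    return df_dx1, df_dx2

def reverse_mode_grad(x1, x2):
    # Forward sweep: evaluate and remember intermediates.
    a = x1 * x2
    s = math.sin(x1)
    value = a + s
    # Reverse sweep: seed df/df = 1, then push "adjoints" back toward every input.
    f_bar = 1.0
    a_bar = f_bar                               # f = a + s
    s_bar = f_bar
    x1_bar = a_bar * x2 + s_bar * math.cos(x1)  # x1 feeds both a and s, so contributions add
    x2_bar = a_bar * x1
    return value, (x1_bar, x2_bar)

print(forward_mode_grad(2.0, 3.0))
print(reverse_mode_grad(2.0, 3.0))
```

Both give df/dx1 = x2 + cos(x1) and df/dx2 = x1. Forward mode needs two passes here, and would need one per weight in a neural net.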

Both modes work on a computational graph.

Here is a simple computational graph, with one node for each operation.

I found a great introduction to computational graphs on Colah's blog:

Calculus on Computational Graphs: Backpropagation

So, for each operation we write in the forward pass, TensorFlow adds a node to its graph and connects the operations from top to bottom.

Here's a simple TF computational graph visualized with TensorBoard:

The graph above corresponds to this simple equation:

Output = dropout(sigmoid(Wx + b))
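Written out with the chain rule, the backward pass for this equation looks roughly like this. The derivation assumes a single input column vector x, a scalar loss L computed downstream of Output, and TF-style inverted dropout. In inverted dropout, each element is kept with probability q (`keep_prob`) according to a random 0/1 mask m, and kept elements are scaled by 1/q. It uses σ'(z) = σ(z)(1 − σ(z)).

$$
\begin{aligned}
z &= Wx + b, \qquad a = \sigma(z), \qquad \text{Output} = \tfrac{1}{q}\, m \odot a \\
\frac{\partial L}{\partial a} &= \tfrac{1}{q}\, m \odot \frac{\partial L}{\partial\, \text{Output}} \\
\frac{\partial L}{\partial z} &= \frac{\partial L}{\partial a} \odot a \odot (1 - a) \\
\frac{\partial L}{\partial W} &= \frac{\partial L}{\partial z}\, x^\top, \qquad
\frac{\partial L}{\partial b} = \frac{\partial L}{\partial z}, \qquad
\frac{\partial L}{\partial x} = W^\top \frac{\partial L}{\partial z}
\end{aligned}
$$

Each line reuses a value from the forward pass (m, a, x or W). These are the derivative terms TF assembles for us.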

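Now this is the simple part! Even though we never write the backward pass, TF automatically creates derivatives for all the operations, from top to bottom (loss function to weights).

We don't write any of this ourselves. When we ask for gradients (for example with `optimizer.minimize()` or `tf.gradients()` in TF 1.x), TF adds gradient operations for all the differentiable operations to the graph. When we run the graph in a session, those gradient operations are computed and combined with the chain rule.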
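The idea of adding gradient ops to the graph first and computing them later can be sketched with a toy graph in plain Python. Everything here is hypothetical. `Node`, `gradients` and `run` only echo the roles of TF 1.x's graph, `tf.gradients` and `Session.run`. `gradients` walks the forward graph in reverse and returns new nodes for the derivatives. Nothing is computed until `run` evaluates them with a feed dictionary.

```python
import math

class Node:
    """Toy graph node: an op name, its input nodes, and an optional payload."""
    def __init__(self, op, inputs=(), value=None, name=None):
        self.op, self.inputs, self.value, self.name = op, tuple(inputs), value, name

def const(v):
    return Node("const", value=v)

def placeholder(name):
    return Node("placeholder", name=name)

def add(a, b):
    return Node("add", (a, b))

def mul(a, b):
    return Node("mul", (a, b))

def sigmoid(a):
    return Node("sigmoid", (a,))

def gradients(y, xs):
    """Return new graph nodes for dy/dx for each x in xs; computes no numbers."""
    order, seen = [], set()
    def visit(n):                      # post-order: inputs before the ops that use them
        if id(n) not in seen:
            seen.add(id(n))
            for i in n.inputs:
                visit(i)
            order.append(n)
    visit(y)
    grad = {id(y): const(1.0)}
    for n in reversed(order):          # every consumer of n is handled before n
        g = grad.get(id(n))
        if g is None or not n.inputs:
            continue
        if n.op == "add":
            local = [g, g]
        elif n.op == "mul":
            lhs, rhs = n.inputs
            local = [mul(g, rhs), mul(g, lhs)]
        elif n.op == "sigmoid":
            # d sigmoid(z)/dz = s * (1 - s), where s is the FORWARD node n itself:
            # the backward graph holds a direct link into the forward graph.
            local = [mul(g, mul(n, add(const(1.0), mul(const(-1.0), n))))]
        else:
            raise ValueError(f"no gradient for op {n.op!r}")
        for inp, lg in zip(n.inputs, local):
            prev = grad.get(id(inp))
            grad[id(inp)] = lg if prev is None else add(prev, lg)   # sum over consumers
    return [grad.get(id(x), const(0.0)) for x in xs]

def run(n, feed):
    """Evaluate a node (no caching of shared subgraphs; fine for a toy)."""
    if n.op == "const":
        return n.value
    if n.op == "placeholder":
        return feed[n.name]
    args = [run(i, feed) for i in n.inputs]
    if n.op == "add":
        return args[0] + args[1]
    if n.op == "mul":
        return args[0] * args[1]
    if n.op == "sigmoid":
        return 1.0 / (1.0 + math.exp(-args[0]))
    raise ValueError(f"unknown op {n.op!r}")

# Graph construction: forward graph, then gradient nodes (still no arithmetic).
w, x, b = placeholder("w"), placeholder("x"), placeholder("b")
y = sigmoid(add(mul(w, x), b))
dy_dw, dy_db = gradients(y, [w, b])

# Execution: feed values and evaluate both forward and gradient nodes.
feed = {"w": 0.5, "x": 1.5, "b": -0.2}
print(run(y, feed), run(dy_dw, feed), run(dy_db, feed))
```

Note that the sigmoid gradient node points back at the forward sigmoid node. This mirrors how a backward graph reuses forward results instead of recomputing them from scratch.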

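Basically, the TF C++ engine consists of the following two things:

- Efficient implementations of operations like convolution, max pooling, sigmoid, etc.

- Derivatives (gradient functions) of the forward-pass operations. In TF 1.x many of these gradient functions are defined in Python with `tf.RegisterGradient`, but the gradient operations they build execute in the C++ engine like any other operation.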
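Here is a hypothetical sketch of that second ingredient: a registry that maps each op type to its gradient function, loosely modelled on how TF pairs op types with gradients through `tf.RegisterGradient`. The names `GRADIENT_REGISTRY`, `register_gradient`, `FORWARD_KERNELS` and `run_with_gradients` are made up for illustration, and everything is scalar Python rather than C++ kernels. The backward pass never needs to know what an op is. It only looks up the op's gradient function and applies the chain rule.

```python
GRADIENT_REGISTRY = {}   # hypothetical: op type -> gradient function

def register_gradient(op_type):
    """Decorator that files a gradient function under an op type."""
    def decorator(fn):
        GRADIENT_REGISTRY[op_type] = fn
        return fn
    return decorator

# Each gradient function maps (input values, output value, upstream grad)
# to one gradient per input.
@register_gradient("Mul")
def _mul_grad(inputs, output, grad):
    a, b = inputs
    return [grad * b, grad * a]

@register_gradient("Add")
def _add_grad(inputs, output, grad):
    return [grad, grad]

@register_gradient("Relu")
def _relu_grad(inputs, output, grad):
    return [grad if output > 0 else 0.0]

FORWARD_KERNELS = {
    "Mul": lambda a, b: a * b,
    "Add": lambda a, b: a + b,
    "Relu": lambda a: max(0.0, a),
}

def run_with_gradients(ops, feeds, target):
    """`ops` is a list of (output_name, op_type, input_names) in execution order."""
    values = dict(feeds)
    for out, op_type, ins in ops:                       # forward pass
        values[out] = FORWARD_KERNELS[op_type](*[values[i] for i in ins])
    grads = {target: 1.0}
    for out, op_type, ins in reversed(ops):             # backward pass
        if out not in grads:
            continue                                    # op does not affect the target
        in_grads = GRADIENT_REGISTRY[op_type]([values[i] for i in ins], values[out], grads[out])
        for name, g in zip(ins, in_grads):
            grads[name] = grads.get(name, 0.0) + g      # sum over every consumer
    return values, grads

ops = [("wx", "Mul", ["w", "x"]), ("z", "Add", ["wx", "b"]), ("y", "Relu", ["z"])]
values, grads = run_with_gradients(ops, {"w": 2.0, "x": 3.0, "b": -1.0}, "y")
print(values["y"], grads["w"], grads["x"], grads["b"])   # 5.0 3.0 2.0 1.0
```

Supporting a new op means adding one forward kernel and one registered gradient function. The traversal code stays the same.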

Finally, here's a nice graph I found in CS224 (Deep Learning for NLP).

The forward pass in that graph consists of:

- Variables and placeholders (weights W, input x, bias b)

- Operations (the ReLU non-linearity, softmax, cross-entropy loss, etc.)

In the following graph you can clearly see how TF automatically creates the backward pass:

- Left - Forward pass graph (this is what we create with TF)

- Right - Backward pass graph (TF creates this automatically)

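You can see the connecting lines between the two graphs. They are also generated automatically (by reverse-mode automatic differentiation).

These connections carry values computed in the forward pass into the backward pass, because the chain rule needs them to compute each local gradient. That is what keeps the training process running.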
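To see why those connections matter, here is a hypothetical pure-Python version of a graph like the CS224 one. It assumes a single example, logits = ReLU(Wx + b) with no extra layer, and nested lists instead of tensors. The backward pass cannot run on gradients alone. It reuses the pre-activation z (for the ReLU gate), the softmax probabilities p and the input x. Those are exactly the kinds of values that flow from the forward graph to the backward graph.

```python
import math

def forward(W, b, x, label):
    z = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i for row, b_i in zip(W, b)]
    h = [max(0.0, z_i) for z_i in z]                  # ReLU
    m = max(h)
    exps = [math.exp(h_i - m) for h_i in h]
    total = sum(exps)
    p = [e / total for e in exps]                      # softmax
    loss = -math.log(p[label])                         # cross-entropy
    cache = (x, z, p, label)                           # forward values reused by backward
    return loss, cache

def backward(cache):
    x, z, p, label = cache
    dh = [p_i - (1.0 if i == label else 0.0) for i, p_i in enumerate(p)]  # softmax + CE
    dz = [dh_i if z_i > 0 else 0.0 for dh_i, z_i in zip(dh, z)]           # ReLU gate uses z
    dW = [[dz_i * x_j for x_j in x] for dz_i in dz]                        # outer product uses x
    db = dz
    return dW, db

W = [[0.2, -0.5], [0.7, 0.1], [-0.3, 0.4]]
b = [0.1, 0.0, 0.2]
x = [1.0, 2.0]
loss, cache = forward(W, b, x, label=1)
dW, db = backward(cache)
print(loss, dW, db)
```

The `cache` tuple plays the role of the connecting lines. Without it, `backward` would have to redo the forward computation.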
