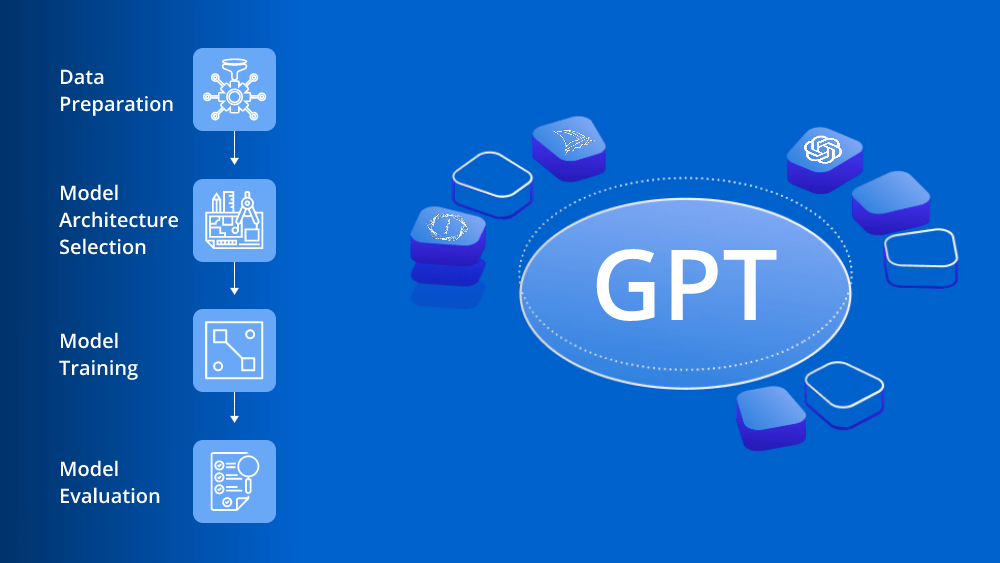

How to build a GPT model?

GPT models, short for Generative Pre-trained Transformers, are deep learning language models built to generate text that resembles human writing. Developed by OpenAI, the series has gone through several iterations, including GPT-1, GPT-2, GPT-3, and GPT-4.

Debuting in 2018, GPT-1 opened the series by applying generative pre-training to the Transformer architecture. It had 117 million parameters and was trained on the BookCorpus dataset. While capable of generating coherent text given context, GPT-1 was prone to repetition and struggled with intricate dialogue and long-range dependencies.

In 2019, OpenAI unveiled GPT-2, a significantly larger model with 1.5 billion parameters trained on WebText, a much larger corpus of web pages. Its notable strength lay in producing realistic text and human-like responses, though it had difficulty maintaining coherence over extended passages.

The arrival of GPT-3 in 2020 represented a monumental advancement. With an unprecedented 175 billion parameters and extensive training data, GPT-3 demonstrated remarkable proficiency across diverse tasks, from generating text to coding, artistic creation, and beyond. Despite its versatility, GPT-3 exhibited biases and inaccuracies.
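The parameter counts quoted above can be roughly reproduced from each model's depth and width. The sketch below is a back-of-the-envelope estimate, not OpenAI's own accounting. It assumes about 12·d² weights per Transformer layer (4·d² for attention plus 8·d² for a feed-forward block with hidden size 4·d) and ignores biases and layer norms. The layer counts, widths, vocabulary sizes, and context lengths are the largest configurations as reported in the GPT papers; `estimate_params` and `CONFIGS` are illustrative names.

```python
# Rough parameter-count estimate for a decoder-only Transformer.
# Assumption: ~4*d^2 attention weights + ~8*d^2 feed-forward weights per layer;
# biases and layer-norm parameters are ignored.

def estimate_params(n_layers: int, d_model: int, vocab_size: int, n_ctx: int) -> int:
    per_layer = 12 * d_model ** 2                  # attention + feed-forward
    embeddings = (vocab_size + n_ctx) * d_model    # token + position embeddings
    return n_layers * per_layer + embeddings

# (layers, model width, vocabulary size, context length) for the largest variants
CONFIGS = {
    "GPT-1": (12, 768, 40478, 512),
    "GPT-2": (48, 1600, 50257, 1024),
    "GPT-3": (96, 12288, 50257, 2048),
}

for name, cfg in CONFIGS.items():
    print(f"{name}: ~{estimate_params(*cfg) / 1e6:,.0f}M parameters")
```

Under these assumptions the estimates land within a few percent of the 117 million, 1.5 billion, and 175 billion figures, which shows that nearly all of the growth comes from deeper and wider layers rather than from the embeddings.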

After GPT-3, OpenAI launched an enhanced iteration, GPT-3.5, followed by the release of GPT-4 in March 2023. At the time of writing, GPT-4 is OpenAI's most recent and most capable language model, and it is multimodal: besides text, it can accept image inputs for tasks such as captioning, classification, and analysis. It also handles creative work such as composing songs and writing screenplays. At launch it was available in two variants, gpt-4 and gpt-4-32k, which differ in context window size (roughly 8K and 32K tokens). GPT-4 represents a significant step forward in understanding complex prompts and approaches human-level performance on a range of professional and academic benchmarks.

However, the potent capabilities of GPT-4 raise valid concerns regarding potential misuse and ethical implications. It remains imperative to approach the exploration of GPT models with a mindful consideration of these factors.

Use cases of GPT models

GPT models are used across many sectors. Here, we'll look at five use cases: language understanding, content generation for user interface design, image recognition in computer vision systems, customer support chatbots, and language translation.

Language Understanding through NLP

GPT models play a pivotal role in advancing computers' comprehension of human language, spanning two crucial domains:

Content Generation for User Interface Design

GPT models are used to generate content for user interface design, which simplifies tasks such as building web pages to which users can upload many kinds of content with minimal effort. The generated content ranges from basic elements like captions, titles, descriptions, and alt tags to interactive components like buttons, quizzes, and cards. This automation reduces the need for additional development resources and investment.


Applications in Computer Vision Systems for Image Recognition

Beyond text processing, the Transformer architecture behind GPT models is also applied in computer vision systems for tasks such as image recognition. These systems identify and categorize specific elements within images, including faces, colors, and landmarks.

Enhancing Customer Support with AI-powered Chatbots

Chatbots powered by GPT models are reshaping customer support. Built on models such as GPT-4, they interpret customer queries and answer in natural, conversational language. Detailed responses and round-the-clock availability improve customer service, which in turn supports satisfaction and loyalty.
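One practical detail in a support chatbot is that the conversation has to fit inside the model's context window. The sketch below shows one way to trim a chat history to a token budget by keeping the system instruction and the most recent turns. It is a minimal illustration, not a production approach: the `role`/`content` message shape is assumed, `count_tokens` is a crude word-count stand-in for a real tokenizer, and `trim_history` is a hypothetical helper name.

```python
from typing import Dict, List

Message = Dict[str, str]

def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())

def trim_history(messages: List[Message], max_tokens: int) -> List[Message]:
    """Keep system messages plus the most recent turns that fit within max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    for msg in reversed(turns):          # walk from newest to oldest
        cost = count_tokens(msg["content"])
        if cost > budget:
            break                        # older turns no longer fit
        kept.append(msg)
        budget -= cost
    return system + kept[::-1]           # restore chronological order

# Illustrative conversation (made-up data)
history = [
    {"role": "system", "content": "You are a support assistant for an online store."},
    {"role": "user", "content": "Where is my order?"},
    {"role": "assistant", "content": "Could you share the order number?"},
    {"role": "user", "content": "It is 12345 and it was due yesterday."},
]
trimmed = trim_history(history, max_tokens=25)
for m in trimmed:
    print(f'{m["role"]}: {m["content"]}')
```

With a budget of 25 word-tokens, the oldest user turn is dropped while the system instruction and the two most recent turns are kept. A real deployment would count tokens with the model's own tokenizer and might summarize older turns instead of discarding them.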

Bridging Language Barriers with Accurate Translation

GPT-4 performs well at language translation, converting text across multiple languages while preserving nuance and context. This capability helps bridge language barriers and supports global communication by making information accessible to diverse audiences.

Considerations while Building GPT Models

Conclusion

GPT models mark a significant advance in AI development and are part of a broader trend toward large language models (LLMs) that is expected to keep growing. OpenAI's decision to offer API access fits its model-as-a-service business strategy. GPT's language capabilities also make new kinds of products possible, since the models perform well at tasks like text summarization, classification, and interaction. These models are expected to shape the future of the internet and the way we use technology and software. Building a GPT model can be challenging, but with the right approach and tools it is a rewarding undertaking that opens up new opportunities for NLP applications.

Source URL: https://www.leewayhertz.com/build-a-gpt-model/
