Hierarchical Clustering: A Comprehensive Guide to Understanding and Applying This Powerful Data Analysis Technique

Massimo Re

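Sun Tzu was a Chinese general, writer, and philosopher born in 672 BC. His work, The Art of War, is one of the oldest and most influential texts in the history of warfare. Sun Tzu believed that a good general defends the borders of his own country but attacks the enemy. He also held that a general should use his army to surround the enemy so that the opponent has no chance to escape. The Sun Tzu quotation below uses the technique of surrounding your enemy to explain how to take over.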

Keywords and Keyphrases: Hierarchical clustering, cluster analysis, dendrogram, agglomerative hierarchical clustering, divisive hierarchical clustering, distance measure, applications of hierarchical clustering

Meta Description: Delve into the world of hierarchical clustering, a versatile data analysis technique that organizes data into a hierarchy of clusters. Discover its types, applications, and step-by-step implementation using agglomerative hierarchical clustering.

Index

- Representation-based

- Density-based

Regression

Classification

- Logistic regression

- Naive Bayes and Bayesian Belief Network

- k-nearest neighbor

- Decision trees

- Ensemble methods

Advanced Topics

- Time series

Hierarchical clustering

Hierarchical clustering is a type of cluster analysis that aims to organize data into a hierarchy of clusters.

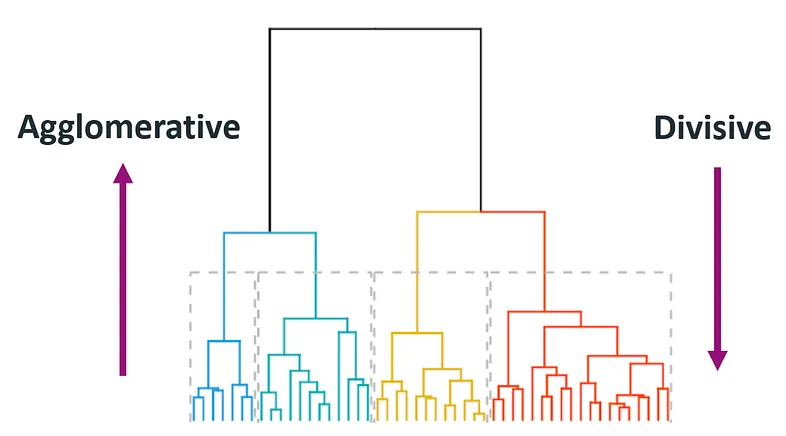

The hierarchy is often represented as a tree-like structure called a dendrogram.

In hierarchical clustering, similar data points are grouped and then successively combined into larger clusters.

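There are two main types of hierarchical clustering:

- Agglomerative (bottom-up): each data point starts as its own cluster, and the two closest clusters are merged repeatedly until a single cluster remains.

- Divisive (top-down): all data points start in one cluster, which is split recursively into smaller clusters.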

Hierarchical clustering has various applications, including:

The advantage of hierarchical clustering is that it provides a hierarchy of clusters, allowing for different levels of granularity in the analysis. However, it can be computationally expensive, especially for large datasets. The choice between agglomerative and divisive clustering depends on the specific requirements of the analysis.
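To make the idea of granularity concrete, here is a minimal sketch that stops merging once the closest pair of clusters is farther apart than a chosen threshold. The one-dimensional values, the function name `clusters_at`, and the use of single linkage (closest-member distance) are assumptions made for illustration, not part of the original text.

```python
from itertools import combinations

def gap(c1, c2):
    """Single-linkage gap: distance between the closest members of two clusters."""
    return min(abs(x - y) for x in c1 for y in c2)

def clusters_at(values, threshold):
    """Merge the closest clusters until the smallest gap exceeds the threshold."""
    clusters = [[v] for v in sorted(values)]
    while len(clusters) > 1:
        # Scanning every pair on every round is what makes the naive
        # procedure expensive on large datasets.
        a, b = min(combinations(clusters, 2), key=lambda pair: gap(*pair))
        if gap(a, b) > threshold:
            break
        clusters = [c for c in clusters if c is not a and c is not b]
        clusters.append(sorted(a + b))
    return sorted(clusters)

values = [1, 2, 6, 7, 15]  # hypothetical data, chosen only for illustration
for t in (0, 1, 4, 8):
    print(f"threshold {t}: {clusters_at(values, t)}")
```

A low threshold leaves many small clusters and a high one leaves a few large clusters. This is the same as cutting the hierarchy at different heights.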

Exercise: Agglomerative Hierarchical Clustering

Imagine you have a dataset containing the two-dimensional coordinates of some points:

A(2, 3), B(5, 4), C(9, 6), D(8, 2), E(7, 5)

Use agglomerative hierarchical clustering to create a hierarchy of clusters. As the distance measure, use the Euclidean distance.

Solution:

1. Calculate Distances:

Calculate the Euclidean distance between all points and create a distance matrix:
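The pairwise distances can be computed with a few lines of standard-library Python. This is a minimal sketch: the dictionary layout and the helper name `distance_matrix` are illustrative choices, and `math.dist` requires Python 3.8 or later.

```python
import math

# Points from the exercise.
points = {"A": (2, 3), "B": (5, 4), "C": (9, 6), "D": (8, 2), "E": (7, 5)}

def distance_matrix(pts):
    """Return {(p, q): Euclidean distance} for every unordered pair of labels."""
    labels = sorted(pts)
    return {
        (p, q): math.dist(pts[p], pts[q])
        for i, p in enumerate(labels)
        for q in labels[i + 1:]
    }

dist = distance_matrix(points)
for (p, q), d in dist.items():
    print(f"d({p},{q}) = {d:.3f}")
```

The smallest distance, sqrt(5) ≈ 2.236, occurs twice: between B and E and between C and E. The next smallest, sqrt(10) ≈ 3.162, also occurs twice: between A and B and between D and E.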

2. Add Clusters One by One:
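The merging loop can be sketched as follows. The exercise does not say which linkage criterion to use, so this sketch assumes single linkage (the distance between two clusters is the distance between their closest members). The function names are illustrative, and a different linkage would give different merge heights.

```python
import math
from itertools import combinations

# Points from the exercise.
points = {"A": (2, 3), "B": (5, 4), "C": (9, 6), "D": (8, 2), "E": (7, 5)}

def single_linkage(c1, c2, pts):
    """Assumed linkage: distance between the closest pair of members."""
    return min(math.dist(pts[p], pts[q]) for p in c1 for q in c2)

def agglomerate(pts, linkage=single_linkage):
    """Repeatedly merge the two closest clusters; return the merge history."""
    clusters = [(label,) for label in sorted(pts)]
    history = []
    while len(clusters) > 1:
        # min() returns the first minimal pair, so ties follow list order.
        a, b = min(
            combinations(clusters, 2),
            key=lambda pair: linkage(pair[0], pair[1], pts),
        )
        height = linkage(a, b, pts)
        clusters = [c for c in clusters if c not in (a, b)]
        clusters.append(tuple(sorted(a + b)))
        history.append((a, b, height))
    return history

merges = agglomerate(points)
for a, b, h in merges:
    print(f"merge {a} + {b} at distance {h:.3f}")
```

Under this assumption, B and E merge first at sqrt(5) ≈ 2.236 and C joins them at the same distance. A and D then each attach at sqrt(10) ≈ 3.162. Both steps involve ties, so the order of tied merges depends on the tie-breaking rule.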

3. Visualize as a Dendrogram:
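A dendrogram records which clusters merged and at what distance. As a rough text-only stand-in for a plotted dendrogram, the sketch below builds the merge tree and prints it with indentation. It again assumes single linkage, which the exercise does not specify, and the helper names are hypothetical.

```python
import math
from itertools import combinations

# Points from the exercise.
points = {"A": (2, 3), "B": (5, 4), "C": (9, 6), "D": (8, 2), "E": (7, 5)}

def single_linkage_tree(pts):
    """Build a nested (left, right, height) tree by single-linkage merging."""
    # Each cluster is (members, subtree); a leaf's subtree is just its label.
    clusters = [((label,), label) for label in sorted(pts)]

    def gap(c1, c2):
        return min(math.dist(pts[p], pts[q]) for p in c1[0] for q in c2[0])

    while len(clusters) > 1:
        a, b = min(combinations(clusters, 2), key=lambda pair: gap(*pair))
        merged = (a[0] + b[0], (a[1], b[1], gap(a, b)))
        clusters = [c for c in clusters if c is not a and c is not b]
        clusters.append(merged)
    return clusters[0][1]

def render(node, depth=0):
    """Return the tree as indented text lines, one merge or leaf per line."""
    pad = "  " * depth
    if isinstance(node, str):
        return [f"{pad}{node}"]
    left, right, height = node
    lines = [f"{pad}merge at {height:.3f}"]
    return lines + render(left, depth + 1) + render(right, depth + 1)

tree = single_linkage_tree(points)
print("\n".join(render(tree)))
```

Each "merge at" line corresponds to a horizontal bar in a plotted dendrogram, drawn at that height, with the indented lines beneath it as its two branches.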

This example illustrates how to implement agglomerative hierarchical clustering step by step on a small dataset. You can use programming tools or specialized software to automate this process on larger datasets.
