Handling Imbalanced Datasets by Oversampling and Undersampling with Python Implementation

Lakshmi Prabha Ramesh

IoT Operations Management | Machine Learning | Data Science | Cybersecurity

What is Imbalanced data?

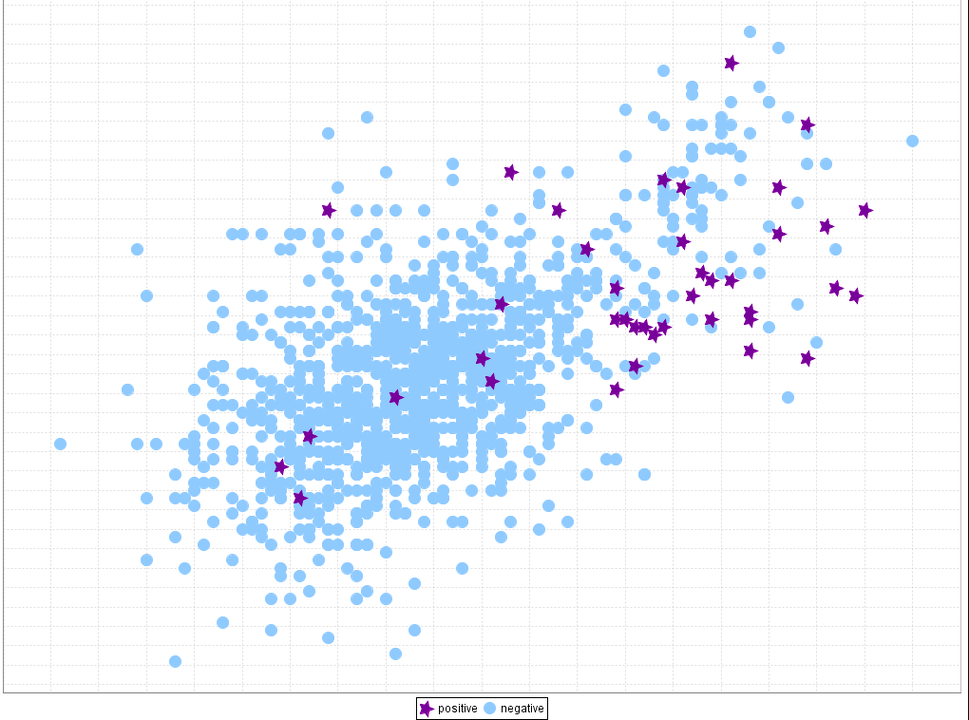

Many real-world classification datasets are imbalanced: the class labels are heavily skewed, so there is a majority class with many examples and a minority class with few. Such a dataset is called an imbalanced dataset, and the ratio between the sizes of the classes is called the imbalance ratio.
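As a small illustration, the imbalance ratio can be computed from the class counts. The sketch below assumes the convention "majority count divided by minority count" (other sources use the inverse). The helper `imbalance_ratio` and the toy labels are hypothetical and exist only for this example.

```python
from collections import Counter

def imbalance_ratio(labels):
    """Hypothetical helper: majority-class count divided by minority-class count."""
    counts = Counter(labels)
    if len(counts) < 2:
        raise ValueError("need at least two classes")
    return max(counts.values()) / min(counts.values())

# Hypothetical toy labels: 90 samples of class 0 and 10 samples of class 1
toy_labels = [0] * 90 + [1] * 10
print(Counter(toy_labels))          # Counter({0: 90, 1: 10})
print(imbalance_ratio(toy_labels))  # 9.0
```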

Issue with Imbalanced data

A model trained on imbalanced data tends to be biased toward the majority class, because that class supplies most of the training examples. The greater the imbalance ratio, the stronger this bias. Accuracy also becomes misleading. If 99% of the samples belong to the majority class, a model that always predicts the majority class reaches 99% accuracy while never detecting a single minority-class sample. To get less biased results, we therefore need to balance the dataset.
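The following sketch makes the accuracy problem concrete. It uses hypothetical labels with 99 majority-class samples and 1 minority-class sample, and a "model" that always predicts the majority class. The `accuracy` and `recall` helpers are written out by hand for illustration. Recall is the fraction of actual positives that are correctly predicted, TP / (TP + FN), and it is the metric used later in this article.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def recall(y_true, y_pred, positive=1):
    """TP / (TP + FN) for the given positive (minority) class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    return tp / (tp + fn) if (tp + fn) else 0.0

# Hypothetical labels: 99 majority-class samples (0) and 1 minority-class sample (1)
y_true = [0] * 99 + [1]
# A trivial "model" that always predicts the majority class
y_pred = [0] * len(y_true)

print(accuracy(y_true, y_pred))  # 0.99
print(recall(y_true, y_pred))    # 0.0
```

The accuracy looks excellent, yet the minority class is never found. For this reason, the experiments below compare recall scores.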

Handling Biased or Imbalanced Dataset

We therefore need to balance the dataset first. Resampling is the technique commonly used to reduce this bias, and there are two ways of resampling: oversampling and undersampling.

In oversampling, the simplest way to enlarge the minority class is to duplicate randomly chosen minority data points. This may lead to overfitting.
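Random oversampling by duplication can be sketched in a few lines of NumPy. The helper `random_oversample` is hypothetical and written from scratch for illustration. It assumes a binary problem in which the label `minority` marks the smaller class. imbalanced-learn offers this idea as `RandomOverSampler`, but the code below is not that class's implementation.

```python
import numpy as np

def random_oversample(X, y, minority=1, seed=0):
    """Hypothetical helper: duplicate random minority rows until both classes are equal."""
    rng = np.random.default_rng(seed)
    minority_idx = np.flatnonzero(y == minority)
    majority_idx = np.flatnonzero(y != minority)
    n_extra = len(majority_idx) - len(minority_idx)
    # Sample with replacement: the same minority row may be copied several times
    extra_idx = rng.choice(minority_idx, size=n_extra, replace=True)
    keep = np.concatenate([np.arange(len(y)), extra_idx])
    return X[keep], y[keep]

# Toy data: 8 rows of class 0 and 2 rows of class 1
X = np.arange(20, dtype=float).reshape(10, 2)
y = np.array([0] * 8 + [1] * 2)
X_res, y_res = random_oversample(X, y)
print(np.bincount(y_res))  # [8 8]
```

No new information is created, because every added row is an exact copy of an existing minority row. This is why duplication can encourage overfitting.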

In undersampling, the simplest way to shrink the majority class is to remove randomly chosen majority data points. This may lead to loss of relevant information.
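Random undersampling is the mirror image of random oversampling. The hypothetical helper below keeps every minority row and a random subset of majority rows of the same size, drawn without replacement. It is an illustrative NumPy sketch under a binary-label assumption, not imbalanced-learn's `RandomUnderSampler` code.

```python
import numpy as np

def random_undersample(X, y, minority=1, seed=0):
    """Hypothetical helper: drop random majority rows until both classes are equal."""
    rng = np.random.default_rng(seed)
    minority_idx = np.flatnonzero(y == minority)
    majority_idx = np.flatnonzero(y != minority)
    # Sample without replacement: each kept majority row appears exactly once
    kept_majority = rng.choice(majority_idx, size=len(minority_idx), replace=False)
    keep = np.sort(np.concatenate([kept_majority, minority_idx]))
    return X[keep], y[keep]

# Toy data: 8 rows of class 0 and 2 rows of class 1
X = np.arange(20, dtype=float).reshape(10, 2)
y = np.array([0] * 8 + [1] * 2)
X_res, y_res = random_undersample(X, y)
print(np.bincount(y_res))                     # [2 2]
print(len(X) - len(X_res), "rows discarded")  # 6 rows discarded
```

The discarded rows are exactly the "loss of relevant information" mentioned above.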

Let us see how to implement both in Python. This requires the imbalanced-learn package, which can be installed with pip:

pip install imbalanced-learn

Oversampling Methods

SMOTE (Synthetic Minority Oversampling TEchnique)
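SMOTE does not duplicate rows. It creates synthetic minority samples by picking a minority sample, picking one of its k nearest minority-class neighbours, and placing a new point at a random position on the line segment between them. The hypothetical `smote_sketch` function below shows this interpolation step in plain NumPy, using Euclidean distance. It is a simplified illustration, not imbalanced-learn's `SMOTE` implementation. The `k` argument plays the same role as the `k_neighbors` parameter used later in this article.

```python
import numpy as np

def smote_sketch(X_min, n_new, k=5, seed=0):
    """Hypothetical SMOTE-style generator: n_new synthetic rows from minority rows X_min."""
    rng = np.random.default_rng(seed)
    n = len(X_min)
    k = min(k, n - 1)  # a point cannot have more neighbours than other minority points
    # Pairwise Euclidean distances between minority samples
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # a point is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]
    synthetic = np.empty((n_new, X_min.shape[1]))
    for j in range(n_new):
        i = rng.integers(n)                  # random minority sample
        nn = neighbours[i, rng.integers(k)]  # one of its k nearest minority neighbours
        gap = rng.random()                   # uniform random number in [0, 1)
        synthetic[j] = X_min[i] + gap * (X_min[nn] - X_min[i])
    return synthetic

# Toy minority class: the four corners of the unit square
X_min = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
new_rows = smote_sketch(X_min, n_new=6, k=2)
print(new_rows.shape)  # (6, 2)
```

Every synthetic point lies between two existing minority points, so here all of them fall inside the unit square.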

Undersampling Methods

Tomek Links (T-links)
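A Tomek link is a pair of samples from different classes that are each other's nearest neighbour. Such pairs sit on the class boundary, and undersampling with Tomek links commonly removes the majority-class member of each pair. The hypothetical helper below finds the links by brute force with Euclidean distance. It is an illustration only. imbalanced-learn provides this technique as `TomekLinks`.

```python
import numpy as np

def tomek_links(X, y):
    """Hypothetical helper: index pairs (i, j) that form Tomek links."""
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)
    nearest = dists.argmin(axis=1)
    links = []
    for i, j in enumerate(nearest):
        # Mutual nearest neighbours with different labels; i < j avoids listing a pair twice
        if nearest[j] == i and y[i] != y[j] and i < j:
            links.append((i, int(j)))
    return links

# Toy 1-D data: samples 1 and 2 are mutual nearest neighbours with different labels
X = np.array([[0.0], [1.0], [1.2], [5.0], [6.0]])
y = np.array([0, 0, 1, 0, 0])
links = tomek_links(X, y)
print(links)  # [(1, 2)]

# Remove the majority-class (label 0) member of each link
majority = 0
drop = {i if y[i] == majority else j for i, j in links}
keep = [k for k in range(len(y)) if k not in drop]
print(keep)  # [0, 2, 3, 4]
```

Samples 3 and 4 are also mutual nearest neighbours, but they share a label, so they do not form a Tomek link.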

Cluster Centroid based undersampling
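Cluster-centroid undersampling replaces the majority class with the centroids of a clustering of that class, typically one centroid per minority sample, so the classes end up the same size. imbalanced-learn offers this as `ClusterCentroids`, which by default clusters with k-means. The sketch below assumes a binary problem and uses a minimal hand-written k-means (Lloyd's algorithm) instead of scikit-learn. Both `kmeans` and `cluster_centroid_undersample` are hypothetical helpers for illustration.

```python
import numpy as np

def kmeans(X, k, n_iter=50, seed=0):
    """Hypothetical minimal Lloyd's k-means; returns the k cluster centroids."""
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Assign every point to its closest centroid
        labels = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2).argmin(axis=1)
        for c in range(k):
            members = X[labels == c]
            if len(members):  # keep the old centroid if a cluster becomes empty
                centroids[c] = members.mean(axis=0)
    return centroids

def cluster_centroid_undersample(X, y, majority=0):
    """Replace the majority class by as many k-means centroids as there are minority rows."""
    X_maj, X_min = X[y == majority], X[y != majority]
    centroids = kmeans(X_maj, k=len(X_min))
    X_res = np.vstack([centroids, X_min])
    y_res = np.concatenate([np.full(len(centroids), majority), y[y != majority]])
    return X_res, y_res

# Toy data: two majority blobs of three points each, plus two minority points
X = np.array([[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10],
              [5, 5], [6, 6]], dtype=float)
y = np.array([0, 0, 0, 0, 0, 0, 1, 1])
X_res, y_res = cluster_centroid_undersample(X, y)
print(np.bincount(y_res))  # [2 2]
```

Unlike random undersampling, each majority blob is summarised by its centre rather than dropped at random. The new majority rows are synthetic points, not original samples.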

Python Implementation

Here we will use the Pima Indians Diabetes dataset from Kaggle to demonstrate both SMOTE and RandomUnderSampler. RandomUnderSampler undersamples the majority class by randomly picking samples, with or without replacement.

Imports

# To help with reading and manipulation of data

import numpy as np

from numpy import array

import pandas as pd

# To split the data

from sklearn.model_selection import train_test_split

# To build a decision tree model

from sklearn.tree import DecisionTreeClassifier

# To get different performance metrics

import sklearn.metrics as metrics

from sklearn.metrics import recall_score

# To undersample and oversample the data

from imblearn.over_sampling import SMOTE

from imblearn.under_sampling import RandomUnderSampler

Load and view Data

# Load the Pima Indians Diabetes data (hypothetical file name: point this at your own copy)

# The target column is assumed to be named "class", as in the code below

pdata = pd.read_csv("pima-indians-diabetes.csv")

# View the first few rows and the overall class counts

print(pdata.head())

print(pdata["class"].value_counts())


Splitting the Dataset and viewing the counts

X = pdata.drop(["class"], axis=1)

y = pdata["class"]

# splitting the dataset into 70% train and 30% test

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.30, random_state=1)

# Proportion of each class in the train and test sets

print(y_train.value_counts(normalize=True))

print("*" * 80)

print(y_test.value_counts(normalize=True))

print("*" * 80)

Building a Baseline Decision Tree Model and checking the Recall score

dtree1 = DecisionTreeClassifier(random_state=1, max_depth=4)

# training the decision tree model on the original (imbalanced) training set

dtree1.fit(X_train, y_train)

# Predicting the target for train and test set

pred_train = dtree1.predict(X_train)

pred_test = dtree1.predict(X_test)

# Checking recall score on the original train and test set

print(recall_score(y_train, pred_train))

print(recall_score(y_test, pred_test))

Oversampling train data using SMOTE

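# SMOTE synthesises new minority samples from each sample's k_neighbors nearest minority neighbours

# sampling_strategy="auto" grows the minority class until it matches the majority class

sm = SMOTE(sampling_strategy="auto", random_state=1, k_neighbors=5)

# Resample the training set only; the test set is left untouched

X_train_over, y_train_over = sm.fit_resample(X_train, y_train)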

print("Before OverSampling, count of label '1': {}".format(sum(y_train == 1)))

print("Before OverSampling, count of label '0': {} \n".format(sum(y_train == 0)))

print("After OverSampling, count of label '1': {}".format(sum(y_train_over == 1)))

print("After OverSampling, count of label '0': {} \n".format(sum(y_train_over == 0)))

print("After OverSampling, the shape of train_X: {}".format(X_train_over.shape))

print("After OverSampling, the shape of train_y: {} \n".format(y_train_over.shape))

Building a Decision Tree Model with Oversampled Data and checking the Recall score

dtree2 = DecisionTreeClassifier(random_state=1, max_depth=4)

# training the decision tree model with oversampled training set

dtree2.fit(X_train_over, y_train_over)

# Predicting the target for train and test set

pred_train = dtree2.predict(X_train_over)

pred_test = dtree2.predict(X_test)

# Checking recall score on oversampled train set and the original test set

print(recall_score(y_train_over, pred_train))

print(recall_score(y_test, pred_test))

Undersampling train data using RandomUnderSampler

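# sampling_strategy=1 drops random majority rows until minority/majority = 1 (equal class counts)

rm = RandomUnderSampler(sampling_strategy=1, random_state=1)

# Resample the training set only; the test set is left untouched

X_train_un, y_train_un = rm.fit_resample(X_train, y_train)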

print("Before Under Sampling, count of label '1': {}".format(sum(y_train == 1)))

print("Before Under Sampling, count of label '0': {} \n".format(sum(y_train == 0)))

print("After Under Sampling, count of label '1': {}".format(sum(y_train_un == 1)))

print("After Under Sampling, count of label '0': {} \n".format(sum(y_train_un == 0)))

print("After Under Sampling, the shape of train_X: {}".format(X_train_un.shape))

print("After Under Sampling, the shape of train_y: {} \n".format(y_train_un.shape))
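For a binary problem, imbalanced-learn interprets a float `sampling_strategy` as the desired minority/majority ratio after resampling. Undersampling therefore shrinks the majority class to about `n_minority / sampling_strategy`, and oversampling grows the minority class to about `n_majority * sampling_strategy`. The hypothetical helper below does this arithmetic. The truncation to an integer is an assumption of this sketch, and the class sizes are made up for illustration.

```python
def target_counts(n_minority, n_majority, sampling_strategy):
    """Hypothetical helper: (minority, majority) sizes after resampling with a float ratio."""
    # Undersampling keeps every minority row and shrinks the majority class
    under = (n_minority, int(n_minority / sampling_strategy))
    # Oversampling keeps every majority row and grows the minority class
    over = (int(n_majority * sampling_strategy), n_majority)
    return {"undersample": under, "oversample": over}

# Hypothetical class sizes: 100 minority rows and 400 majority rows
print(target_counts(n_minority=100, n_majority=400, sampling_strategy=1))
# {'undersample': (100, 100), 'oversample': (400, 400)}
print(target_counts(n_minority=100, n_majority=400, sampling_strategy=0.5))
# {'undersample': (100, 200), 'oversample': (200, 400)}
```

With `sampling_strategy=1`, as in the code above, both approaches end with equally sized classes. They differ in whether the total grows or shrinks.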

Building a Decision Tree Model with Undersampled Data and checking the Recall score

dtree3 = DecisionTreeClassifier(random_state=1, max_depth=4)

# training the decision tree model with undersampled training set

dtree3.fit(X_train_un, y_train_un)

# Predicting the target for train and test set

pred_train = dtree3.predict(X_train_un)

pred_test = dtree3.predict(X_test)

# Checking recall score on undersampled train set and the original test set

print(recall_score(y_train_un, pred_train))

print(recall_score(y_test, pred_test))

Observations

The recall score changes after oversampling and undersampling the training data.

In this experiment, the model trained on the undersampled data gave the best recall score of the three decision tree models.

Performance may be improved further with hyperparameter tuning.

Summary

We saw how imbalanced data can be handled by oversampling and undersampling, along with a Python implementation of each.

Thanks for your time.
