A Guide to Integrating Pythia API with RAG-based Systems Using Wisecube Python SDK

Retrieval-Augmented Generation (RAG) systems ground their outputs in an external knowledge base to improve the accuracy of generative AI. Although they suit a range of applications, including customer service, risk management, and research, RAG systems are still prone to AI hallucinations.

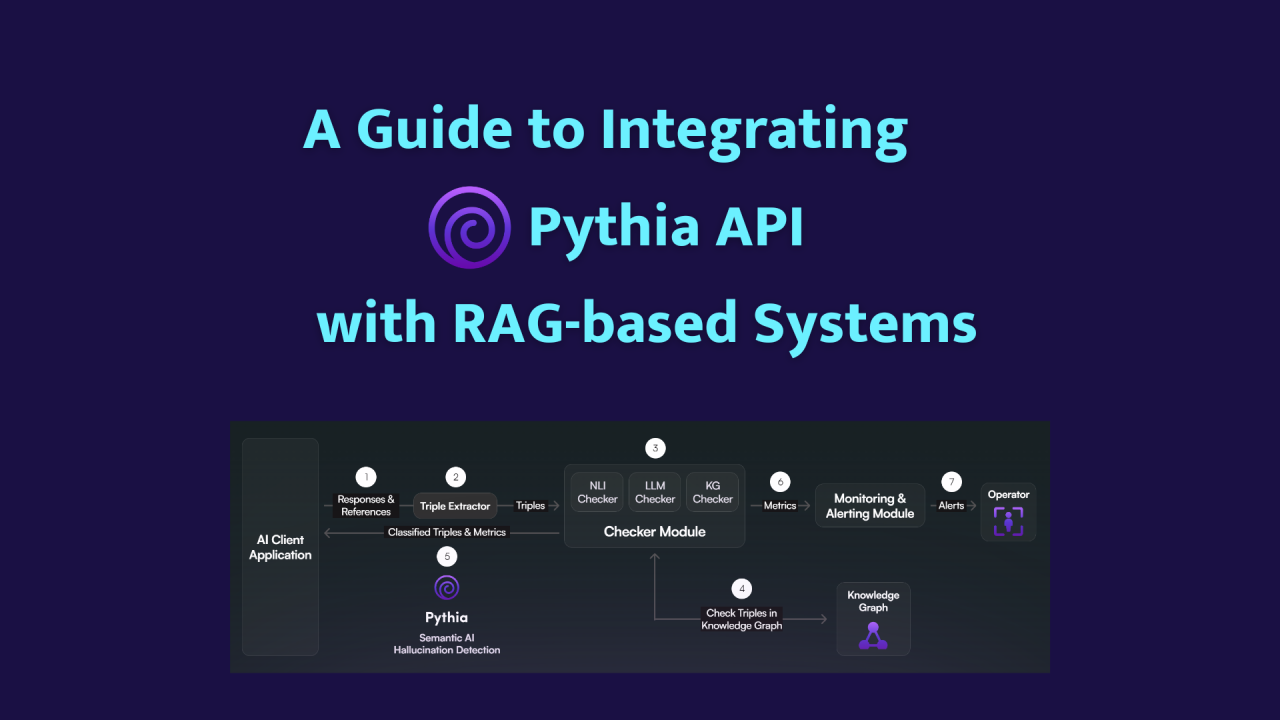

Wisecube's Pythia is a hallucination detection tool that flags hallucinations in real time, supporting continuous improvement and more reliable RAG outputs. Pythia integrates with RAG-based systems and generates hallucination reports for their outputs, guiding developers to take timely corrective measures.

In this blog post, we’ll explore the step-by-step process of integrating Pythia in RAG systems. We’ll also have a look at the benefits of using Pythia for hallucination detection in RAG systems.

What is RAG?

RAG systems improve the accuracy of LLMs by referencing an external knowledge base outside of their training data. The external knowledge base makes RAG systems context-aware and provides a source of factual information. RAG systems usually use vector databases to store large volumes of data and retrieve relevant information quickly.
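To make the retrieval step concrete, here is a minimal sketch of similarity-based lookup in plain Python. It is an illustration only: the three-dimensional vectors are made up, and the helper names `cosine_similarity` and `retrieve` are hypothetical. A real vector database uses learned embeddings and an optimized index rather than a full sort.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve(query_vector, store, k=2):
    """Return the k texts whose vectors are most similar to the query."""
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]

# Toy "embeddings", invented for illustration; real ones have hundreds of dimensions.
store = [
    ("Diabetes affects how the body uses blood sugar.", [0.9, 0.1, 0.0]),
    ("Insulin is a hormone made by the pancreas.", [0.7, 0.3, 0.1]),
    ("Chroma is an open-source vector database.", [0.0, 0.2, 0.9]),
]

top_chunks = retrieve([1.0, 0.0, 0.0], store, k=2)
print(top_chunks)
```

The two medical sentences rank highest because their vectors point in nearly the same direction as the query vector, which is the property a retriever relies on.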

Since RAG-based systems rely on external knowledge bases, the accuracy of the knowledge base significantly affects the quality of RAG outputs. A biased knowledge base can lead to nonsensical outputs and perpetuate bias, producing unfair and misleading LLM responses.

Let's have a look at the step-by-step process of integrating Pythia with RAG-based systems to detect hallucinations in RAG outputs.

Getting an API Key

You need a unique API key to authenticate Wisecube Pythia and integrate it into RAG systems. Fill out the API key request form to get your unique Wisecube API key.

Installing Wisecube Python SDK

Next, you need to install the Wisecube Python SDK on your machine or in your cloud-based Python IDE, depending on what you’re using. Run the following command in your terminal to install Wisecube:

pip install wisecube

Install Relevant Libraries from LangChain

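Developing a RAG system requires language processing libraries and a vector database, which we access through LangChain. Run the following command in a notebook cell to install the necessary libraries (the %pip magic works in Jupyter-style environments; in a terminal, use pip without the % prefix):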

%pip install --upgrade --quiet wisecube langchain langchain-community langchainhub langchain-openai langchain-chroma bs4

Authenticate API Key

Both keys need to be supplied before you can call either service. Since we’re using an OpenAI model, we also need an OpenAI API key to implement an LLM in our RAG system. The getpass module lets you enter the keys without echoing them to the screen, and os stores the OpenAI key in an environment variable, where the LangChain OpenAI integration looks for it:

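import os
from getpass import getpass

# Prompt for both keys without echoing them to the screen.
API_KEY = getpass("Wisecube API Key:")
OPENAI_API_KEY = getpass("OpenAI API Key:")

# The LangChain OpenAI integration reads the key from this environment variable.
os.environ["OPENAI_API_KEY"] = OPENAI_API_KEY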
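If you rerun the notebook often, retyping keys gets tedious. One possible variation, sketched below, reads a key from an environment variable first and only prompts when it is missing. The helper `load_secret` and the variable name `WISECUBE_API_KEY` are hypothetical choices for this example, not something the Wisecube SDK defines.

```python
import os
from getpass import getpass

def load_secret(name, prompt, env=None, ask=getpass):
    """Return env[name] if it is set and non-empty; otherwise prompt for it."""
    env = os.environ if env is None else env
    value = env.get(name)
    if not value:
        value = ask(prompt)
    return value

# A plain dict stands in for the real environment so the example runs anywhere.
demo_env = {"WISECUBE_API_KEY": "demo-key"}  # hypothetical variable name
key = load_secret("WISECUBE_API_KEY", "Wisecube API Key:", env=demo_env)
print(key == "demo-key")
```

In real use you would call `load_secret("WISECUBE_API_KEY", "Wisecube API Key:")` and let it fall back to the interactive prompt.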

Creating an OpenAI Instance

Next, we create a ChatOpenAI instance and specify the model. In the following code, we assign the instance to the llm variable and specify the gpt-3.5-turbo-0125 model for our system. You can substitute another OpenAI chat model, such as GPT-4 or GPT-4 Turbo; ChatOpenAI works with chat models only, so image, speech, embedding, and moderation models do not apply here.

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(model="gpt-3.5-turbo-0125")

Creating a RAG-based System in Python

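Since this tutorial focuses on integrating Pythia with RAG systems, we’ll implement a simple RAG pipeline using LangChain. However, you can use the same approach to detect hallucinations with Pythia in more complex RAG systems.

The following code snippet builds the RAG system: it loads a web page, splits it into overlapping chunks, indexes the chunks in a Chroma vector store, and then chains a retriever, a prompt, the LLM, and an output parser: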

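from langchain import hub
from langchain_chroma import Chroma
from langchain_community.document_loaders import WebBaseLoader
from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough
from langchain_openai import OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Load, chunk, and index the contents of the web page.
loader = WebBaseLoader("https://my.clevelandclinic.org/health/diseases/7104-diabetes")
docs = loader.load()
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
splits = text_splitter.split_documents(docs)
vectorstore = Chroma.from_documents(documents=splits, embedding=OpenAIEmbeddings())

# Retrieve and generate using the relevant snippets of the page.
retriever = vectorstore.as_retriever()
prompt = hub.pull("rlm/rag-prompt")

def format_docs(docs):
    # Join the retrieved chunks into a single context string.
    return "\n\n".join(doc.page_content for doc in docs)

# The retriever fills "context"; the raw question passes through unchanged.
rag_chain = (
    {"context": retriever | format_docs, "question": RunnablePassthrough()}
    | prompt
    | llm
    | StrOutputParser()
)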
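The chunk_size and chunk_overlap settings are easier to reason about with a small example. The sketch below is a simplified fixed-window splitter written for illustration; it is not how RecursiveCharacterTextSplitter works internally (that class also tries to break on separators such as paragraphs and sentences), and the function name `chunk_text` is hypothetical.

```python
def chunk_text(text, chunk_size=1000, chunk_overlap=200):
    """Split text into windows of chunk_size characters that share chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks

sample = "abcdefghijklmnopqrstuvwxyz"
chunks = chunk_text(sample, chunk_size=10, chunk_overlap=4)
print(chunks)
```

Each chunk starts with the last four characters of the previous one. In a RAG pipeline, that overlap reduces the chance that a sentence relevant to the query is cut in half at a chunk boundary.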

Using RAG to Generate Output

You can now query your RAG system to generate output. The following code stores the user query in the question variable, fetches the supporting documents from the retriever as the reference, and gets the generated answer from the rag_chain defined in the previous step:

question = "What is diabetes?"

reference = retriever.invoke(question)

response = rag_chain.invoke(question)
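Note that the retriever returns a list of document chunks rather than a single string. If you want to pass more than one chunk to a downstream check, one option is to join their text first. The sketch below assumes only that each document exposes a page_content attribute, as LangChain documents do; `FakeDocument` and `build_reference` are hypothetical names used so the example runs without any API calls.

```python
from dataclasses import dataclass

@dataclass
class FakeDocument:
    """Stand-in for a retrieved document; only page_content is modeled."""
    page_content: str

def build_reference(docs, separator="\n\n", max_chars=None):
    """Join the page_content of all retrieved documents into one reference string."""
    text = separator.join(doc.page_content for doc in docs)
    return text if max_chars is None else text[:max_chars]

# Placeholder chunks, not real retrieved text.
retrieved = [
    FakeDocument("First retrieved chunk."),
    FakeDocument("Second retrieved chunk."),
]
reference_text = build_reference(retrieved)
print(reference_text)
```

The optional max_chars cap is there because a longer reference means a larger request; whether a given service accepts the full text is something to confirm against its documentation.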

Using Pythia to Detect Hallucinations

Finally, you can use Pythia to detect hallucinations in your RAG-generated outputs. You just need to pass ask_pythia the reference and response obtained in the previous step, along with the question. Pythia then classifies each claim as entailment, contradiction, neutral, or missing facts:

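from wisecube_sdk.client import WisecubeClient

# Create the Pythia client with the Wisecube API key collected earlier.
qa_client = WisecubeClient(API_KEY).client
# Arguments: reference text, RAG response, and the original question.
response_from_sdk = qa_client.ask_pythia(reference[0].page_content, response, question)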

Pythia’s response after hallucination detection in the RAG output is shown in the screenshot below. It extracts claims as knowledge triplets and assigns each claim to one of the classes: entailment, contradiction, neutral, or missing facts.

Finally, it highlights the accuracy of the response and the percentage contribution of each class.
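To illustrate what a per-class percentage breakdown means, here is a small sketch that computes each class's share from a list of claim labels. The input format is hypothetical and chosen for this example; it does not mirror the actual structure of Pythia's response, and it does not reproduce how Pythia computes its accuracy figure.

```python
from collections import Counter

# Class names as used in this article.
CLASSES = ("entailment", "contradiction", "neutral", "missing facts")

def class_percentages(labels):
    """Return the percentage of claims that fall into each class."""
    counts = Counter(labels)
    total = len(labels)
    if total == 0:
        return {name: 0.0 for name in CLASSES}
    return {name: 100.0 * counts[name] / total for name in CLASSES}

# Hypothetical labels for four extracted claims.
labels = ["entailment", "entailment", "entailment", "contradiction"]
shares = class_percentages(labels)
print(shares)
```

With three of four claims labeled entailment, that class accounts for 75% of the claims and contradiction for the remaining 25%.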

Benefits of Integrating Pythia with RAG-based Systems

Pythia’s ability to integrate with RAG-based systems enables real-time hallucination detection in RAG outputs, which enhances user trust and speeds up research. Integrating Pythia with RAG-based systems offers the following benefits:

Advanced Hallucination Detection

Pythia breaks text down into knowledge triplets, which makes its hallucination detection context-aware and accurate. Once Pythia detects hallucinations in a RAG output, it generates an audit report to guide developers toward improving the system.

Seamless Integration With LangChain

Pythia integrates easily with the LangChain ecosystem. This lets developers use Pythia's capabilities alongside their existing LangChain components with little extra effort.

Customizable Detection

Pythia can be configured to suit specific use cases using the LangChain ecosystem, allowing improved flexibility and increased accuracy in tailored RAG systems.

Real-time Analysis

Pythia detects and flags hallucinations in real time. Real-time monitoring and analysis allow immediate corrective action, supporting the improvement of AI systems over time.

Enhanced Trust in AI

Pythia reduces the risk of misinformation in AI responses, supporting accurate outputs and stronger user trust in AI.

Advanced Privacy

Pythia protects user information so RAG developers can leverage its capabilities without worrying about their data security.

Request your API key today and uncover the true potential of your RAG-based systems with continuous hallucination monitoring and analysis.

* The article was originally published on Pythia's website.
