Global Stock Price Prediction using QDeep Learning

Aniruddha Kasar

Jr Data Scientist | Ex-SCS Technology | Applied Machine Learning |Data analytics |Business Analysis

Stock Price Prediction Using Q-Deep Learning: An In-Depth Analysis

Introduction

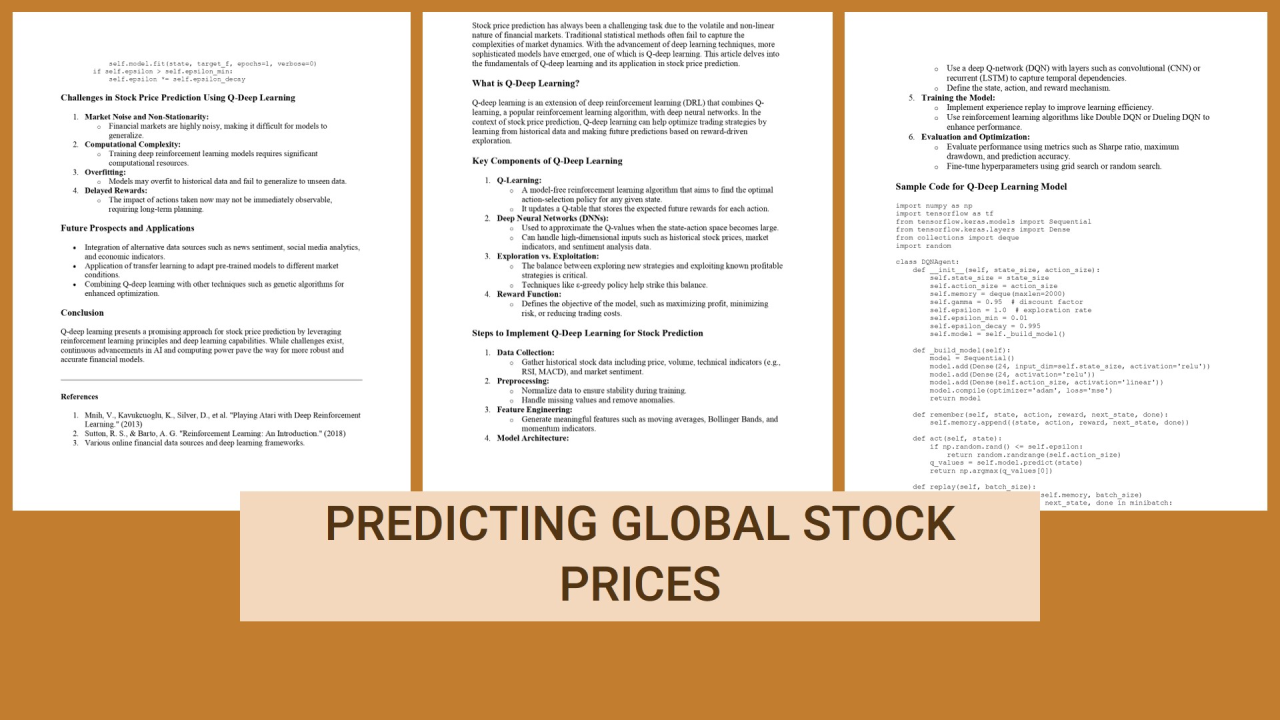

Stock price prediction has always been a challenging task due to the volatile and non-linear nature of financial markets. Traditional statistical methods often fail to capture the complexities of market dynamics. With the advancement of deep learning techniques, more sophisticated models have emerged, one of which is Q-deep learning. This article delves into the fundamentals of Q-deep learning and its application in stock price prediction.

What is Q-Deep Learning?

Q-deep learning is an extension of deep reinforcement learning (DRL) that combines Q-learning, a popular reinforcement learning algorithm, with deep neural networks. In the context of stock price prediction, Q-deep learning can help optimize trading strategies by learning from historical data and making future predictions based on reward-driven exploration.

Key Components of Q-Deep Learning

Steps to Implement Q-Deep Learning for Stock Prediction

Sample Code for Q-Deep Learning Model

import numpy as np

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from collections import deque

import random

?

class DQNAgent:

??? def init(self, state_size, action_size):

??????? self.state_size = state_size

??????? self.action_size = action_size

??????? self.memory = deque(maxlen=2000)

??????? self.gamma = 0.95? # discount factor

??????? self.epsilon = 1.0? # exploration rate

??????? self.epsilon_min = 0.01

??????? self.epsilon_decay = 0.995

??????? self.model = self._build_model()

?

??? def buildmodel(self):

??????? model = Sequential()

??????? model.add(Dense(24, input_dim=self.state_size, activation='relu'))

??????? model.add(Dense(24, activation='relu'))

??????? model.add(Dense(self.action_size, activation='linear'))

领英推荐

??????? model.compile(optimizer='adam', loss='mse')

??????? return model

?

?? ?def remember(self, state, action, reward, next_state, done):

??????? self.memory.append((state, action, reward, next_state, done))

?

??? def act(self, state):

??????? if np.random.rand() <= self.epsilon:

??????????? return random.randrange(self.action_size)

??????? q_values = self.model.predict(state)

??????? return np.argmax(q_values[0])

?

??? def replay(self, batch_size):

??????? minibatch = random.sample(self.memory, batch_size)

??????? for state, action, reward, next_state, done in minibatch:

?????????? ?target = reward

??????????? if not done:

??????????????? target = (reward + self.gamma * np.amax(self.model.predict(next_state)[0]))

??????????? target_f = self.model.predict(state)

??????????? target_f[0][action] = target

??????????? self.model.fit(state, target_f, epochs=1, verbose=0)

??????? if self.epsilon > self.epsilon_min:

??????????? self.epsilon *= self.epsilon_decay

Challenges in Stock Price Prediction Using Q-Deep Learning

Future Prospects and Applications

Conclusion

Q-deep learning presents a promising approach for stock price prediction by leveraging reinforcement learning principles and deep learning capabilities. While challenges exist, continuous advancements in AI and computing power pave the way for more robust and accurate financial models.

References

?