Fast Full-Text Search: VectorDB + Apache Iceberg

Product launch with interactive, hands-on demos. Register for this free virtual event.

Overview

Today, organizations estimate that more than 90% of their enterprise data is “frozen” in data lakes (such as Iceberg tables) and is unusable for powering fast analytics or intelligent applications. The result is frustrated users who face sluggish analytics and application performance whenever they work with data from Iceberg.

So, how do you unfreeze the data locked in lakehouses?

Join us on June 26, where we will be unveiling new product features and capabilities in SingleStore — including a novel solution to ingest and process data directly from Iceberg tables — powering your fast, intelligent applications.

Say goodbye to complex ETL, data thawing or replication. Users can now drive subsecond analytics and power low-latency applications all while ensuring security, reliability and data governance.

We will feature interactive, hands-on demos on how to:

- Unfreeze your lakehouse to power low-latency applications with native integration for Apache Iceberg

- Perform faster vector search and improved full-text search

- Scale your apps and decrease complexity with Autoscaling

- Deploy SingleStore in your own virtual private cloud (VPC)

And much more. Mark your calendars for this virtual event — and get ahead in building fast, intelligent applications.

Axiom Mathematics - Research Scientist - Creator of The Aeon Ship

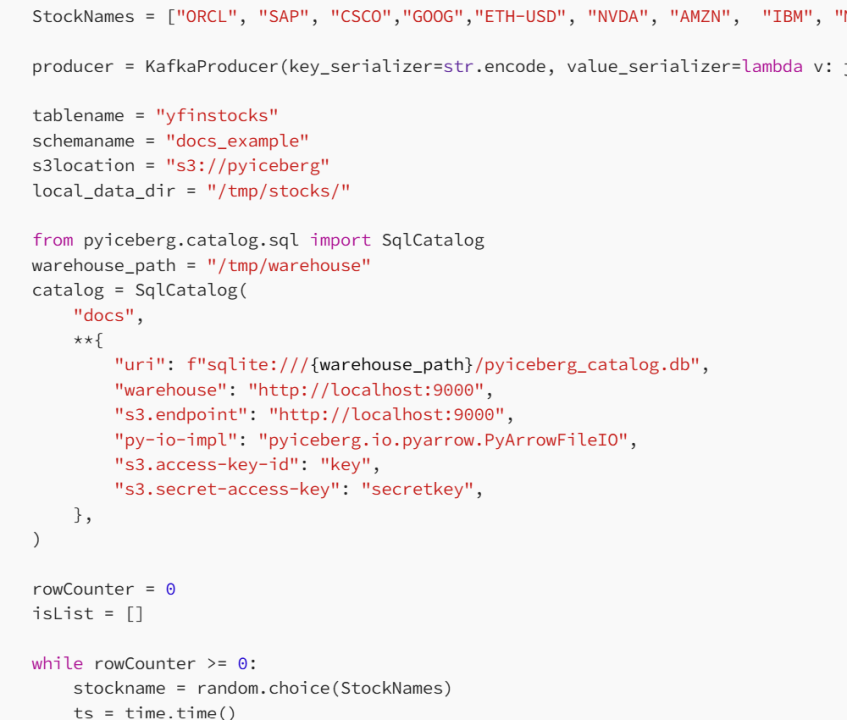

10 months ago

Customizing the Script

- Table and Output Paths: Adjust the table_path and output_path variables to point to your actual data lake paths.
- Transformations: Customize the transform_data() function to apply transformations relevant to your dataset.
- Data Format: Change the data format in the load_data() and save_data() functions if you are working with different formats.

This modular approach lets you extend and adapt the script for more complex data transformation and processing tasks, which makes it a useful starting point for unfreezing and analyzing enterprise data locked in lakehouses. Would you also like the code in Mathematica?
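One way to make the path and format adjustments described above explicit is to pull them into a small settings object. The sketch below is only an illustration: JobConfig and its field names are hypothetical and not part of the original script, the functions expect an existing Spark session and DataFrame to be passed in, and they use only the reader and writer chains the script already relies on (read.format().load() and write.format().mode().save()).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JobConfig:
    """Hypothetical settings object; not part of the original script."""
    table_path: str
    output_path: str
    input_format: str = "iceberg"
    output_format: str = "parquet"
    write_mode: str = "overwrite"


def load_data(spark, config):
    # Same reader chain as the original load_data(), with the format taken from config.
    return spark.read.format(config.input_format).load(config.table_path)


def save_data(df, config):
    # Same writer chain as the original save_data(), with format and mode taken from config.
    df.write.format(config.output_format).mode(config.write_mode).save(config.output_path)
```

With this shape, switching the output from Parquet to ORC would be a one-field change, for example JobConfig(table_path="s3://your-datalake-path/iceberg-table", output_path="s3://your-datalake-path/transformed-data", output_format="orc").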

Axiom Mathematics - Research Scientist - Creator of The Aeon Ship

10 months ago

Explanation

- Initialize Spark Session: initialize_spark() initializes a Spark session with a specified application name.
- Load Data from Iceberg Tables: load_data() loads data from the specified Iceberg table path using PySpark.
- Transform Data: transform_data() applies data transformations, such as filling null values, adding new columns, and computing new values based on existing columns.
- Save Transformed Data: save_data() saves the transformed data to the specified output path in Parquet format.
- Main Function: main() orchestrates the process by calling the initialization, data loading, transformation, and saving functions in sequence.

Axiom Mathematics - Research Scientist - Creator of The Aeon Ship

10 months ago

Part 2

    # Continues from Part 1, which defines initialize_spark(), load_data(),
    # and transform_data().

    # Save Transformed Data
    def save_data(df, output_path):
        df.write.format("parquet").mode("overwrite").save(output_path)

    # Main Function
    def main(table_path, output_path):
        # Initialize Spark
        spark = initialize_spark()
        try:
            # Load Data
            df = load_data(spark, table_path)

            # Transform Data
            df_transformed = transform_data(df)

            # Save Transformed Data
            save_data(df_transformed, output_path)
        finally:
            # Stop Spark Session, even if a step above fails
            spark.stop()

    # Define Paths
    table_path = "s3://your-datalake-path/iceberg-table"
    output_path = "s3://your-datalake-path/transformed-data"

    # Execute Main Function
    if __name__ == "__main__":
        main(table_path, output_path)

Axiom Mathematics - Research Scientist - Creator of The Aeon Ship

10 months ago

Part 1

    from pyspark.sql import SparkSession
    from pyspark.sql.functions import col, when, lit

    # Initialize Spark Session
    def initialize_spark(app_name="UnfreezeDataLake"):
        spark = SparkSession.builder \
            .appName(app_name) \
            .getOrCreate()
        return spark

    # Load Data from Iceberg Tables
    # (requires the Iceberg Spark runtime to be available to the Spark session)
    def load_data(spark, table_path):
        df = spark.read.format("iceberg").load(table_path)
        return df

    # Transform Data
    def transform_data(df):
        # Example transformation: fill null values, add computed columns, etc.
        df_transformed = df.fillna({'column1': 0, 'column2': 'unknown'}) \
            .withColumn('new_column', col('column1') * 2) \
            .withColumn('status', when(col('column1') > 0, lit('active')).otherwise(lit('inactive')))
        return df_transformed
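To make the effect of transform_data() concrete, here is a plain-Python mirror of the same three steps applied to a couple of rows. It does not use Spark: the sample rows are made up, and transform_rows is a hypothetical helper that only imitates the fillna / withColumn / when logic for illustration.

```python
def transform_rows(rows):
    """Plain-Python imitation of transform_data(); hypothetical helper, not Spark."""
    defaults = {"column1": 0, "column2": "unknown"}
    out = []
    for row in rows:
        new_row = dict(row)
        # Step 1: replace missing values with per-column defaults (like fillna).
        for name, default in defaults.items():
            if new_row.get(name) is None:
                new_row[name] = default
        # Step 2: derive new_column from column1.
        new_row["new_column"] = new_row["column1"] * 2
        # Step 3: label the row based on the sign of column1.
        new_row["status"] = "active" if new_row["column1"] > 0 else "inactive"
        out.append(new_row)
    return out


# Made-up sample rows: one complete, one with missing values.
sample = [
    {"column1": 5, "column2": "a"},
    {"column1": None, "column2": None},
]
for row in transform_rows(sample):
    print(row)
```

The first row comes out with new_column 10 and status 'active'. The second has its missing values replaced by 0 and 'unknown', so it gets new_column 0 and status 'inactive'.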

Axiom Mathematics - Research Scientist - Creator of The Aeon Ship

10 months ago

To address the problem of frozen data in data lakes and Iceberg tables, we can develop a Python script built from modular functions and efficient data processing techniques. Here’s a Python code example using the PySpark library, which is commonly used for processing large datasets in data lakes. The code is split into modular functions for readability and maintainability.