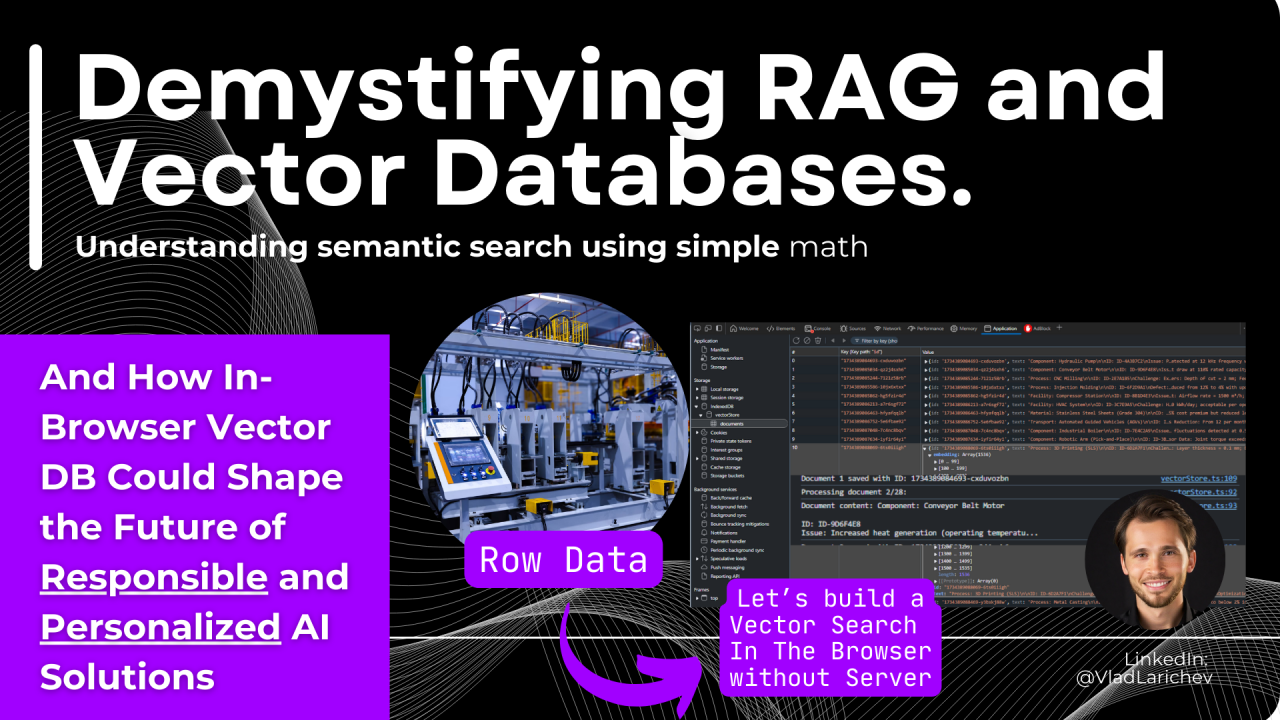

Demystifying RAG and Vector Databases: How Browser-Based Vector Search Can Shape the Future of Personalized and Responsible AI-Powered Applications

Vlad Larichev

Industrial AI Lead @Accenture IX | Software Engineer | Keynote Speaker | Research Enthusiast | Building with LLMs since 2020 | Helping Industries Scale AI with Impact - Beyond the Hype and Marketing Fluff.

2024 was the year Retrieval Augmented Generation (RAG) dominated most expert discussions, changing how we interact with AI by combining large language models (LLMs) with external knowledge sources.

Many people still view RAG and vector search as a highly technical and complex topic. In reality, vector search is a simple yet elegant solution, and understanding its core principles reveals both the strengths and weaknesses of current RAG-based applications.

In this article, we will break down the key concepts behind RAG and vector databases and implement a complete vector search from scratch, directly in YOUR browser, using the browser's built-in IndexedDB API, to better understand how it works.

Finally, I’d like to explore a future where a vector database becomes a standard browser feature. It could offer an alternative to the "OpenAI's Memory" concept, securely managing your personal memory fingerprint and connecting AI-powered applications from different vendors, while prioritizing privacy and security.

Ready?

Understanding RAG and Vector Databases: How Retrieval Augmented Generation Finds the Data

RAG pairs a language model with an external repository of information. Instead of the model relying solely on what it has “memorized” during training, RAG dynamically fetches the most relevant documents or data points from a stored knowledge base.

To handle this efficiently, we need a system that translates words into numbers, allowing machines to work with them effectively. This translation involves creating embeddings: mathematical representations of text as vectors in a multi-dimensional space, which are stored in a vector database.

When you run a query, the vector database compares the query’s embedding to the embeddings of your documents. The result is a “semantic search” that finds contextually similar content, even if the exact keywords don’t match.

To simplify, imagine creating vector representations for the words "fire" and "truck." When adding these two vectors mathematically, the resulting vector might approximate the vector representation of a "firetruck." (amazing, right?)

However, it could just as well represent a "truck on fire", which shows that the way words are translated into numbers (embeddings) plays a crucial role in determining meaning. Other techniques take the surrounding tokens into account to capture the context of a sentence, but for now, let's focus on the meaning of individual words.
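To make the "fire" + "truck" idea tangible, here is a toy sketch. The three-dimensional vectors are invented purely for illustration (real embeddings have hundreds of dimensions and no human-readable axes), and addVectors is a hypothetical helper name.

```javascript
// Toy "embeddings": the numbers are made up for illustration only.
const fire = [1, 0, 0.5];
const truck = [0, 1, 0.5];

// Element-wise addition of two vectors of equal length.
function addVectors(a, b) {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same length");
  }
  return a.map((value, i) => value + b[i]);
}

const firePlusTruck = addVectors(fire, truck);
console.log(firePlusTruck); // [1, 1, 1]
```

Whether the result lands closer to "firetruck" or to "truck on fire" depends entirely on the embedding model, which is exactly the ambiguity described above.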

The beauty of vector databases is their ability to group data by the “meaning” behind words. For instance, if you search “guidelines for financial compliance,” a vector database can find documents discussing “banking regulations” and “financial oversight” even if those exact words aren’t used.

This semantic power lies at the heart of modern RAG systems.

A very similar process happens on the other side when you ask a question: an embedding model translates your query into a vector representation, the database is searched for matching vectors, and the corresponding text is fed to the LLM as context.
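The last step, feeding the matches to the LLM as context, can be as simple as string assembly. Here is a minimal sketch, assuming the retrieved chunks are already available as plain strings. The function name buildPrompt and the prompt wording are my own illustrative choices, not a fixed convention.

```javascript
// Combine the user's question with the retrieved text chunks into one prompt.
// Both the name and the prompt template are hypothetical.
function buildPrompt(question, retrievedChunks) {
  // Number the chunks so the model (and the reader) can tell them apart.
  const context = retrievedChunks
    .map((chunk, i) => `[${i + 1}] ${chunk}`)
    .join("\n");

  return [
    "Answer the question using only the context below.",
    "",
    "Context:",
    context,
    "",
    `Question: ${question}`,
  ].join("\n");
}

console.log(
  buildPrompt("Which pump overheated?", [
    "Pump P-101 shut down after a temperature alarm.",
    "Pump P-102 passed inspection.",
  ])
);
```

The resulting string is what gets sent to the language model, so the model answers from your documents instead of only from what it memorized during training.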

Let's build our own vector search solution - directly in the browser in 5 steps.

Enough theory - let’s implement it in the browser as a speedrun.

In the second article, we will implement it step by step together, but for now, let’s cover the main components.

To realize this process, we will need two pipelines.

For the browser implementation, we can easily build both pipelines in just a few lines of code using { pipeline } from the Hugging Face Transformers.js library. Pipelines make it straightforward to handle complex AI tasks directly in the browser.

1) Selecting Models and creating the Pipelines

We will use two models: one for feature extraction and another for embedding generation. These lightweight models ensure efficient operation in a browser environment without relying on server-side infrastructure:
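Here is a minimal sketch of the embedding side only. In Transformers.js, the "feature-extraction" task is the one that produces embeddings. The package name @huggingface/transformers and the model ID Xenova/all-MiniLM-L6-v2 are my assumptions for illustration, not necessarily what the original project uses, and the bare import specifier assumes a bundler or an import map.

```javascript
// Assumption: an illustrative small embedding model, not necessarily the
// one used in the article's project.
const MODEL_ID = "Xenova/all-MiniLM-L6-v2";

let extractorPromise = null;

// Lazily create the feature-extraction pipeline once and reuse it,
// because loading a model is the expensive part.
function getExtractor() {
  if (!extractorPromise) {
    extractorPromise = import("@huggingface/transformers").then(
      ({ pipeline }) => pipeline("feature-extraction", MODEL_ID)
    );
  }
  return extractorPromise;
}

// Convert the tensor returned by the pipeline into a plain array of numbers.
function toVector(output) {
  return Array.from(output.data);
}

// Turn a piece of text into one embedding vector.
async function embed(text) {
  const extractor = await getExtractor();
  // Mean pooling collapses the per-token vectors into a single vector;
  // normalize: true scales it to unit length.
  const output = await extractor(text, { pooling: "mean", normalize: true });
  return toVector(output);
}
```

Calling embed("some text") returns a promise that resolves to a plain array, which is convenient for storing in the browser in the next step.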

2) Creating a Vector Database in the Browser

Now we need a database.

Instead of a traditional server-based vector database, we’ll use the browser’s client-side storage via IndexedDB. IndexedDB is a low-level API for client-side storage of significant amounts of structured data, including files/blobs.

Using the extractor pipeline, we create embeddings and store chunks of text along with their vector representations in our DB. The format is a simple table with two columns: the text and its vector representation. Yes, that's all we'll need!

Let’s call this our vectorStore:
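Here is a minimal sketch of such a store using the standard IndexedDB API. The database name vectorStore comes from the text above. The object store name "chunks", the auto-incremented id key and the helper function names are hypothetical choices for illustration.

```javascript
const DB_NAME = "vectorStore"; // name used in this article
const STORE_NAME = "chunks";   // hypothetical object store name

// Open (or create) the database with one object store for our records.
function openVectorStore() {
  return new Promise((resolve, reject) => {
    const request = indexedDB.open(DB_NAME, 1);
    // Runs only when the database is created or its version is bumped.
    request.onupgradeneeded = () => {
      request.result.createObjectStore(STORE_NAME, {
        keyPath: "id",
        autoIncrement: true,
      });
    };
    request.onsuccess = () => resolve(request.result);
    request.onerror = () => reject(request.error);
  });
}

// The two "columns": the text and its vector (copied into a plain array).
function makeRecord(text, vector) {
  return { text, vector: Array.from(vector) };
}

// Store one record; resolves once the transaction has completed.
function addRecord(db, record) {
  return new Promise((resolve, reject) => {
    const tx = db.transaction(STORE_NAME, "readwrite");
    tx.objectStore(STORE_NAME).add(record);
    tx.oncomplete = () => resolve();
    tx.onerror = () => reject(tx.error);
  });
}

// Read every stored record back, e.g. to run a similarity search over them.
function getAllRecords(db) {
  return new Promise((resolve, reject) => {
    const request = db
      .transaction(STORE_NAME, "readonly")
      .objectStore(STORE_NAME)
      .getAll();
    request.onsuccess = () => resolve(request.result);
    request.onerror = () => reject(request.error);
  });
}
```

In the browser, usage would look like: const db = await openVectorStore(); await addRecord(db, makeRecord(text, vector)); nothing here touches a server.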

3) Implementing Similarity Search

Now comes the fun part: implementing similarity search using simple school math!

Let’s define a cosineSimilarity function to measure how similar two vectors are by calculating the cosine of the angle between them:
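A minimal sketch of such a function could look like this. Returning 0 for a zero-length vector is a convention I chose to avoid dividing by zero, not something the math prescribes.

```javascript
// Cosine of the angle between two vectors of equal length.
function cosineSimilarity(a, b) {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same length");
  }
  let dot = 0;   // sum of element-wise products
  let normA = 0; // squared length of a
  let normB = 0; // squared length of b
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  // Assumption: treat a zero vector as "not similar to anything".
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

console.log(cosineSimilarity([1, 0], [1, 0]));  // 1
console.log(cosineSimilarity([1, 0], [0, 1]));  // 0
console.log(cosineSimilarity([1, 0], [-1, 0])); // -1
```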

Here’s how cosine similarity works step by step:
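As a small worked example with two made-up 2-dimensional vectors, A = (3, 4) and B = (4, 3): first compute the dot product, then the length of each vector, then divide the dot product by the product of the lengths.

```latex
\begin{aligned}
A \cdot B &= 3 \cdot 4 + 4 \cdot 3 = 24 \\
\|A\| &= \sqrt{3^2 + 4^2} = 5, \qquad \|B\| = \sqrt{4^2 + 3^2} = 5 \\
\cos\theta &= \frac{A \cdot B}{\|A\|\,\|B\|} = \frac{24}{5 \cdot 5} = 0.96
\end{aligned}
```

A score of 0.96 means the two vectors point in almost the same direction.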

What does this similarity mean?

1: the vectors point in the same direction (the embeddings are as similar as they can be),

0: the vectors are unrelated (orthogonal, 90° apart),

-1: the vectors are opposites (point in opposite directions).

In a vector database, cosine similarity is used to compare a query vector with the stored vectors and to return the items with the highest similarity scores.
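For a small local store, that comparison can be a plain loop over all records. Here is a minimal brute-force sketch, assuming each record has the shape { text, vector } used in this article. The name searchSimilar and the topK parameter are hypothetical.

```javascript
// Cosine of the angle between two vectors of equal length.
function cosineSimilarity(a, b) {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // convention for zero vectors
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Score every record against the query and keep the topK best matches.
function searchSimilar(queryVector, records, topK = 3) {
  return records
    .map((record) => ({
      text: record.text,
      score: cosineSimilarity(queryVector, record.vector),
    }))
    .sort((a, b) => b.score - a.score) // highest score first
    .slice(0, topK);
}

// Toy 2-dimensional vectors, invented for illustration.
const records = [
  { text: "heat exchanger report", vector: [1, 0] },
  { text: "cafeteria menu", vector: [0, 1] },
  { text: "cooling system log", vector: [1, 1] },
];
console.log(searchSimilar([1, 0.1], records, 2).map((r) => r.text));
// ["heat exchanger report", "cooling system log"]
```

Scanning every record is fine for a few thousand chunks in the browser. Larger collections typically need an index so that not every vector has to be compared.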

Isn't it amazing that our AI agents use simple school math to understand our language?

4) Uploading and Testing with Synthetic Data

To test it, I’ve built a simple web project, VectorVault, where you can upload synthetic data such as machine reports or incident logs (more about it in a following article).

The documents will be processed and stored in the local vectorStore database we just created.

When new data is added, it is split into chunks, and the browser console shows each chunk being stored in the local database, one by one, together with its embedding. In my case, each embedding is a 1536-dimensional vector.
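How exactly the data is split is a topic for the next article. As a placeholder, here is a deliberately naive sketch that cuts text into fixed-size character windows with a small overlap. The function name, the default sizes and the strategy itself are my assumptions for illustration, not necessarily what VectorVault does.

```javascript
// Split text into windows of chunkSize characters; neighbouring chunks
// share `overlap` characters so that sentences cut at a boundary still
// appear whole in at least one chunk.
function splitIntoChunks(text, chunkSize = 500, overlap = 50) {
  if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize) {
    throw new Error("Need chunkSize > 0 and 0 <= overlap < chunkSize");
  }
  const chunks = [];
  const step = chunkSize - overlap;
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    // Stop once this window has reached the end of the text.
    if (start + chunkSize >= text.length) break;
  }
  return chunks;
}

console.log(splitIntoChunks("abcdefghij", 4, 1)); // ["abcd", "defg", "ghij"]
```

Each chunk would then be embedded and stored as one record in the vectorStore.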

5) Testing!

Now we can test whether searching for specific data returns similar documents, which could later be passed to the LLM.

Let’s search for “heat transfer efficiency”. The most similar documents should appear in the results...

It works!

This simple and efficient solution runs entirely in the browser, requiring just a few lines of code for the main algorithm, all based on straightforward school math!

A look into the future: The future of responsible personalized AI and the role of vector databases in the browser

Imagine a future where your browser is also your personal and secure AI hub, functioning much like a password manager.

An optimized version of a vector store could become a standard browser feature: always running locally, indexing what you need on demand, and serving as a “personal memory” for all your AI-powered agents, giving YOU control over what the AI copilots know about you.

Different applications and websites could tap into this shared vector database to provide deeply personalized experiences, understanding your interests, goals, and needs at the semantic level you allow.

Like a password manager, but for your personal digital fingerprint, with you owning your data and deciding who can access it.

Of course, this raises important questions about responsible AI practices: How do we ensure privacy and security when sharing a common vector database across applications? How can we guarantee fairness and prevent biases from creeping into this shared memory?

While this idea might sound unusual at first, it’s arguably less strange than many things we already do today, like handing all your data to OpenAI or relying on password managers to store sensitive details.

What do you think about the future of personalized AI and where would you store this data? Would you try such a personalized memory fingerprint?

In the next article, we will go through all the elements of building VectorVault, a client-side vector search application that demonstrates how RAG works by implementing it directly in your browser. By exploring this project together, we will learn how to optimize RAG results, strategies for splitting documents into chunks and creating embeddings, and how to store and retrieve embeddings and use them to power AI-driven applications with privacy in mind.

Stay tuned as we dive deeper into the technical implementation. I look forward to your feedback!

A second strand in this article is agent memory. I believe this is a big open question. One important aspect is probably captured by MemGPT and Letta: https://github.com/letta-ai/letta A second aspect that I think about is how agents are allowed to use information across application boundaries, potentially calling information security or privacy into question. A third aspect is how the state of the agent is synchronized between the cloud and a local system in real time. Any ideas on these issues?

One strand in this article is running vector search in the browser. I find this interesting in itself and I intend to try that myself. This would make it easy to run search on any site locally, without requiring a backend service. In many cases I believe that semantic search is enough. A site could deliver the index data and allow search to be executed in the browser. Great!! Any idea to go further on this?

Tech Innovation Manager at Accenture

3 months ago: Great article - thanks! I would add that the idea of a personal browser search and a personal search AI agent is similar to the Digital Wallet concept in Web3, where the handling of personal and shared data is solved through distribution, a combination of public and private keys, and consensus. I think data distribution and digital wallet / personal AI wallet concepts might help to enable it.

AI/ML @ Accenture | Educator

3 months ago: Excellent share here Vlad, this is what the community needs heading into the new year!

Automation in Manufacturing | Robotics & Industrial Metaverse

3 months ago: Really cool! The next step is to combine this with a small model that also runs in the browser and avoid dependence on the OpenAI API.