Decoding Blue/Green Deployment: A Comprehensive ReactJS + NodeJS + AWS Approach

Background

For startups navigating the tech terrain, managing downtime during the rollout of a new version of a full-stack web application can pose a formidable challenge.

Consider a scenario where ReactJS serves as the client and NodeJS as the server, both run by AWS ECS through task definitions. With each deployment, the ECS services currently running ReactJS and NodeJS are brought down and replaced by their updated counterparts, built from new task definitions and container images. The new version then needs thorough end-to-end testing, which takes a substantial amount of time. The resulting downtime affects end-users and can set back the overall business.

Addressing this predicament is pivotal, and herein lies the significance of exploring the blue/green deployment strategy. I want to share my experience on how to handle this issue.

However, I won't delve into the conceptual details of Blue and Green Deployment in this essay; if you're interested, you can explore that aspect through this informative article: https://candost.blog/the-blue-green-deployment-strategy/. Instead, my focus is dedicated to elucidating the practical implementation of the Blue and Green strategy for ReactJS and NodeJS, intricately woven into the fabric of AWS services.

Steps to implement

Let's start with a common ReactJS and NodeJS web app. Both client and server are hosted by ECS as Docker containers.

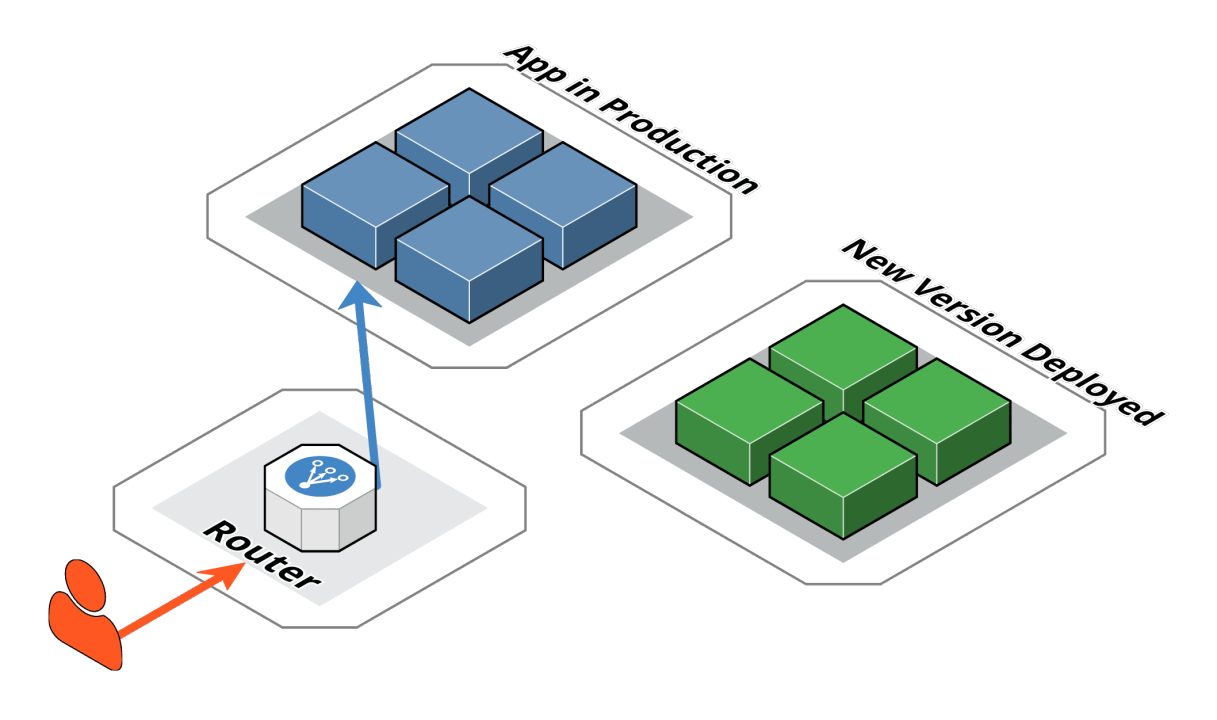

If users access www.example.com, they will encounter the live web application. However, when the need arises to deploy a new version, the challenge emerges in seamlessly transitioning users to the updated version without causing disruption or downtime. This is where the Blue/Green Deployment strategy becomes instrumental, allowing for a smooth migration from the current running version (Blue) to the newly deployed version (Green) while ensuring uninterrupted access for users. The strategy involves directing traffic to the Green environment only after thorough testing, thereby minimizing any potential adverse impact on the user experience.

The best approach I found is to deploy a second set of ECS services running the new version of both the client and the server, then add listener rules on the load balancer that forward requests from the testers' IP addresses to the new version (Green) while public users stay on the old version (Blue). This method not only ensures a smooth transition during deployment but also allows for comprehensive testing without impacting the entire user base.
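As a rough sketch of such a rule, the snippet below builds a plain object in the shape of the Elastic Load Balancing v2 `CreateRule` input, using a `source-ip` condition and a `forward` action. The function name `buildTesterRule`, the ARNs, the priority, and the tester address are placeholders chosen for illustration, not values from my setup.

```typescript
// Plain objects mirroring the ELBv2 CreateRule input shape; no SDK import needed.
interface SourceIpCondition {
  Field: "source-ip";
  SourceIpConfig: { Values: string[] };
}

interface ForwardAction {
  Type: "forward";
  TargetGroupArn: string;
}

interface CreateRuleInput {
  ListenerArn: string;
  Priority: number;
  Conditions: SourceIpCondition[];
  Actions: ForwardAction[];
}

// Hypothetical helper: route requests from the given tester CIDRs to Green.
function buildTesterRule(
  listenerArn: string,
  greenTargetGroupArn: string,
  testerCidrs: string[],
  priority: number = 10,
): CreateRuleInput {
  if (testerCidrs.length === 0) {
    throw new Error("at least one tester CIDR is required");
  }
  return {
    ListenerArn: listenerArn,
    Priority: priority,
    Conditions: [{ Field: "source-ip", SourceIpConfig: { Values: testerCidrs } }],
    Actions: [{ Type: "forward", TargetGroupArn: greenTargetGroupArn }],
  };
}

// Placeholder ARNs and a documentation-range IP, for illustration only.
const testerRule = buildTesterRule(
  "arn:aws:elasticloadbalancing:us-east-1:111111111111:listener/app/example/abc/def",
  "arn:aws:elasticloadbalancing:us-east-1:111111111111:targetgroup/green/123",
  ["203.0.113.10/32"],
);
console.log(JSON.stringify(testerRule, null, 2));
```

An object like this could be handed to `CreateRuleCommand` from `@aws-sdk/client-elastic-load-balancing-v2`, or expressed in whatever infrastructure tool you already use. Requests that match no rule fall through to the listener's default action, which keeps pointing at Blue.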

By segregating the testing traffic to the Green environment, you provide a controlled environment for thorough validation of the new version. The QA team can then start conducting end-to-end testing on the new version. Meanwhile, the Blue environment continues to serve the existing users, maintaining business continuity and minimizing potential disruptions.

The final step of switching the listener rule to forward all traffic to the new version once testing is completed is a crucial element of the Blue/Green Deployment strategy. This seamless transition ensures that the updated version (Green) becomes the primary environment for all users, effectively replacing the older version (Blue). You can even remove the old version entirely afterward to save cost or keep it as a backup. The choice is up to you.
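The switch itself can be sketched as follows, assuming public traffic follows the listener's default action. The object mirrors the ELBv2 `ModifyListener` input shape; `buildCutover`, the `TargetGroups` type, and the ARNs are hypothetical names used only for this example.

```typescript
type Environment = "blue" | "green";

// Target group ARNs for the two environments (placeholders).
interface TargetGroups {
  blue: string;
  green: string;
}

interface ModifyListenerInput {
  ListenerArn: string;
  DefaultActions: { Type: "forward"; TargetGroupArn: string }[];
}

// Hypothetical helper: point the listener's default action at one environment.
function buildCutover(
  listenerArn: string,
  groups: TargetGroups,
  target: Environment,
): ModifyListenerInput {
  return {
    ListenerArn: listenerArn,
    DefaultActions: [{ Type: "forward", TargetGroupArn: groups[target] }],
  };
}

const exampleGroups: TargetGroups = {
  blue: "arn:aws:elasticloadbalancing:us-east-1:111111111111:targetgroup/blue/111",
  green: "arn:aws:elasticloadbalancing:us-east-1:111111111111:targetgroup/green/222",
};

// Go live on Green once testing has passed.
const goLive = buildCutover("example-listener-arn", exampleGroups, "green");
// Keeping Blue around makes rollback the same call with "blue".
const rollback = buildCutover("example-listener-arn", exampleGroups, "blue");
console.log(goLive.DefaultActions[0].TargetGroupArn);
console.log(rollback.DefaultActions[0].TargetGroupArn);
```

Because rollback is the same operation pointed back at Blue, keeping the old environment alive for a while after the switch is a cheap safety net.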

By flipping the listener rule, you orchestrate a smooth switch from the controlled testing phase to the full deployment of the new version. This approach minimizes downtime and user impact, providing a robust mechanism for updating and evolving your web application. The meticulous planning and execution of this transition exemplify an efficient utilization of the Blue/Green Deployment strategy, balancing the need for thorough testing with the imperative of maintaining a seamless user experience.


Integrating SQS and Lambda functions

Integrating SQS (Simple Queue Service) and Lambda functions into the Blue/Green deployment architecture adds another layer of complexity, because messages produced by each environment need to be processed by the matching version of the code. I considered two options: two separate SQS queues with their own Lambda functions, or aliased Lambda functions that handle requests from the different environments.

Separate SQS queues and corresponding Lambda functions for each environment allow clear, independent processing of messages specific to Blue and Green. A deployment then changes not only the application but also the underlying event-driven architecture behind it.

On the other hand, using aliased Lambda functions provides a more streamlined and consolidated solution, allowing a single function to handle requests from both environments based on aliases that can be updated during the deployment process. This approach simplifies management and reduces the need for duplication while effectively maintaining the integrity of your Blue/Green strategy.
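To make the two options concrete, here is a minimal sketch of how the application might pick its messaging targets by environment. The `MessagingConfig` type, both helper functions, the queue URLs, and the alias names `blue` and `green` are all assumptions made for illustration. The only AWS convention relied on is that a Lambda alias is addressed by appending `:alias` to the function ARN.

```typescript
type DeployColor = "blue" | "green";

interface MessagingConfig {
  // Option 1: one SQS queue per environment.
  queueUrls: Record<DeployColor, string>;
  // Option 2: one Lambda function, with an alias per environment.
  functionArn: string; // unqualified ARN, without version or alias
}

// Return the queue that the given environment should publish to.
function queueUrlFor(config: MessagingConfig, color: DeployColor): string {
  return config.queueUrls[color];
}

// Return the qualified ARN for the environment's alias (function ARN + ":" + alias).
function aliasedFunctionArn(config: MessagingConfig, color: DeployColor): string {
  return `${config.functionArn}:${color}`;
}

// Placeholder values, for illustration only.
const messaging: MessagingConfig = {
  queueUrls: {
    blue: "https://sqs.us-east-1.amazonaws.com/111111111111/jobs-blue",
    green: "https://sqs.us-east-1.amazonaws.com/111111111111/jobs-green",
  },
  functionArn: "arn:aws:lambda:us-east-1:111111111111:function:process-jobs",
};

console.log(queueUrlFor(messaging, "green"));
console.log(aliasedFunctionArn(messaging, "green"));
```

With the alias option, promoting Green means repointing an alias to the new function version rather than standing up a second function, which is where the reduced duplication comes from.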

The unexpected challenges

The first challenge was CORS (Cross-Origin Resource Sharing). Its restrictions can get in the way when an application spans multiple domains or ports, so I kept both environments on the same domain and forwarded traffic based on listener rules instead of giving Green a different domain or port.

Another challenge was using the CloudFront CDN while still forwarding traffic accurately based on source IP. With CloudFront in front of the load balancer, requests arrive from CloudFront's IP addresses rather than the tester's, so a rule on the source IP alone no longer identifies the tester. My solution was to leverage the `X-Forwarded-For` header.

The X-Forwarded-For request header is automatically added and helps you identify the IP address of a client when you use an HTTP or HTTPS load balancer. Because load balancers intercept traffic between clients and servers, your server access logs contain only the IP address of the load balancer. To see the IP address of the client, use the X-Forwarded-For request header. Elastic Load Balancing stores the IP address of the client in the X-Forwarded-For request header and passes the header to your server. If the X-Forwarded-For request header is not included in the request, the load balancer creates one with the client IP address as the request value. Otherwise, the load balancer appends the client IP address to the existing header and passes the header to your server. The X-Forwarded-For request header may contain multiple IP addresses that are comma separated. The left-most address is the client IP where the request was first made. This is followed by any subsequent proxy identifiers, in a chain.
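On the NodeJS side, reading the client address out of that header can be sketched like this. The function name `clientIpFromForwardedFor` is hypothetical, and the sketch simply takes the left-most entry as described above.

```typescript
// Return the left-most address from an X-Forwarded-For value, or undefined
// when the header is missing or empty.
// Note: a client can send its own X-Forwarded-For header, so the left-most
// entry should not be trusted for security decisions.
function clientIpFromForwardedFor(header: string | undefined): string | undefined {
  if (!header) {
    return undefined;
  }
  const first = (header.split(",")[0] ?? "").trim();
  return first.length > 0 ? first : undefined;
}

console.log(clientIpFromForwardedFor("203.0.113.7, 198.51.100.20")); // prints "203.0.113.7"
console.log(clientIpFromForwardedFor(undefined)); // prints undefined
```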

With the `X-Forwarded-For` header, the source IP information is passed through CloudFront, so my listener rules can decide based on the actual client IP addresses. This keeps CloudFront available for caching and fast content delivery while still identifying testers accurately during the Blue/Green Deployment process.
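A rule of that kind can be sketched with an `http-header` condition in place of a `source-ip` one. The object below follows the ELBv2 rule condition shape. The helper name and the tester address are placeholders, and matching the header against the bare IP is an assumption: load balancer header values also accept `*` and `?` wildcards, which may be needed if the header arrives as a chain of addresses.

```typescript
interface HttpHeaderCondition {
  Field: "http-header";
  HttpHeaderConfig: { HttpHeaderName: string; Values: string[] };
}

// Hypothetical helper: match requests whose X-Forwarded-For value equals one
// of the tester addresses. Assumes the header carries a single address.
function buildForwardedForCondition(testerIps: string[]): HttpHeaderCondition {
  if (testerIps.length === 0) {
    throw new Error("at least one tester IP is required");
  }
  return {
    Field: "http-header",
    HttpHeaderConfig: {
      HttpHeaderName: "X-Forwarded-For",
      Values: testerIps,
    },
  };
}

// Documentation-range address, for illustration only.
const forwardedForCondition = buildForwardedForCondition(["203.0.113.10"]);
console.log(JSON.stringify(forwardedForCondition));
```

The condition goes into the `Conditions` list of a listener rule whose action forwards to the Green target group, exactly where a `source-ip` condition would otherwise sit.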

Conclusion

In conclusion, these technical challenges took in-depth research and practical solutions to resolve. Keeping both environments on one domain avoided CORS errors, and matching on the `X-Forwarded-For` header let me keep the benefits of a CDN while still forwarding traffic precisely. Together they made for a seamless transition during the Blue/Green Deployment, and they underscore the value of a well-considered, adaptable approach in the ever-evolving landscape of web application development.

I appreciate your engagement with the content. If you have any questions, need further clarification, or would like additional insights, feel free to share your thoughts below. I'm here to help!
