Databricks Logging and Debugging

Let’s talk about logging on Databricks, specifically in Notebooks, Spark, and Ray. Effective logging is critical for debugging, monitoring, and optimizing data engineering and machine learning workflows. I wanted to put forward some ideas that I think are worth considering.

Notebooks and Jobs

Failures happen, and sometimes we need to diagnose them in more detail than our favorite AI assistant can provide. This is where logging becomes essential. Most programs write to two standard streams: stdout and stderr. Both date back to early Unix and are exposed by the C standard library, so they are available on most operating systems. We use stdout for regular program output and stderr for errors, warnings, and diagnostics.
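In Python you can route log records to the two streams explicitly. The sketch below is a minimal, generic example rather than a Databricks-specific API: DEBUG and INFO records go to stdout, WARNING and above go to stderr. `build_split_logger` and `MaxLevelFilter` are hypothetical names, and the streams are parameters so they can be swapped out in tests.

```python
import logging
import sys
from typing import Optional, TextIO


class MaxLevelFilter(logging.Filter):
    """Let through only records strictly below `max_level`."""

    def __init__(self, max_level: int) -> None:
        super().__init__()
        self.max_level = max_level

    def filter(self, record: logging.LogRecord) -> bool:
        return record.levelno < self.max_level


def build_split_logger(
    name: str,
    out: Optional[TextIO] = None,
    err: Optional[TextIO] = None,
) -> logging.Logger:
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    logger.propagate = False  # don't also pass records to the root logger
    if logger.handlers:  # already configured, e.g. the cell was re-run
        return logger

    # Regular output: DEBUG and INFO go to stdout.
    out_handler = logging.StreamHandler(out if out is not None else sys.stdout)
    out_handler.addFilter(MaxLevelFilter(logging.WARNING))

    # Warnings, errors, and diagnostics go to stderr.
    err_handler = logging.StreamHandler(err if err is not None else sys.stderr)
    err_handler.setLevel(logging.WARNING)

    logger.addHandler(out_handler)
    logger.addHandler(err_handler)
    return logger


log = build_split_logger("split_demo")
log.info("goes to stdout")
log.error("goes to stderr")
```

Keeping the two streams separate means a job's stderr stays focused on what actually went wrong.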

We use the Python `logging` module as the cornerstone for application-level logging in Databricks environments. Its `FileHandler` class enables direct log persistence to the Databricks File System (DBFS) or Unity Catalog Volumes, which is crucial for maintaining audit trails across ephemeral clusters. Here is a crash course in logging if you haven’t used it. Levels are important for differentiating logs: they are numeric under the hood, but usually people use DEBUG, INFO, WARNING, ERROR, and CRITICAL, in order of increasing severity. Formatting logs helps a lot as well: the `%(name)s` attribute adds the logger’s name (by convention, the module name), which can be a lifesaver for nasty debugging. Finally, handlers control where logs flow: you can send them to a stream (like stdout) or to a file, as shown below.

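```python
import logging

logger = logging.getLogger('notebook')
logger.setLevel(logging.INFO)

# FileHandler needs a file, not a directory. Placeholder path: substitute
# your own catalog/schema/volume (see the note on unique filenames below).
file_handler = logging.FileHandler('/Volumes/Catalog/Schema/Volume/notebook.log')

formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')
file_handler.setFormatter(formatter)

logger.addHandler(file_handler)
```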
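Levels are applied twice: once by the logger and once by each handler, and a record has to clear both thresholds to be written. Here is a minimal, self-contained sketch of that behavior, writing to an in-memory stream instead of a volume and using a hypothetical logger name:

```python
import io
import logging

buffer = io.StringIO()

logger = logging.getLogger("levels_demo")
logger.setLevel(logging.INFO)  # the logger drops DEBUG before handlers see it
logger.propagate = False

handler = logging.StreamHandler(buffer)
handler.setLevel(logging.WARNING)  # this handler additionally drops INFO
handler.setFormatter(logging.Formatter("%(name)s - %(levelname)s - %(message)s"))
logger.addHandler(handler)

logger.debug("filtered by the logger")
logger.info("filtered by the handler")
logger.warning("written")
logger.error("also written")

print(buffer.getvalue())
```

In practice this lets one logger feed a chatty stream handler and a quieter file handler at the same time.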

One other tip: when running interactive notebooks, make sure you don’t keep adding handlers every time you re-run a cell; wrap the `addHandler` calls in a `hasHandlers` check instead of calling them unconditionally. I always recommend saving your logs to volumes, because clusters are ephemeral and should be treated as such. I would also recommend consistent logger naming (the `%(name)s` attribute). In my Python modules I usually use `logging.getLogger(__name__)`, so each logger is named after its module path.

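```python
# Attach handlers only once, so re-running the cell doesn't duplicate output.
# hasHandlers() also checks ancestors (e.g. root); `not logger.handlers` checks only this logger.
if not logger.hasHandlers():
    stream_handler = logging.StreamHandler()  # writes to stderr by default
    stream_handler.setFormatter(formatter)
    logger.addHandler(stream_handler)
    logger.addHandler(file_handler)
```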
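Putting the two tips together, a small module-level helper can hand out consistently named loggers and attach handlers only once, however many times a cell is re-run. This is a sketch: `get_logger` is a hypothetical helper, and it only adds a stream handler (a file handler pointing at your volume would be attached the same way). It checks `logger.handlers` rather than `hasHandlers()` so that handlers on the root logger don’t suppress setup.

```python
import logging

FORMAT = "%(asctime)s - %(name)s - %(levelname)s - %(message)s"


def get_logger(name: str, level: int = logging.INFO) -> logging.Logger:
    """Return a configured logger, attaching handlers only on first use."""
    logger = logging.getLogger(name)  # same name -> same logger object
    logger.setLevel(level)
    if not logger.handlers:  # only this logger's own handlers
        handler = logging.StreamHandler()
        handler.setFormatter(logging.Formatter(FORMAT))
        logger.addHandler(handler)
        logger.propagate = False  # avoid double printing via the root logger
    return logger


# In a module you would typically call get_logger(__name__).
log = get_logger("my_package.etl")
log = get_logger("my_package.etl")  # e.g. the cell was re-run
print(len(log.handlers))
```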

Databricks collects stdout and stderr logs by default, but it is worth dumping your logs to a volume, especially if you are using serverless compute or want to bring together logs from related jobs. It’s also worth noting that appending to files is limited in Volumes. Volumes are exposed through Linux FUSE, which doesn’t support direct appends or random writes by design. So to write out notebook logs properly, give each run a unique filename. Here’s how to set up chronologically sorted, ISO-8601-prefixed log files:

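```python
import datetime
import os

# Workspace path of the current notebook, e.g. /Users/<you>/<notebook>
notebook_path = (
    dbutils.notebook.entry_point.getDbutils()
    .notebook()
    .getContext()
    .notebookPath()
    .get()
)
# Keep only the notebook name; the full path contains slashes
notebook_name = os.path.basename(notebook_path)

# ISO-8601 timestamp first, so log files sort chronologically by name
timestamp = datetime.datetime.now().strftime("%Y-%m-%dT%H:%M:%S")

# Placeholder volume directory; replace with your own catalog/schema/volume
log_file_path = os.path.join(
    "/Volumes/Catalog/Schema/Volume", f"{timestamp}-{notebook_name}.log"
)

# ... continue by adding a FileHandler for log_file_path ...
```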
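If writing log lines straight into a volume gives you trouble because of the FUSE limitations above, one workaround (my assumption, not the only option) is to log to the cluster’s local disk and copy the finished file to the volume once, at the end of the run. This sketch uses only the standard library; `make_log_filename` and `copy_log_to_volume` are hypothetical helpers, and temporary directories stand in for local disk and for your `/Volumes/...` directory. It uses the compact ISO-8601 basic format (`20240102T030405Z`), which sorts the same way but keeps colons out of filenames.

```python
import datetime
import logging
import os
import shutil
import tempfile


def make_log_filename(notebook_path: str, when: datetime.datetime) -> str:
    """UTC timestamp in ISO-8601 basic format first, so names sort chronologically."""
    stamp = when.astimezone(datetime.timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    return f"{stamp}-{os.path.basename(notebook_path)}.log"


def copy_log_to_volume(logger: logging.Logger, handler: logging.FileHandler, volume_dir: str) -> str:
    """Detach and close the local file handler, then copy the file to the volume."""
    logger.removeHandler(handler)
    handler.close()  # flushes and closes the local file
    target = os.path.join(volume_dir, os.path.basename(handler.baseFilename))
    shutil.copyfile(handler.baseFilename, target)  # one sequential write, no appends
    return target


# Demo with temporary directories standing in for local disk and a volume.
local_dir = tempfile.mkdtemp()
volume_dir = tempfile.mkdtemp()  # in practice: your /Volumes/... directory

now = datetime.datetime.now(datetime.timezone.utc)
handler = logging.FileHandler(os.path.join(local_dir, make_log_filename("/Users/me/etl_notebook", now)))
logger = logging.getLogger("notebook_local")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.info("run started")

print(copy_log_to_volume(logger, handler, volume_dir))
```

The trade-off is that logs only reach the volume if the copy step runs, so in a job you would put it in a `finally` block.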

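When it comes to jobs, run IDs are essential for retrieving logs. Assuming you’ve done something similar to the above and have logs going to stdout and stderr, you can retrieve them using the Jobs API / SDK. Output is fetched per task run, so for a multi-task job you pass the task’s run ID rather than the parent job run ID. Also, per the Jobs API reference, the `logs` field is populated for tasks that write to standard streams, such as Python script, Python wheel, and JAR tasks, but not for notebook tasks. If you haven’t used the `WorkspaceClient` before, I would highly recommend making it a first-class citizen in future workflows.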

```python
from databricks.sdk import WorkspaceClient

w = WorkspaceClient()

# Placeholder ID; for a multi-task job this must be a task run ID
run_output = w.jobs.get_run_output(run_id=123456)

print(run_output.logs)
```
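For a multi-task job, you can walk the tasks of a run and fetch each task’s output in turn. A sketch, assuming a `databricks.sdk` `WorkspaceClient`: it relies on `jobs.get_run` (whose result lists `tasks`, each with a `task_key` and its own `run_id`) and `jobs.get_run_output`. `collect_task_logs` is a hypothetical helper that takes the client as a parameter so it can be exercised with a fake, and it does not handle paginated task lists for very large runs.

```python
from typing import Any, Dict


def collect_task_logs(w: Any, run_id: int) -> Dict[str, str]:
    """Map each task_key in a job run to its stdout/stderr logs or error trace.

    `w` is expected to be a databricks.sdk.WorkspaceClient; anything exposing
    the same jobs.get_run / jobs.get_run_output methods also works.
    """
    results: Dict[str, str] = {}
    run = w.jobs.get_run(run_id=run_id)
    for task in run.tasks or []:
        # Output is fetched per task run, not for the parent job run.
        output = w.jobs.get_run_output(run_id=task.run_id)
        # Fall back to error details when `logs` is empty (e.g. notebook tasks).
        results[task.task_key] = output.logs or output.error_trace or output.error or ""
    return results


# Usage on Databricks (placeholder run ID):
# from databricks.sdk import WorkspaceClient
# for key, text in collect_task_logs(WorkspaceClient(), run_id=123456).items():
#     print(f"=== {key} ===\n{text}")
```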

Spark

The Spark driver manages job orchestration and maintains the SparkContext. Driver logs capture exceptions, print statements, and lifecycle events. Spark uses Log4j as its core logging framework, which can be a bit nasty to debug. On the Python side, Spark 4.0 adds `pyspark.logger.PySparkLogger`, a wrapper around Python’s native `logging.Logger` that writes structured JSON logs. I also find the Py4J messages (Py4J is the bridge PySpark uses to talk to the JVM) a little noisy, so I use this snippet to quiet them down.

```python
import logging

# Hide Py4J's INFO chatter about Python <-> JVM calls; errors still show
logging.getLogger("py4j.clientserver").setLevel(logging.ERROR)
```
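If several libraries are chatty, a tiny helper can quiet them in one call. This is a sketch using only the standard library; `quiet_loggers` is a hypothetical name, and `py4j.java_gateway` is a second logger Py4J uses. On the JVM side, classic (non-Spark Connect) clusters also expose `spark.sparkContext.setLogLevel("WARN")` for Log4j.

```python
import logging
from typing import Iterable


def quiet_loggers(names: Iterable[str], level: int = logging.WARNING) -> None:
    """Raise the threshold of noisy third-party loggers in one call."""
    for name in names:
        # Child loggers left at NOTSET inherit this level from their parent.
        logging.getLogger(name).setLevel(level)


quiet_loggers(["py4j.clientserver", "py4j.java_gateway"], logging.ERROR)
```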

You can specify a location to deliver the logs for the Spark driver node, the worker nodes, and cluster events. When a cluster is terminated, Databricks guarantees to deliver all logs generated up until the cluster was terminated.

To configure the log delivery location:

1. On the cluster configuration page, click the Advanced Options toggle.
2. Click the Logging tab.
3. Set the delivery location (e.g. dbfs:/cluster-custom-logs).
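If you create clusters through the Clusters API or the SDK rather than the UI, the same setting is the `cluster_log_conf` field. Below is that request fragment as a Python dict, plus a hypothetical helper that builds the per-cluster folders logs are delivered into (`<destination>/<cluster-id>/driver`, `executor`, and `eventlog`). The cluster ID is made up.

```python
# Fragment of a Clusters API create/edit request body (other fields omitted).
cluster_spec_fragment = {
    "cluster_log_conf": {
        "dbfs": {"destination": "dbfs:/cluster-custom-logs"},
    },
}


def delivered_log_dirs(destination: str, cluster_id: str) -> dict:
    """Hypothetical helper: where delivered logs land for one cluster."""
    base = f"{destination.rstrip('/')}/{cluster_id}"
    return {kind: f"{base}/{kind}" for kind in ("driver", "executor", "eventlog")}


dirs = delivered_log_dirs("dbfs:/cluster-custom-logs", "0123-456789-abcdefgh")
print(dirs["driver"])
```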

We can browse DBFS in the UI via Catalog → Browse DBFS (a workspace admin may need to enable the DBFS file browser first). In notebooks, you can also use `dbutils` or the `%fs` magic command:

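```python
# Run each snippet in its own cell; %fs must be the first line of its cell.
dbutils.fs.ls("/")
```

```
%fs ls /
```

```python
# Local file APIs see DBFS through the /dbfs/ FUSE mount,
# so prefix DBFS paths with /dbfs/ in Python
import os

os.listdir("/dbfs/")
```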

You can get logs from failed executors in the Spark UI. This can be crucial when something goes awry with stages and tasks within your Spark pipeline. The Executors tab also shows active tasks per executor and lets you take thread dumps, which can be useful for diagnosing why a thread stalled or failed. For cluster-level CPU and memory metrics, use the Ganglia UI (replaced by the compute metrics UI on newer Databricks Runtime versions). As a data scientist, this is about as deep as I like to dive into Spark debugging, but if you are doing hardcore Spark data engineering, it goes much deeper.

Ray

I’m really starting to love Ray and having the option of both Ray and Spark in Databricks. Ray excels at task-parallel execution, whereas Spark excels in data-parallel scenarios. When running Ray on Spark, there are some things you should consider.

First, Ray writes its logs to /tmp/ray/session_*/logs by default, but we can configure where they are collected when setting up the Ray cluster. As with Spark, each worker node keeps its own logs on local disk, which disappears with the cluster, so centralizing logs is a wise decision.

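```python
from ray.util.spark import setup_ray_cluster

setup_ray_cluster(
    num_worker_nodes=4,
    # After head/worker nodes shut down, their logs are collected here
    collect_log_to_path="/Volumes/prod/logs/ray_collected",
    num_cpus_worker_node=8,
)
# Newer Ray releases deprecate num_worker_nodes in favor of max_worker_nodes
# (plus min_worker_nodes for autoscaling); check the signature for your version.
```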
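Once the logs are centralized, finding failures across workers is a quick scan. A minimal sketch using only the standard library: `find_errors` is a hypothetical helper that walks every file under the collection directory (it makes no assumption about how Ray names subdirectories or files) and returns the lines containing a marker string.

```python
import os
from typing import List, Tuple


def find_errors(root: str, marker: str = "ERROR") -> List[Tuple[str, int, str]]:
    """Return (file path, line number, line) for every line containing `marker`."""
    hits: List[Tuple[str, int, str]] = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in sorted(filenames):
            path = os.path.join(dirpath, filename)
            # errors="replace" keeps oddly encoded files from aborting the scan
            with open(path, encoding="utf-8", errors="replace") as f:
                for lineno, line in enumerate(f, start=1):
                    if marker in line:
                        hits.append((path, lineno, line.rstrip("\n")))
    return hits


# e.g. find_errors("/Volumes/prod/logs/ray_collected")
```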

You can also set up structured JSON logging with `LoggingConfig`. JSON records are much easier to parse downstream, for example when loading them into Unity Catalog tables or shipping them to Azure Log Analytics.

```python
import ray
from ray import LoggingConfig

# Emit Ray logs as JSON records, INFO and above
ray.init(
    logging_config=LoggingConfig(
        encoding="JSON",
        log_level="INFO",
    )
)
```
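The same idea works for plain Python logging in notebooks and jobs. The standard library has no built-in JSON formatter, so here is a minimal sketch of one. `JsonFormatter` is a hypothetical name, and the field names are my own choice, not Ray’s JSON schema. One JSON object per line is easy to load with `spark.read.json` or to ship to a log analytics service.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Format each record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "logger": record.name,
            "level": record.levelname,
            "message": record.getMessage(),
        }
        if record.exc_info:
            # Keep tracebacks inside the JSON object instead of on extra lines.
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("json_demo")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)
logger.info("loaded %d rows", 42)
```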

Once you have logs going to the right place, you can use the workspace client to manage them and enforce retention policies via a simple job.

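```python
from datetime import datetime, timedelta, timezone

from databricks.sdk import WorkspaceClient

w = WorkspaceClient()

# FileInfo.modification_time is milliseconds since the epoch,
# so build an integer cutoff instead of comparing against a datetime.
cutoff_ms = int((datetime.now(timezone.utc) - timedelta(days=30)).timestamp() * 1000)

# DBFS path shown; the same age check applies to logs kept in a volume.
for log_dir in w.dbfs.list("/logs/ray_collected"):
    if log_dir.modification_time and log_dir.modification_time < cutoff_ms:
        w.dbfs.delete(log_dir.path, recursive=True)
```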
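Before letting a job delete anything, it helps to separate choosing what to delete from deleting it, so you can dry-run the policy. A sketch, assuming entries shaped like the SDK’s `FileInfo` (a `path` plus a `modification_time` in milliseconds since the epoch); `select_expired` is a hypothetical helper.

```python
import time
from typing import Any, Iterable, List, Optional


def select_expired(entries: Iterable[Any], max_age_days: int, now_ms: Optional[int] = None) -> List[str]:
    """Return paths whose modification_time (ms since epoch) is older than the cutoff."""
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    cutoff_ms = now_ms - max_age_days * 24 * 60 * 60 * 1000
    return [
        e.path
        for e in entries
        # Skip entries without a timestamp rather than deleting them by accident.
        if e.modification_time and e.modification_time < cutoff_ms
    ]


# Dry run first, then delete only what you expect:
# expired = select_expired(w.dbfs.list("/logs/ray_collected"), max_age_days=30)
# print(expired)
# for path in expired:
#     w.dbfs.delete(path, recursive=True)
```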

In Closing

Logging isn’t the most scintillating topic, but it makes a world of difference when moving applications to prod. And it doesn’t have to be hard. Here is a quick recap of some of the key concepts I presented.

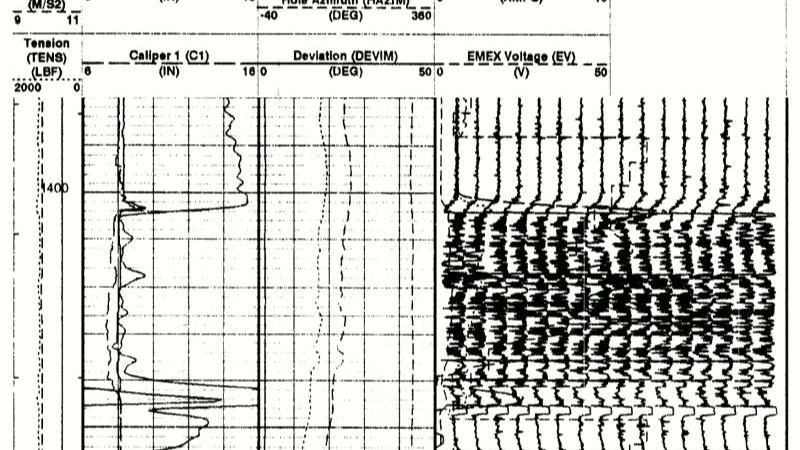

