Cutting-Edge Tricks of Applying Large Language Models

Large language models (LLMs) are a pillar of innovation in the ever-evolving landscape of artificial intelligence. Models like GPT-3 have showcased impressive natural language processing and content generation capabilities. Yet harnessing their full potential requires understanding how they work and applying effective techniques, such as fine-tuning, to optimise their performance.

As a data scientist with a penchant for digging into the depths of LLM research, I’ve embarked on a journey to unravel the tricks and strategies that make these models shine. In this article, I’ll walk you through some key aspects of creating high-quality data for LLMs, building effective models, and maximising their utility in real-world applications.

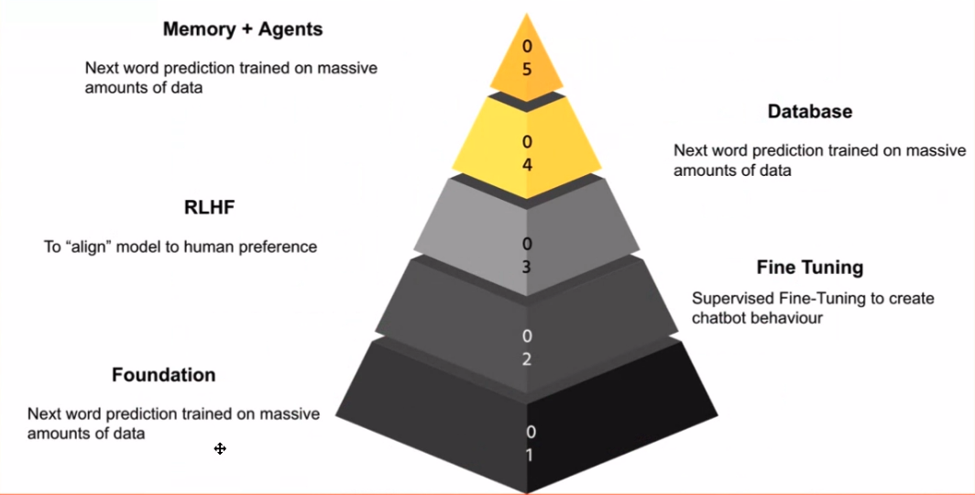

When delving into the realm of LLMs, it’s important to recognise the stages of their application. To me, these stages form a knowledge pyramid, each layer building on the one before. The foundational model is the bedrock: it’s the model that excels at predicting the next word, akin to your smartphone’s predictive keyboard.

The magic happens when you take that foundational model and fine-tune it using data pertinent to your task. This is where chat models come into play. By training the model on chat conversations or instructive examples, you can coax it to exhibit chatbot-like behaviour, which is a powerful tool for various applications.

Safety is paramount, especially since the internet, where much of the training data originates, can be a rather uncouth place. The next step involves Reinforcement Learning from Human Feedback (RLHF). This stage aligns the model’s behaviour more closely with human values and helps guard against inappropriate or inaccurate responses.

Read more -> https://forum.devcircleafrica.com/forum/artificial-intelligence/cutting-edge-tricks-of-applying-large-language-models/
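To make the bottom layer of the pyramid concrete, here is a toy sketch of next-word prediction in the spirit of the predictive-keyboard analogy. It is only an illustration: real foundational models use neural networks over tokens, not word counts, and the function names and the tiny corpus below are hypothetical, chosen purely for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    follow_counts = defaultdict(Counter)
    words = text.lower().split()
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; a real model is trained on vastly more text.
corpus = "the model predicts the next word and the next word follows the model"
model = train_bigram_model(corpus)
print(predict_next_word(model, "next"))
```

Fine-tuning and RLHF, the upper layers of the pyramid, start from a model that already does this kind of prediction well and then adjust its behaviour, rather than building a new predictor from scratch.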