Creating a Convolutional Neural Network from Scratch

Introduction

Convolution is a basic building block of computer vision. A Convolutional Neural Network (CNN) is a type of deep learning architecture designed to analyze and interpret image data. CNNs learn in much the same way as we humans do. We are born without knowing what a cat or a bird looks like. As we mature, we learn that certain shapes and colors correspond to certain elements, which together make up a larger whole. Once we learn what paws and feathers look like, we are better able to differentiate between a cat and a bird. In a similar way, a CNN learns patterns from images and then applies that knowledge to image detection and classification.

CNNs extract features from images using a process called convolution, which makes them particularly useful for tasks such as image classification and object detection. A CNN learns patterns within an image by sliding a filter across the pixel data to identify key features such as edges, shapes, and textures.

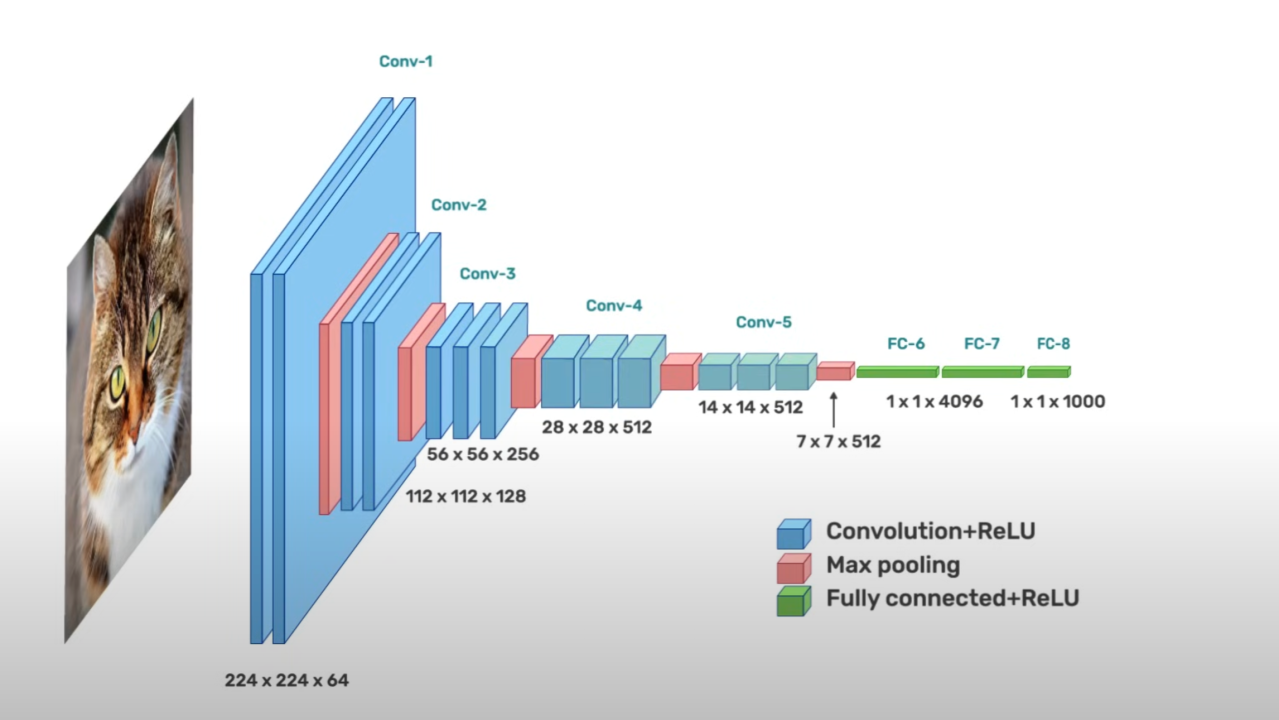
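
To make the sliding-filter idea concrete, here is a minimal NumPy sketch. The toy image and the hand-written edge filter are assumptions for illustration only; in a real CNN the filter values are learned during training, and libraries such as Keras use far faster implementations.

```python
import numpy as np

def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like deep learning libraries, this does not flip the kernel
    (strictly speaking it is a cross-correlation).
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    feature_map = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]
            feature_map[i, j] = np.sum(patch * kernel)
    return feature_map

# Toy 5x5 grayscale image: dark on the left (0), bright on the right (1).
image = np.array([[0, 0, 0, 1, 1]] * 5, dtype=float)

# Hand-written vertical-edge filter (illustrative; a CNN would learn these values).
vertical_edge = np.array([
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
], dtype=float)

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # large values mark where the dark-to-bright edge sits
```

The output is smaller than the input (3x3 instead of 5x5) because no padding is used, and the non-zero columns line up with the position of the edge.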

A CNN consists of three kinds of layers:

a) an input layer

b) an output layer

c) hidden layers, which include multiple convolutional layers.

The hidden layers also contain pooling layers, fully connected layers, and normalization layers.

The first convolutional layers capture basic features such as edges, color, gradient orientation, and simple geometric shapes.

The pooling layer progressively reduces the spatial size of the representation for more efficient computation. It operates on each feature map independently.

A common approach is max pooling, in which only the maximum value within each small window of a feature map is kept, reducing the number of values passed on to later layers. Stacking convolutional layers allows the input to be decomposed into its fundamental elements.
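
As a rough illustration of max pooling, the sketch below applies non-overlapping 2x2 windows to a small feature map. The function name and the sample values are made up for this example.

```python
import numpy as np

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h, w = feature_map.shape
    out_h, out_w = h // size, w // size
    trimmed = feature_map[:out_h * size, :out_w * size]
    # Split into (out_h, size, out_w, size) blocks and take the max of each block.
    windows = trimmed.reshape(out_h, size, out_w, size)
    return windows.max(axis=(1, 3))

feature_map = np.array([
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [7, 2, 9, 5],
    [0, 1, 3, 8],
])

pooled = max_pool2d(feature_map)
print(pooled)  # the 4x4 map shrinks to 2x2: [[6, 2], [7, 9]]
```

Each output value is the strongest response in its window, so the map gets four times smaller while the most prominent features survive.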

Normalization layers rescale the data to improve the performance and stability of neural networks. They make the inputs of each layer more manageable by rescaling them to a mean of zero and a variance of one.
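
The core arithmetic of a normalization layer can be sketched as follows. This is a simplified version that only normalizes each feature across a batch; real batch normalization layers additionally learn a scale and a shift, which are omitted here. The batch values are invented for illustration.

```python
import numpy as np

def batch_normalize(batch, eps=1e-5):
    """Normalize each feature (column) across the batch to roughly zero mean, unit variance."""
    mean = batch.mean(axis=0)
    var = batch.var(axis=0)
    # eps avoids division by zero when a feature is constant across the batch.
    return (batch - mean) / np.sqrt(var + eps)

# Three samples with two features on very different scales.
batch = np.array([
    [1.0, 200.0],
    [2.0, 400.0],
    [3.0, 600.0],
])

normalized = batch_normalize(batch)
print(normalized.mean(axis=0))  # close to 0 for both features
print(normalized.std(axis=0))   # close to 1 for both features
```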

Fully connected layers connect every neuron in one layer to all the neurons in the next layer.

Real World Example

Now that we have a basic understanding of a Convolutional Neural Network, let's dive into a real-world use case and understand its applications. In this example, I will use the publicly available HAM10000 dataset and create a CNN from scratch using Keras with TensorFlow as the backend. This CNN will help us classify images of skin cancer cells in humans. You can find the code for this example in my GitHub repo.

Step 1. Importing Essential Libraries

Step 2. Dataset Preprocessing and Cleansing - Here I profile the HAM10000 dataset and perform data quality checks for nulls and missing values. This is a necessary step.

Step 3. Exploratory Data Analysis

Looking at the results, the cell type Melanocytic nevi is predominant in this dataset compared to the other cell types.

The largest group of patients appears to be between 30 and 60 years of age.

Male patients appear to make up a larger share of the dataset.

Step 4. Split Data for Training and Testing - The data is split in an 80-20 ratio: 80% for training and 20% for testing.
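
A shuffled 80-20 split can be sketched with NumPy alone, as below. This is a stand-in for whatever splitting utility the original code uses; the function name, the seed, and the sample count of 100 are assumptions for illustration.

```python
import numpy as np

def train_test_split_indices(n_samples, test_fraction=0.2, seed=42):
    """Shuffle sample indices and split them into train and test index arrays."""
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical dataset of 100 samples.
train_idx, test_idx = train_test_split_indices(n_samples=100)
print(len(train_idx), len(test_idx))  # 80 20
```

The index arrays can then be used to select matching rows from both the image array and the label array, so images and labels stay aligned.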

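Step 5. Apply Normalization - Normalizing the pixel values puts all inputs on a comparable scale, which makes training more stable and model performance more consistent. To avoid data leakage, the normalization statistics should be computed from the training set only and then applied to the test set.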
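
One common way to normalize image data is to subtract the mean and divide by the standard deviation. The sketch below assumes random stand-in arrays instead of the real images, and it reuses the training statistics for the test set so that no information from the test data influences preprocessing. Whether the original code does exactly this is not stated in the article.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-ins for the image arrays: (samples, height, width, channels), pixels 0-255.
x_train = rng.integers(0, 256, size=(8, 75, 100, 3)).astype("float32")
x_test = rng.integers(0, 256, size=(2, 75, 100, 3)).astype("float32")

# Statistics come from the training set only.
train_mean = x_train.mean()
train_std = x_train.std()

x_train_norm = (x_train - train_mean) / train_std
x_test_norm = (x_test - train_mean) / train_std  # same statistics, no peeking at test data

print(x_train_norm.mean(), x_train_norm.std())  # roughly 0 and 1
```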

Step 6. One-Hot Encoding - We have 7 different classes of skin lesion. This step one-hot encodes the labels, turning each class code into a 7-element vector with a single 1.
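
One-hot encoding can be sketched in a couple of lines of NumPy. The label values below are invented; Keras also ships a `to_categorical` utility that produces the same kind of output.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Turn integer class codes into rows with a single 1 at the class index."""
    return np.eye(num_classes, dtype="float32")[labels]

# Hypothetical class codes for four images (7 classes, coded 0-6).
labels = np.array([0, 3, 6, 3])
encoded = one_hot(labels, num_classes=7)
print(encoded[1])  # [0. 0. 0. 1. 0. 0. 0.]
```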

Step 7. Splitting Training and Validation Sets - I use 90% of the training data to train the CNN and hold out the remaining 10% for validation.

Step 8. Build our Model - This is where I define a CNN using Keras

Input Shape - This CNN expects each image to be 75 pixels high and 100 pixels wide; the 3 represents the RGB channels (red, green, blue).

NumClasses is the number of cell types the model has to tell apart.

The first layer in our CNN is the convolutional (Conv2D) layer. I chose 32 filters for the first two Conv2D layers and 64 filters for the last two. Each filter transforms a part of the image. From these transformed images, the CNN can isolate features that are useful everywhere in the image.

The next important layer is the pooling (MaxPool2D) layer. By combining these two layer types, the CNN is able to combine local features and learn more global features of the image.

Notice the Dropout layers. Dropout is a regularization technique in which a proportion of the nodes in a layer are randomly ignored during training. This helps reduce overfitting and improves generalization.

'relu' is the rectifier activation function, max(0, x). It is used to add non-linearity to the network.

The Flatten layer is used to convert the final feature maps into a single 1D vector. This flattening step is needed so that we can use fully connected layers after the convolutional and max-pooling layers. It combines all the local features found by the previous convolutional layers.
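
Putting the pieces described above together, a model of this shape could be sketched in Keras as follows. The input shape, the 32/64 filter counts, ReLU, max pooling, dropout, and flattening come from the description; the 3x3 kernels, "same" padding, dropout rates, the 128-unit dense layer, and the softmax output over 7 classes are assumptions made to get a runnable example and may differ from the code in the repo.

```python
from tensorflow import keras
from tensorflow.keras import layers

input_shape = (75, 100, 3)  # height, width, RGB channels
num_classes = 7             # number of classes, taken from Step 6

model = keras.Sequential([
    keras.Input(shape=input_shape),
    # Two convolutional layers with 32 filters each (kernel size and padding are assumed).
    layers.Conv2D(32, (3, 3), activation="relu", padding="same"),
    layers.Conv2D(32, (3, 3), activation="relu", padding="same"),
    layers.MaxPooling2D(pool_size=(2, 2)),
    layers.Dropout(0.25),   # assumed dropout rate
    # Two convolutional layers with 64 filters each.
    layers.Conv2D(64, (3, 3), activation="relu", padding="same"),
    layers.Conv2D(64, (3, 3), activation="relu", padding="same"),
    layers.MaxPooling2D(pool_size=(2, 2)),
    layers.Dropout(0.25),   # assumed dropout rate
    # Flatten the feature maps and classify with fully connected layers.
    layers.Flatten(),
    layers.Dense(128, activation="relu"),  # assumed layer size
    layers.Dropout(0.5),                   # assumed dropout rate
    layers.Dense(num_classes, activation="softmax"),
])

model.summary()
```

Calling `model.summary()` prints the output shape of every layer, which is a quick way to check how each pooling step shrinks the feature maps before they are flattened.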

Step 9. Define an Optimizer

Now that the CNN layers have been added to the model, I will set up an optimizer. The optimizer iteratively updates the parameters (filter kernel values, and the weights and biases of neurons) in order to minimize the loss.
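
The idea of iteratively adjusting parameters to minimize a loss can be shown with plain gradient descent on a one-parameter toy problem. This is only an illustration of the principle, not the optimizer used in this project; the optimizers that Keras provides build on the same idea with extra refinements.

```python
def gradient_descent(grad_fn, w0, learning_rate=0.1, steps=50):
    """Repeatedly step against the gradient to reduce the loss."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * grad_fn(w)
    return w

# Toy loss L(w) = (w - 3)^2, whose gradient is 2 * (w - 3) and whose minimum is at w = 3.
w_final = gradient_descent(lambda w: 2 * (w - 3), w0=0.0)
print(w_final)  # very close to 3
```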

Step 10. Data Augmentation to avoid Overfitting the Model

To avoid overfitting, we need to artificially expand the HAM10000 dataset. The idea is to alter the training data with small transformations.
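
As a sketch of what "small transformations" can look like, the function below randomly flips an image and shifts it a few pixels sideways. The specific transformations and ranges are assumptions chosen for illustration; the original code may use different ones, and Keras offers its own preprocessing tools for this.

```python
import numpy as np

def augment(image, rng):
    """Return a randomly flipped and shifted copy of an (height, width, channels) image."""
    out = image
    if rng.random() < 0.5:
        out = out[:, ::-1, :]           # horizontal flip
    if rng.random() < 0.5:
        out = out[::-1, :, :]           # vertical flip
    shift = int(rng.integers(-5, 6))    # shift by up to 5 pixels left or right
    out = np.roll(out, shift, axis=1)   # pixels wrap around the edge (a simplification)
    return out.copy()

rng = np.random.default_rng(0)
image = rng.random((75, 100, 3))        # hypothetical stand-in for one training image
augmented = augment(image, rng)
print(augmented.shape)                  # (75, 100, 3), same shape as the input
```

Because a new variant is generated every time an image is drawn, the model rarely sees exactly the same pixels twice, which makes memorizing the training set harder.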

Step 11. Model Training

Now, with everything ready, I will train the model on x_train and y_train. In this step I have chosen a batch size of 10 and 50 epochs.
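
To get a feel for what these settings mean, the small helper below computes how many weight updates a training run performs. The sample count of 7000 is a made-up round number, not the actual size of the training set.

```python
import math

def updates_per_run(n_samples, batch_size, epochs):
    """Return (steps per epoch, total weight updates) for mini-batch training."""
    steps_per_epoch = math.ceil(n_samples / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs

# Hypothetical training set of 7000 images with the batch size and epochs from this step.
steps, total = updates_per_run(n_samples=7000, batch_size=10, epochs=50)
print(steps, total)  # 700 35000
```

A small batch size such as 10 means many updates per epoch, which tends to make each epoch slower but gives the optimizer more frequent feedback.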

Step 12. Model Evaluation

Looking at the plot, we see that the most incorrect predictions are for Basal cell carcinoma (code 3). The second most frequently misclassified type is Vascular lesions (code 5), followed by Melanocytic nevi (code 0), whereas Actinic keratoses (code 4) has the fewest incorrect predictions.
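
A per-class error breakdown like this can be derived from a confusion matrix. The sketch below uses three classes and invented predictions purely to show the computation; it does not reproduce the results of this model.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm

def fraction_incorrect(cm):
    """Share of each true class that was misclassified (0 for classes with no samples)."""
    totals = cm.sum(axis=1)
    wrong = totals - np.diag(cm)
    return wrong / np.maximum(totals, 1)

# Made-up labels and predictions for a 3-class toy problem.
y_true = np.array([0, 0, 0, 1, 1, 2, 2, 2, 2])
y_pred = np.array([0, 0, 1, 1, 1, 0, 2, 1, 2])

cm = confusion_matrix(y_true, y_pred, num_classes=3)
errors = fraction_incorrect(cm)
print(cm)
print(errors)  # class 2 has the highest share of wrong predictions here
```

Looking at the share of errors per class, not just the raw count, matters in an imbalanced dataset, where a dominant class can collect many errors simply because it has the most samples.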

We can fine-tune the model further to achieve higher accuracy, but even as it stands it is efficient in comparison to detection with the human eye.

Conclusion

Using a real-world example, we saw what a convolutional neural network (CNN) is, how to create one from scratch, and how it can be used to solve an image classification problem in healthcare. CNNs have other applications as well: for example, a CNN can be used to implement a level 2 autonomous vehicle by mapping pixels from the camera input to steering commands. The network automatically learns the most relevant features from the camera input, and so requires minimal human intervention. You can also read more about how a CNN can be built from scratch to classify CIFAR images. Don't forget to hit Like if you liked this article.

Here's the link to the dataset and the paper: https://paperswithcode.com/dataset/ham10000-1