Building Your Modern Data Lakehouse: A Deep Dive into Azure Data Factory

Vitor Raposo

Data Engineer | Azure/AWS | Python & SQL Specialist | ETL & Data Pipeline Expert

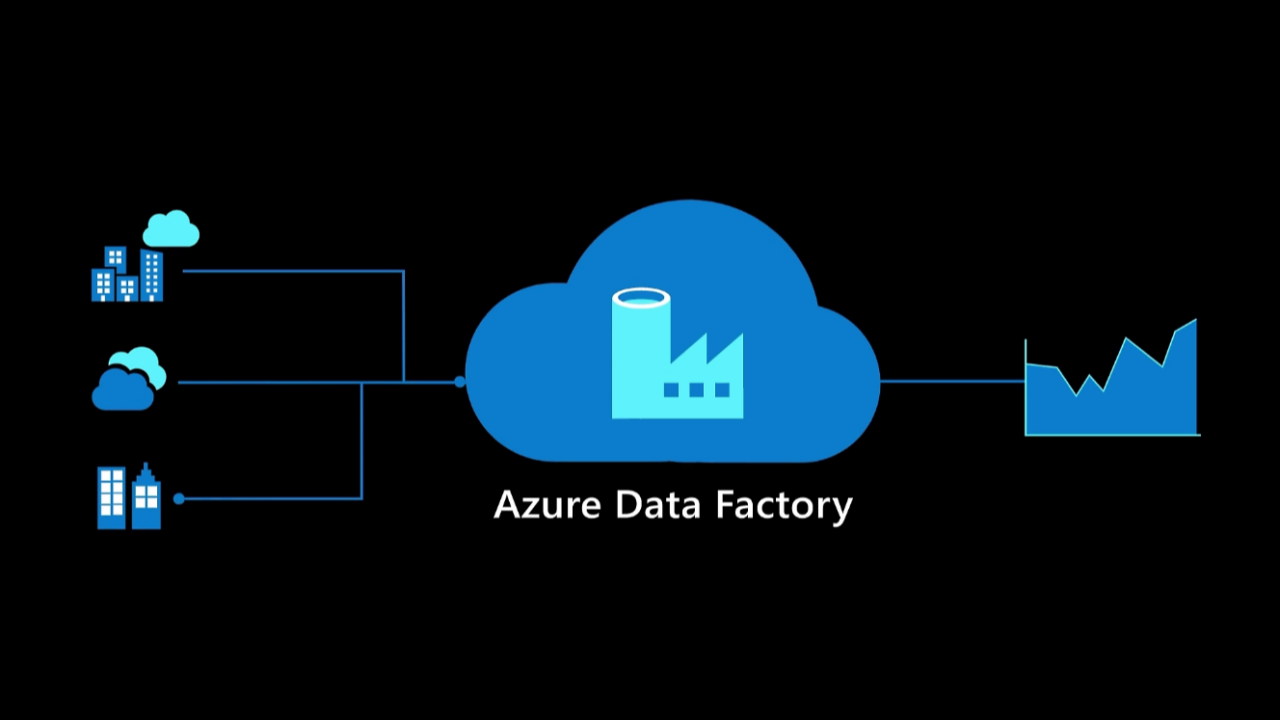

Continuing our exploration of the Azure Lakehouse architecture, this article delves into Azure Data Factory (ADF), a pivotal tool for data ingestion and integration. In any modern data lakehouse, moving data reliably from its many sources into the lakehouse is essential. ADF serves as the backbone of ingestion, enabling businesses to automate workflows and connect to diverse data systems.

Below, we’ll unpack the essential features of ADF, how it integrates into the Azure ecosystem, and its role in building an efficient lakehouse architecture.

What is Azure Data Factory (ADF)?

Azure Data Factory is a cloud-based data integration service designed to orchestrate and automate the movement and transformation of data. It facilitates both ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes, making it suitable for various use cases across industries.
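To make the ETL/ELT distinction concrete, here is a toy Python sketch. It is not ADF code: in-memory lists stand in for a source system and the lakehouse, and the function names are hypothetical. The only difference between the two flows is where the transformation happens relative to the load.

```python
from __future__ import annotations


def extract() -> list[dict]:
    """Hypothetical source system: raw order rows with amounts as strings."""
    return [
        {"order_id": 1, "amount": "19.90", "country": "br"},
        {"order_id": 2, "amount": "5.00", "country": "us"},
    ]


def transform(rows: list[dict]) -> list[dict]:
    """Cast amounts to float and upper-case the country codes."""
    return [
        {**row, "amount": float(row["amount"]), "country": row["country"].upper()}
        for row in rows
    ]


def etl(target: dict) -> None:
    # ETL: transform in the pipeline, then load only the curated result.
    target["curated"] = transform(extract())


def elt(target: dict) -> None:
    # ELT: land the raw data first, then transform it inside the target platform.
    target["raw"] = extract()
    target["curated"] = transform(target["raw"])


etl_lake: dict = {}
elt_lake: dict = {}
etl(etl_lake)
elt(elt_lake)
print(sorted(etl_lake))  # ['curated']
print(sorted(elt_lake))  # ['curated', 'raw']
```

Both flows end with the same curated data; ELT additionally keeps the raw copy in the lake, which is one reason it pairs well with a lakehouse.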

Key Features of ADF

1. Orchestration for Data Pipelines

2. Diverse Data Source Integration

3. Code-Free Data Transformation with Data Flows

4. Scalable, Pay-as-You-Go Model
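Orchestration in ADF is expressed through activity dependencies: an activity can declare `dependsOn` conditions on the activities before it. The sketch below writes a hypothetical two-step pipeline (a Copy activity followed by a Data Flow) as a Python dict mirroring ADF's pipeline JSON, then derives the run order with a topological sort. Pipeline, dataset, and data flow names are placeholders, and the ordering helper is only an illustration; it ignores dependency conditions such as `Failed` or `Completed`, which the ADF service evaluates at run time.

```python
import json
from graphlib import TopologicalSorter

# Hypothetical pipeline definition; all reference names are placeholders.
pipeline = {
    "name": "IngestSalesPipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyRawSales",
                "type": "Copy",
                "inputs": [{"referenceName": "SourceSalesTable", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "LakeRawSales", "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "SqlServerSource"},
                    "sink": {"type": "ParquetSink"},
                },
            },
            {
                "name": "CleanSales",
                "type": "ExecuteDataFlow",
                # Run only after the copy step has succeeded.
                "dependsOn": [
                    {"activity": "CopyRawSales", "dependencyConditions": ["Succeeded"]}
                ],
                "typeProperties": {
                    "dataflow": {"referenceName": "CleanSalesFlow", "type": "DataFlowReference"}
                },
            },
        ]
    },
}


def execution_order(pipeline_def: dict) -> list[str]:
    """Order activities so each comes after the activities it depends on.

    Illustration only: dependency conditions are ignored.
    """
    graph = {
        act["name"]: {dep["activity"] for dep in act.get("dependsOn", [])}
        for act in pipeline_def["properties"]["activities"]
    }
    return list(TopologicalSorter(graph).static_order())


pipeline_json = json.dumps(pipeline, indent=2)  # the dict serializes to plain JSON
print(execution_order(pipeline))  # ['CopyRawSales', 'CleanSales']
```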

ADF’s Role in the Azure Lakehouse

1. Ingesting Data into Azure Data Lake Storage Gen2

ADF plays a critical role in bringing data into Azure Data Lake Storage Gen2 (ADLS Gen2), the foundation of Azure’s lakehouse. It manages ingestion from structured databases (like SQL Server or Oracle), semi-structured and delimited files (like JSON or CSV), and unstructured sources (like logs and media files).

Example Use Case: ADF extracts transactional data from an on-premises SQL Server, transforms it in the cloud, and loads it into ADLS Gen2 for further analysis.
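For the SQL Server scenario above, an on-premises source is reached through a self-hosted integration runtime, referenced from the linked service's `connectVia` property, while ADLS Gen2 uses the `AzureBlobFS` linked service type. The sketch below mirrors those JSON definitions as Python dicts. All resource names, the query, and the connection-string placeholder are assumptions; real credentials are better kept in Azure Key Vault than inlined.

```python
# Placeholder names throughout; replace <...> values with your own resources.
linked_services = [
    {
        "name": "OnPremSqlServer",
        "properties": {
            "type": "SqlServer",
            "typeProperties": {"connectionString": "<connection string>"},
            # On-premises sources are reached through a self-hosted integration runtime.
            "connectVia": {"referenceName": "SelfHostedIR", "type": "IntegrationRuntimeReference"},
        },
    },
    {
        "name": "LakehouseAdls",
        "properties": {
            "type": "AzureBlobFS",  # ADLS Gen2 connector type
            "typeProperties": {"url": "https://<account>.dfs.core.windows.net"},
        },
    },
]

# Hypothetical Copy activity: query the source table, write Parquet into the lake.
copy_activity = {
    "name": "CopyTransactionsToLake",
    "type": "Copy",
    "inputs": [{"referenceName": "SqlTransactions", "type": "DatasetReference"}],
    "outputs": [{"referenceName": "LakeTransactionsParquet", "type": "DatasetReference"}],
    "typeProperties": {
        "source": {"type": "SqlServerSource", "sqlReaderQuery": "SELECT * FROM dbo.Transactions"},
        "sink": {"type": "ParquetSink"},
    },
}


def integration_runtime(service: dict) -> str:
    """Name of the IR a linked service runs on; without connectVia, the default Azure IR is used."""
    ref = service["properties"].get("connectVia")
    return ref["referenceName"] if ref else "AutoResolveIntegrationRuntime"


print({s["name"]: integration_runtime(s) for s in linked_services})
# {'OnPremSqlServer': 'SelfHostedIR', 'LakehouseAdls': 'AutoResolveIntegrationRuntime'}
```

The self-hosted runtime is installed on a machine that can reach the on-premises server, so ADF never needs inbound network access to the database.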

2. Integrating with Streaming Data Platforms

ADF complements real-time streaming platforms like Azure Event Hubs. While Event Hubs ingests streams in real time, ADF collects and aggregates this data periodically into the lakehouse for batch processing.

Example Use Case: A business monitors IoT device data using Event Hubs and employs ADF to run hourly aggregations, loading summarized data into ADLS Gen2.
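An hourly cadence like this maps naturally onto an ADF schedule trigger with an `Hour` recurrence. The sketch below shows a hypothetical trigger definition as a Python dict, plus a plain-Python function that illustrates the per-device, per-hour aggregation such a run would compute. The trigger, pipeline, and field names are assumptions, and in ADF the aggregation itself would run in a Data Flow or downstream compute rather than in Python.

```python
from collections import defaultdict
from datetime import datetime, timezone

# Hypothetical schedule trigger that starts a pipeline once every hour.
hourly_trigger = {
    "name": "HourlyIotAggregation",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Hour",
                "interval": 1,
                "startTime": "2024-01-01T00:00:00Z",
                "timeZone": "UTC",
            }
        },
        "pipelines": [
            {"pipelineReference": {"referenceName": "AggregateIotPipeline", "type": "PipelineReference"}}
        ],
    },
}


def hourly_summary(events: list[dict]) -> dict[tuple[str, datetime], dict]:
    """Group events by device and hour; return count and average temperature."""
    buckets: dict[tuple[str, datetime], list[float]] = defaultdict(list)
    for event in events:
        # Truncate the timestamp to the start of its hour.
        hour = event["ts"].replace(minute=0, second=0, microsecond=0)
        buckets[(event["device_id"], hour)].append(event["temperature"])
    return {
        key: {"count": len(vals), "avg_temperature": sum(vals) / len(vals)}
        for key, vals in buckets.items()
    }


utc = timezone.utc
events = [
    {"device_id": "sensor-1", "ts": datetime(2024, 1, 1, 10, 5, tzinfo=utc), "temperature": 20.0},
    {"device_id": "sensor-1", "ts": datetime(2024, 1, 1, 10, 45, tzinfo=utc), "temperature": 22.0},
    {"device_id": "sensor-1", "ts": datetime(2024, 1, 1, 11, 2, tzinfo=utc), "temperature": 25.0},
]
summary = hourly_summary(events)
print(summary[("sensor-1", datetime(2024, 1, 1, 10, tzinfo=utc))])
# {'count': 2, 'avg_temperature': 21.0}
```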

ADF in Action: ETL/ELT Process within the Lakehouse

ETL Process:

ELT Process:

Integration with Azure Services for the Lakehouse

Example Workflow: A marketing team uses ADF to pull data from multiple advertising platforms, stores it in ADLS Gen2, and triggers Synapse Analytics for aggregations. Power BI connects to Synapse for up-to-date insights into campaign performance.
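One way to express this workflow is a `ForEach` activity that runs one Copy per platform in parallel, followed by a Stored Procedure activity against Synapse once every copy has succeeded. The sketch below mirrors that shape as Python dicts. The platform list, dataset names, the `platform` dataset parameter, the REST source type, and the stored procedure name are all assumptions for illustration, and the helper only reads the parameter's default value instead of evaluating the `items` expression the way ADF does.

```python
# Hypothetical names throughout; the source type depends on each platform's connector.
platforms = ["platform_a", "platform_b", "platform_c"]

copy_per_platform = {
    "name": "CopyPlatformData",
    "type": "Copy",
    # @item() is the current element of the ForEach loop.
    "inputs": [{
        "referenceName": "AdPlatformApi",
        "type": "DatasetReference",
        "parameters": {"platform": {"value": "@item()", "type": "Expression"}},
    }],
    "outputs": [{
        "referenceName": "LakeMarketingRaw",
        "type": "DatasetReference",
        "parameters": {"platform": {"value": "@item()", "type": "Expression"}},
    }],
    "typeProperties": {"source": {"type": "RestSource"}, "sink": {"type": "ParquetSink"}},
}

pipeline = {
    "name": "MarketingCampaignPipeline",
    "properties": {
        "parameters": {"platforms": {"type": "Array", "defaultValue": platforms}},
        "activities": [
            {
                "name": "ForEachPlatform",
                "type": "ForEach",
                "typeProperties": {
                    "items": {"value": "@pipeline().parameters.platforms", "type": "Expression"},
                    "isSequential": False,  # copy the platforms in parallel
                    "activities": [copy_per_platform],
                },
            },
            {
                "name": "AggregateInSynapse",
                "type": "SqlServerStoredProcedure",
                # Aggregate only after every platform copy has succeeded.
                "dependsOn": [{"activity": "ForEachPlatform", "dependencyConditions": ["Succeeded"]}],
                "linkedServiceName": {"referenceName": "SynapseDedicatedPool", "type": "LinkedServiceReference"},
                "typeProperties": {"storedProcedureName": "dbo.usp_AggregateCampaigns"},
            },
        ],
    },
}


def expand_foreach(pipeline_def: dict) -> list[str]:
    """Simplified stand-in: list the copy runs implied by the parameter's default value."""
    param = pipeline_def["properties"]["parameters"]["platforms"]
    return [f"CopyPlatformData[{item}]" for item in param["defaultValue"]]


print(expand_foreach(pipeline))
# ['CopyPlatformData[platform_a]', 'CopyPlatformData[platform_b]', 'CopyPlatformData[platform_c]']
```

Keeping the platform list as a pipeline parameter means a new advertising source can be added at trigger time without editing the pipeline itself.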

Monitoring and Error Handling in ADF

Operational Efficiency with ADF

Conclusion: Azure Data Factory as the Lakehouse Orchestrator

Azure Data Factory is the linchpin of the Azure Lakehouse, ensuring smooth data flow across every stage, from ingestion and transformation to storage and analytics. Its strength in orchestrating batch workflows, its ability to complement real-time streaming platforms, and its broad integration capabilities make it an indispensable tool for modern data architectures.

In the next article, we’ll explore Azure Data Lake Storage Gen2 (ADLS Gen2).

By mastering ADF, organizations can unlock the full potential of their data lakehouse, enabling them to make data-driven decisions with speed and confidence. Stay tuned as we continue building the complete Azure Lakehouse stack.
