The Building Blocks of NLP: Text Processing and Representation Explained

Introduction: Bridging the Gap Between Humans and Machines

Have you ever sent a text message and your phone suggested the exact word you were thinking? Or maybe you've asked Siri or Alexa a question, and they understood and responded appropriately. It's almost magical, isn't it? But how do machines, which operate on numbers and code, comprehend the rich, complex language that humans use every day?

Welcome to the fascinating world of Natural Language Processing (NLP)—a field of artificial intelligence that focuses on enabling machines to understand, interpret, and generate human language.

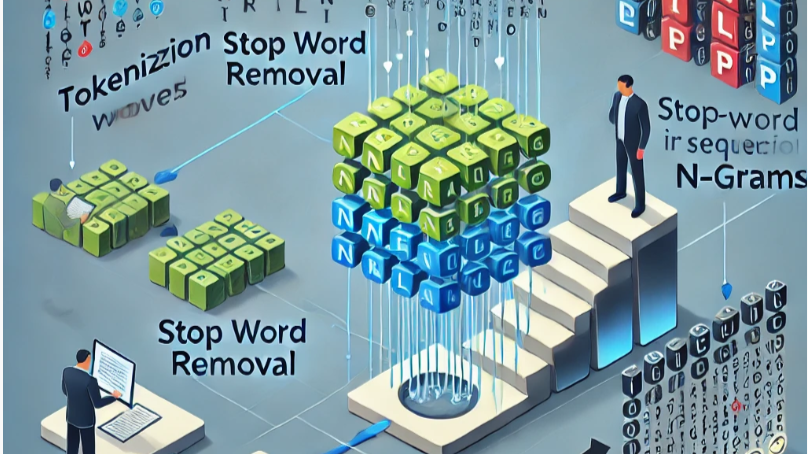

But before machines can do anything with our language, the text has to be prepared in a form they can process. This preparation involves several core techniques: tokenization, stop word removal, n-grams, and morphological analysis (stemming and lemmatization). Think of these steps as teaching a child how to read: starting with recognizing letters, then forming words, understanding sentences, and finally grasping the meanings behind them.

Let's dive deeper into each of these foundational steps, exploring how they work and why they're essential.

1. Breaking Down Sentences: Tokenization

Imagine opening a book written in a foreign language with no spaces between words. How would you begin to understand it? The first step would be to separate the continuous stream of letters into individual words. This is essentially what tokenization does in NLP.

What is Tokenization?

Tokenization is the process of splitting text into smaller units called tokens. Tokens can be words, phrases, or even individual characters. By breaking text into tokens, we make it manageable for machines to process.
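To make the different token sizes concrete, here is a minimal Python sketch. It assumes the simplest possible rules (split on whitespace for words, one token per character), which real tokenizers refine considerably.

```python
text = "brown fox"

# Word-level tokens: split wherever there is whitespace
word_tokens = text.split()

# Character-level tokens: every character, including the space, is a token
char_tokens = list(text)

print(word_tokens)  # ['brown', 'fox']
print(char_tokens)  # ['b', 'r', 'o', 'w', 'n', ' ', 'f', 'o', 'x']
```

The same text yields two word tokens or nine character tokens, so the choice of granularity changes what the machine gets to "see."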

Why is Tokenization Important?

How Does Tokenization Work?

Let's take an example sentence:

"The quick brown fox jumps over the lazy dog."

Tokenizing this sentence at the word level gives us:
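As a rough illustration, the sketch below tokenizes the sentence with a small regular expression. The helper name `word_tokenize_simple` and the pattern are assumptions made for this example, not a standard API. A plain `str.split()` would leave the period glued to "dog.", so the pattern treats punctuation as its own token.

```python
import re

def word_tokenize_simple(text):
    """Split text into word tokens and standalone punctuation marks."""
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark
    return re.findall(r"\w+|[^\w\s]", text)

sentence = "The quick brown fox jumps over the lazy dog."
tokens = word_tokenize_simple(sentence)
print(tokens)
# ['The', 'quick', 'brown', 'fox', 'jumps', 'over', 'the', 'lazy', 'dog', '.']
```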

Now, the machine has individual words it can work with. But tokenization isn't always straightforward. Consider the sentence:

"Can't we meet at 7:00 p.m.?"

Tokenizing this sentence requires handling contractions and punctuation:
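One possible way to handle this sentence is sketched below. The pattern is a hand-written assumption that covers only the cases in this example (a clock time, a dotted abbreviation, and a contraction with a straight apostrophe); it is not a general-purpose tokenizer.

```python
import re

# Alternatives are tried left to right, so the more specific ones come first.
TOKEN_PATTERN = re.compile(r"""
    \d+:\d+              # clock times such as 7:00
  | (?:[A-Za-z]\.){2,}   # dotted abbreviations such as p.m.
  | \w+(?:'\w+)?         # words, optionally with an apostrophe part
  | [^\w\s]              # any other single punctuation mark
""", re.VERBOSE)

def tokenize(text):
    return TOKEN_PATTERN.findall(text)

tokens = tokenize("Can't we meet at 7:00 p.m.?")
print(tokens)
# ["Can't", 'we', 'meet', 'at', '7:00', 'p.m.', '?']
```

Keeping "Can't" as one token is a design choice. Other tokenizers split contractions into two pieces, and which behavior is better depends on the task.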

Challenges in Tokenization

Think of tokenization like cutting a loaf of bread into slices. You can't make a sandwich with the whole loaf—you need manageable pieces.

2. Cleaning Up the Noise: Stop Word Removal

Have you ever tried to focus on a conversation in a noisy room? Filtering out the background noise helps you concentrate on what's important. Similarly, in text processing, we remove words that don't carry significant meaning to focus on the essential parts.

What Are Stop Words?

Stop words are common words that appear very frequently in a language but carry little meaning on their own for many kinds of analysis. Examples in English include "the," "is," "at," "which," and "on."

Why Remove Stop Words?

How Does Stop Word Removal Work?

Continuing with our previous tokens:
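Here is a minimal sketch of the filtering step, applied to the word tokens from the fox sentence. The `STOP_WORDS` set is a tiny illustrative list chosen for this example; NLP libraries ship much longer ones.

```python
# A small, hand-picked stop word list (an assumption for this example)
STOP_WORDS = {"the", "is", "at", "which", "on", "over"}

tokens = ["The", "quick", "brown", "fox", "jumps", "over", "the", "lazy", "dog"]

# Compare in lowercase so that "The" and "the" are treated alike
filtered = [t for t in tokens if t.lower() not in STOP_WORDS]
print(filtered)
# ['quick', 'brown', 'fox', 'jumps', 'lazy', 'dog']
```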

Now, the machine focuses on words that carry more significant meaning.

Think of stop word removal as decluttering your workspace. By removing unnecessary items, you can focus better on the task at hand.

When Not to Remove Stop Words

Sometimes, stop words are essential for understanding context:
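Negation is one such case. The sketch below assumes a stop word list that contains "not" (as many common lists do) and shows how a review loses its meaning once that word is filtered out. The word lists and the helper `remove_stop_words` are made up for this illustration.

```python
STOP_WORDS = {"this", "is", "not"}   # illustrative list that includes "not"
NEGATIONS = {"not", "no", "never"}   # words we may want to protect

def remove_stop_words(tokens, keep=frozenset()):
    """Drop stop words, except those explicitly listed in `keep`."""
    return [t for t in tokens if t not in STOP_WORDS or t in keep]

tokens = ["this", "movie", "is", "not", "good"]

print(remove_stop_words(tokens))                  # ['movie', 'good']
print(remove_stop_words(tokens, keep=NEGATIONS))  # ['movie', 'not', 'good']
```

After naive filtering, a negative review looks like praise. For tasks such as sentiment analysis, it can be safer to keep negations or to skip stop word removal altogether.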

3. Understanding Word Relationships: N-Grams

Have you noticed how certain words often appear together? Phrases like "New York," "machine learning," or "peanut butter and jelly" are more meaningful together than individually.

What Are N-Grams?

An n-gram is a contiguous sequence of 'n' items from a given text. In NLP, these items are usually words. With n = 1 it is called a unigram, with n = 2 a bigram, and with n = 3 a trigram.

Why Use N-Grams?

How Do N-Grams Work?

Using our sentence:

"The quick brown fox jumps over the lazy dog."


Applications of N-Grams

Think of n-grams as phrases or expressions. Recognizing common phrases helps in understanding the intended meaning better than analyzing words individually.
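For the fox sentence used earlier, word n-grams can be generated with a sliding window. This is a minimal sketch; the function name `ngrams` is our own choice for the example, and punctuation is left out for simplicity.

```python
def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "The quick brown fox jumps over the lazy dog".split()

bigrams = ngrams(tokens, 2)
trigrams = ngrams(tokens, 3)

print(bigrams[:3])
# [('The', 'quick'), ('quick', 'brown'), ('brown', 'fox')]
print(trigrams[:2])
# [('The', 'quick', 'brown'), ('quick', 'brown', 'fox')]
print(len(bigrams), len(trigrams))  # 8 7
```

A sentence with 9 words produces 9 - n + 1 n-grams, which is why there are 8 bigrams and 7 trigrams here.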

4. Getting to the Root: Stemming and Lemmatization

Have you ever wondered why "run," "runs," "running," and "ran" are treated as different words by machines? Humans easily understand they are variations of the same word, but machines need help to recognize this.

What is Stemming?

Stemming is the process of reducing words to a root form, the stem, by stripping affixes (most often suffixes) according to simple rules. The resulting stem is not always a real word. It's like chopping off branches to get to the trunk.

What is Lemmatization?

Lemmatization reduces words to their base or dictionary form, called a lemma. Rather than just trimming characters, it draws on a vocabulary and on the word's grammatical role (its part of speech) in context.

Why Use Stemming and Lemmatization?

How Do They Differ?

Example Comparison

Think of stemming as using a machete to roughly cut words down, while lemmatization is like using a scalpel for precise trimming.
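The contrast can be sketched with two deliberately tiny toy functions. Both are assumptions made for illustration: `crude_stem` knows only four suffixes, and `toy_lemmatize` relies on a hand-written lookup table. Established stemmers (such as the Porter stemmer) and dictionary-backed lemmatizers apply far more rules and data.

```python
SUFFIXES = ("ing", "ed", "es", "s")  # checked in this order

# Hand-written dictionary standing in for a real vocabulary
LEMMAS = {"running": "run", "runs": "run", "ran": "run", "studies": "study"}

def crude_stem(word):
    """Chop off the first matching suffix, keeping at least 3 characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[:-len(suffix)]
    return word

def toy_lemmatize(word):
    """Look the word up in the dictionary; fall back to the word itself."""
    return LEMMAS.get(word, word)

for word in ["running", "runs", "ran", "studies"]:
    print(f"{word:8} stem={crude_stem(word):6} lemma={toy_lemmatize(word)}")
# running  stem=runn   lemma=run
# runs     stem=run    lemma=run
# ran      stem=ran    lemma=run
# studies  stem=studi  lemma=study
```

The stemmer is fast but produces non-words like "runn" and "studi" and cannot connect "ran" to "run." The lemmatizer gets these right, but only because it has a dictionary to consult.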

Bringing It All Together: From Text to Insights

Let's see how these steps work in harmony using a practical example.

Sample Text

"I am enjoying learning about Natural Language Processing!"

Step 1: Tokenization

Step 2: Stop Word Removal

Assuming "am" and "about" are stop words:

Step 3: Stemming/Lemmatization

Applying lemmatization:

Step 4: N-Grams (Bigrams)

Now, the machine has a structured and meaningful representation of the text, ready for further analysis like sentiment detection or topic modeling.
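The four steps can be chained into one small pipeline. The sketch below is a simplified illustration with several assumptions of its own: tokens are lowercased, punctuation is dropped during tokenization, the stop word list contains only "am" and "about" as assumed above, and lemmatization uses a two-entry toy lookup that leaves "processing" unchanged.

```python
import re

STOP_WORDS = {"am", "about"}                          # stop words assumed in the example
LEMMAS = {"enjoying": "enjoy", "learning": "learn"}   # toy lookup table

def tokenize(text):
    # Lowercase, then keep runs of letters/digits (punctuation is dropped)
    return re.findall(r"\w+", text.lower())

def remove_stop_words(tokens):
    return [t for t in tokens if t not in STOP_WORDS]

def lemmatize(tokens):
    return [LEMMAS.get(t, t) for t in tokens]

def bigrams(tokens):
    # Pair each token with the one that follows it
    return list(zip(tokens, tokens[1:]))

text = "I am enjoying learning about Natural Language Processing!"

tokens = tokenize(text)
filtered = remove_stop_words(tokens)
lemmas = lemmatize(filtered)
pairs = bigrams(lemmas)

print(tokens)    # ['i', 'am', 'enjoying', 'learning', 'about', 'natural', 'language', 'processing']
print(filtered)  # ['i', 'enjoying', 'learning', 'natural', 'language', 'processing']
print(lemmas)    # ['i', 'enjoy', 'learn', 'natural', 'language', 'processing']
print(pairs[-2:])  # [('natural', 'language'), ('language', 'processing')]
```

Each function takes the previous step's output as its input, so steps can be swapped out or skipped (for example, keeping stop words) without touching the rest.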

Why These Building Blocks Matter

These foundational steps are critical because they:

Conclusion: The First Steps in Machine Language Understanding

By now, you should have a clearer picture of how machines begin to understand human language. It's a step-by-step process that transforms raw text into a structured format that machines can work with.

These building blocks—tokenization, stop word removal, n-grams, and stemming/lemmatization—are essential for anyone venturing into NLP. They set the stage for more advanced tasks like sentiment analysis, machine translation, and even conversational AI.

So the next time you interact with a smart assistant or use predictive text, remember the foundational steps that make these technologies possible.

Ready to Dive Deeper?

If this has piqued your interest, consider exploring how these processed texts feed into machine learning models or how advanced techniques like neural networks build upon these foundations to achieve even more impressive feats in NLP.

Let's continue this exciting journey into the world of machines and language together!