Build Your Own Real-Time Multimodal RAG Applications!

Pavan Belagatti


In the rapidly evolving world of artificial intelligence, multimodal RAG (Retrieval-Augmented Generation) has emerged as a game-changer. This innovative approach combines the power of language models with the ability to process and understand various data types, including text, images, and audio. By leveraging real-time data and vector stores, multimodal RAG enhances the capabilities of Large Language Models (LLMs), enabling more comprehensive and context-aware responses.

This guide will walk you through the process of building a multimodal RAG application using SingleStore, a high-performance database designed to handle complex queries and real-time analytics. It covers the essentials of setting up a development environment like a Notebook, constructing a multimodal RAG pipeline, and integrating it with a multimodal language model. By the end, you will have the know-how to create a powerful application that can process and respond to diverse data inputs, opening up new possibilities in AI-driven applications and services.

What is Multimodal RAG?

Multimodal Retrieval-Augmented Generation (RAG) is an advanced approach that enhances the capabilities of LLMs by incorporating diverse data types, including text, images, audio, and video. This innovative technique extends the core concepts of RAG—indexing, retrieval, and synthesis—into a multifaceted framework. Unlike traditional RAG pipelines that handle only text, multimodal RAG can process and understand various data formats, mirroring human-like perception in machines.
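To make the three stages concrete, here is a minimal sketch of indexing, retrieval, and synthesis over a mixed text-and-image index. It is a toy illustration, not the pipeline built later in this guide: the vectors are hand-written stand-ins for real embeddings, and the names `index`, `retrieve`, and `synthesize` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Indexing: each item keeps its modality, its content, and a vector.
# Assumption: text and images are embedded into the same vector space,
# so one query vector can be compared against both.
index = [
    {"kind": "text", "content": "Quarterly sales summary", "vector": [0.9, 0.1, 0.0]},
    {"kind": "image", "content": "sales_chart.png", "vector": [0.8, 0.2, 0.1]},
    {"kind": "image", "content": "office_photo.jpg", "vector": [0.0, 0.1, 0.9]},
]


def retrieve(query_vector, k=2):
    """Retrieval: return the k items most similar to the query vector."""
    ranked = sorted(
        index,
        key=lambda item: cosine_similarity(query_vector, item["vector"]),
        reverse=True,
    )
    return ranked[:k]


def synthesize(question, hits):
    """Synthesis: assemble the retrieved context into a prompt for an LLM."""
    context = "\n".join(f"[{hit['kind']}] {hit['content']}" for hit in hits)
    return f"Context:\n{context}\n\nQuestion: {question}"


hits = retrieve([1.0, 0.0, 0.0])
prompt = synthesize("How did sales develop?", hits)
```

In a real application the vectors would come from an embedding model and the prompt would be sent to a multimodal LLM together with the retrieved images.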

The primary advantage of multimodal RAG lies in its ability to work with a wide variety of data, including tables, graphs, charts, and diagrams. This versatility allows for a more comprehensive interpretation of information and a better understanding of context, which improves the accuracy and robustness of predictions and leads to more context-aware and informative responses.

Multimodal RAG applications span various domains and tasks.

These applications demonstrate the versatility of multimodal RAG in handling complex, real-world scenarios where information is spread across different modalities. By integrating various data types, such as text and images, into LLMs, multimodal RAG enhances their ability to generate more holistic responses to user queries. This approach represents a significant step towards creating AI systems that can perceive and interact with the world in a manner more akin to human cognition.

Multimodal RAG Tutorial

Let’s create a multimodal RAG setup. We will be using a publicly available CSV file that has both text and images. First, we will create a vector store, using SingleStore as our vector database. To get started, sign up to SingleStore and get your free account.

We will then fetch images from our document, create embeddings, and store these embeddings in our database. Finally, we will query the database using natural language and use a multimodal LLM to interpret the images.
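The last step, asking a multimodal LLM to interpret a retrieved image, could look roughly like the sketch below. It assumes the Anthropic Messages API format (this guide stores an Anthropic API key later on), where an image is sent as a base64-encoded content block next to the text question. The helper name `build_image_message` is hypothetical, and the three bytes used here are a placeholder rather than a real picture.

```python
import base64


def build_image_message(image_bytes, question, media_type="image/jpeg"):
    """Build one user message that pairs an image with a text question.

    Assumption: the target is the Anthropic Messages API, which accepts
    base64-encoded image blocks inside the message content list.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {
                "type": "image",
                "source": {
                    "type": "base64",
                    "media_type": media_type,
                    "data": encoded,
                },
            },
            {"type": "text", "text": question},
        ],
    }


# Placeholder bytes (the first bytes of a JPEG header), not a real image.
message = build_image_message(b"\xff\xd8\xff", "What does this image show?")
```

The resulting dictionary would go into the `messages` list of a request to a vision-capable model; which model to use is up to you and is not fixed by this sketch.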

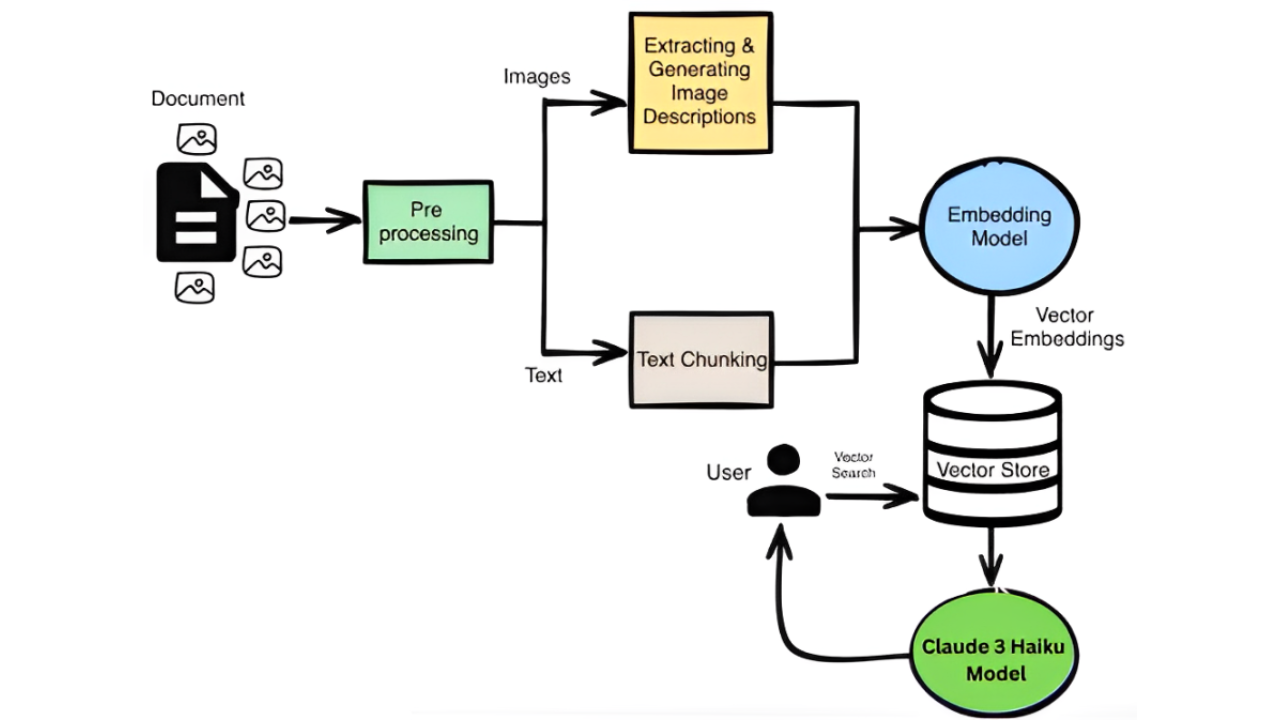

Below is a simple multimodal RAG workflow diagram

Once you sign up for SingleStore, you will have a workspace and a database ready by default. If you sign up with free credits, you will have to create your own workspace and database.

Go to ‘Data Studio’ and create a new Notebook. A Notebook in SingleStore is a Jupyter Notebook where you can write code in Python and SQL to interact with the databases.

Give your notebook a name; this is where we will work with our code.

Once you have created the notebook and land on your notebook dashboard, make sure to select the workspace and the related database you just created from the top menu.

Let’s start working with our multimodal RAG setup. Follow along to understand clearly.

You can find the complete notebook code here.

First things first: install the required libraries, make the connection to the database, and then store your Anthropic API key as a secret.

Install the required libraries

Make connection to the database
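As a rough sketch of this step, the snippet below builds a connection URL and hands it to the `singlestoredb` Python client. The user, password, host, and database name are placeholders, and the helper names `build_connection_url` and `connect` are hypothetical. The client import happens inside the function, so the URL helper works even where the package is not installed.

```python
import os
from urllib.parse import quote


def build_connection_url(user, password, host, database, port=3306):
    """Return a URL of the form user:password@host:port/database.

    The user and password are percent-encoded so that characters such as
    '@' or '/' do not break URL parsing.
    """
    return f"{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}/{database}"


def connect(url):
    """Open a connection with the singlestoredb client (imported lazily)."""
    import singlestoredb as s2

    return s2.connect(url)


# Placeholder credentials; replace them with your own workspace details.
url = build_connection_url("admin", "p@ss/word", "svc-example.singlestore.com", "multimodal_db")

# Setting this environment variable lets libraries that read
# SINGLESTOREDB_URL pick up the same connection settings.
os.environ["SINGLESTOREDB_URL"] = url
```

Calling `connect(url)` with real credentials returns a connection object whose cursor can run SQL against the selected database.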

Store the Anthropic API key as a secret

How to store secrets in SingleStore and use them in your Notebooks?

It is easy. Go to Data Studio, where you will find the secrets tab; that is where you can keep your secret keys securely.
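However the key is stored, the code should read it at runtime instead of hard-coding it. The sketch below uses an environment variable as a stand-in for the notebook secret, because the notebook's own secret helper is not reproduced in this guide. The function name `load_api_key` is hypothetical; `ANTHROPIC_API_KEY` is the variable name the Anthropic SDK looks for by default.

```python
import os


def load_api_key(name="ANTHROPIC_API_KEY"):
    """Read an API key from the environment and fail loudly if it is missing.

    Assumption: the secret has been exposed to the process as an
    environment variable with the given name.
    """
    key = os.environ.get(name, "").strip()
    if not key:
        raise RuntimeError(
            f"{name} is not set; add it as a secret or environment variable first."
        )
    return key
```

Failing early with a clear message is a deliberate choice here: a missing key is easier to diagnose at startup than as an authentication error in the middle of a query.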

Use LangChain, specifying the embedding model and the vector store
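A hedged sketch of this step is shown below. It assumes the `SingleStoreDB` vector store class from the `langchain_community` package and takes the embedding model as a parameter, since the surviving text does not say which model the notebook uses. The function name `build_vector_store` and the table name `multimodal_embeddings` are hypothetical.

```python
def build_vector_store(embedding, table_name="multimodal_embeddings"):
    """Create a LangChain vector store backed by a SingleStore table.

    Assumptions: `embedding` is any LangChain embeddings object, and the
    connection settings are supplied through the SINGLESTOREDB_URL
    environment variable. The import is done lazily so that this module
    loads even where LangChain is not installed.
    """
    from langchain_community.vectorstores import SingleStoreDB

    return SingleStoreDB(embedding, table_name=table_name)
```

Passing the embedding model in as an argument keeps the choice of model separate from the storage setup, so you can swap models without touching the database code.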

See the table name created

See the indexes created
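These two checks can be sketched with plain SQL over a database connection. The helpers below assume a DB-API style connection whose cursor returns rows as tuples; the names `list_tables` and `list_indexes` are hypothetical. The table name is validated before it is placed into the statement, because identifiers cannot be passed as query parameters.

```python
import re

# Only plain identifiers are accepted, to keep the SQL statement safe.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def list_tables(conn):
    """Return the names of all tables in the current database."""
    cur = conn.cursor()
    try:
        cur.execute("SHOW TABLES")
        return [row[0] for row in cur.fetchall()]
    finally:
        cur.close()


def list_indexes(conn, table_name):
    """Return the index rows reported for one table."""
    if not _IDENTIFIER.match(table_name):
        raise ValueError(f"Unexpected table name: {table_name!r}")
    cur = conn.cursor()
    try:
        cur.execute(f"SHOW INDEXES FROM `{table_name}`")
        return list(cur.fetchall())
    finally:
        cur.close()
```

In a notebook you would pass in the connection opened earlier and print the results to confirm that the vector store created its table and indexes.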

Download the images, put them in a dataframe, create vector embeddings, and store them in our vector database.
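The first half of this step, reading the CSV and fetching the images, might look like the sketch below. It uses only the standard library; the column names `caption` and `image_url` and the sample row are made up for illustration and will differ from the real CSV file. The helper names `read_image_rows` and `download_image` are hypothetical.

```python
import csv
import io
from urllib.request import urlopen


def read_image_rows(csv_text, url_column="image_url", text_column="caption"):
    """Parse CSV text and keep only the rows that have an image URL."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = []
    for row in reader:
        url = (row.get(url_column) or "").strip()
        if url:
            rows.append({"url": url, "text": (row.get(text_column) or "").strip()})
    return rows


def download_image(url, timeout=10):
    """Download one image and return its raw bytes."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()


# Made-up sample data; the second row has no image and is skipped.
sample = "caption,image_url\nA red bicycle,https://example.com/bike.jpg\nNo picture here,\n"
rows = read_image_rows(sample)
```

The list of dictionaries can be loaded into a dataframe as is, and each downloaded image can then be passed to the embedding model before the vectors are written to the vector store.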

You can try this tutorial; the complete notebook code is here.

Here is my complete video on building a multimodal RAG setup.
