Binary Quantization

Rohan Paul

I build & write AI stuff. → Join 46K+ others on my X / Twitter. AI Engineer and Entrepreneur (Ex Investment Banking).

The buzz surrounding Binary Quantization has been impossible to ignore, especially if you've been keeping tabs on recent discussions in tech circles. ??

The concept itself isn't new, but what's reignited interest is the announcement from Cohere regarding their latest support enhancements for int8 and binary embeddings in their Cohere embed v3.

?? First let's quickly see why we need Embeddings?

Embeddings are one of the most versatile tools in natural language processing, supporting a wide variety of settings and use cases. In essence, embeddings are numerical representations of more complex objects, like text, images, audio, etc. Specifically, the objects are represented as n-dimensional vectors.

After transforming the complex objects, you can determine their similarity by calculating the similarity of the respective embeddings! This is crucial for many use cases: it serves as the backbone for recommendation systems, retrieval, one-shot or few-shot learning, outlier detection, similarity search, paraphrase detection, clustering, classification, and much more.

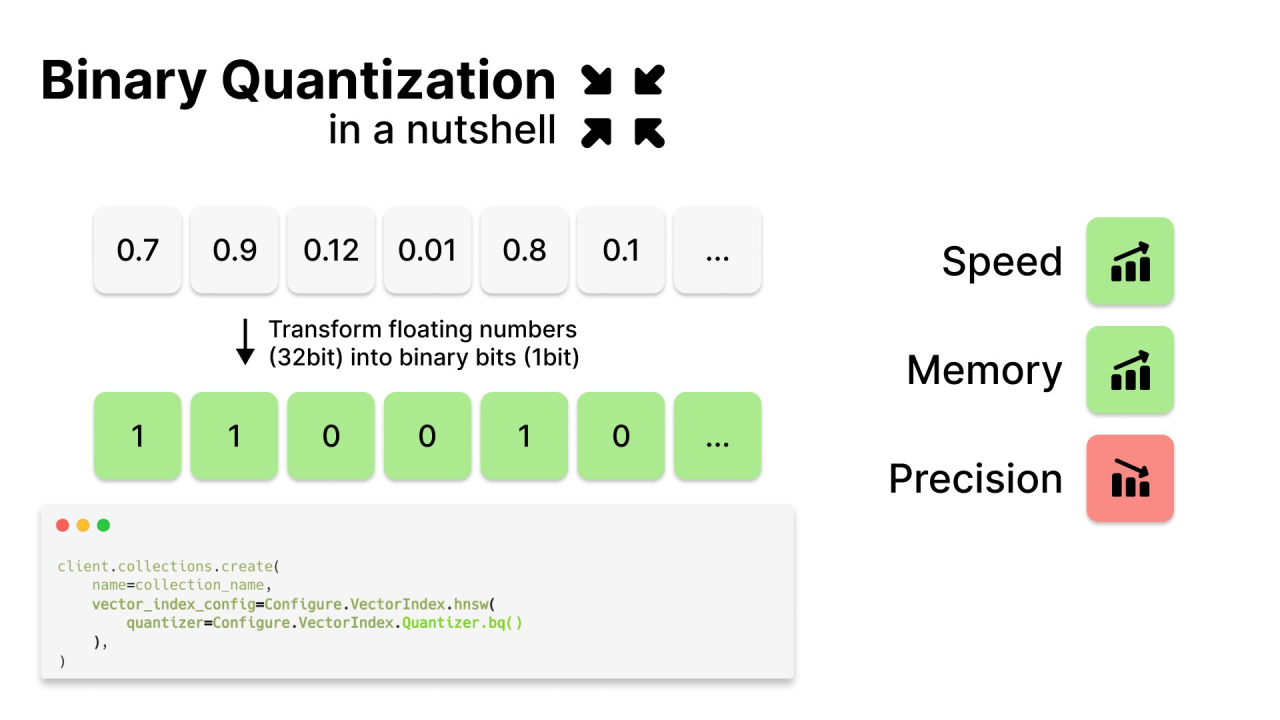

?? Binary Quantization for embeddings

Unlike quantization in models where you reduce the precision of weights, quantization for embeddings refers to a post-processing step for the embeddings themselves. In particular, binary quantization refers to the conversion of the float32 values in an embedding to 1-bit values, resulting in a 32x reduction in memory and storage usage.

? Binary quantization example

Vector embeddings are usually generated by embedding models, such as Cohere’s embed v3, and a single vector embeddings will in the following form.

[0.056, -0.128, -0.029, 0.047, …, 0.135]

To quantize float32 embeddings to binary, we simply threshold normalized embeddings at 0

That is, because these embeddings have very small absolute numbers close to zero, you can turn them into a binary vector:

1: If the value is greater or equal to 0.

0: If the value is smaller than 0.

So that you get something like this.

[1, 0, 0, …, 1]

Here an example of Binary quantization with sentence transformers.

?? So basically why does binary quantization reduce vector embedding size soo much?

It's kind of like turning a colored image into a black and white image.

领英推荐

By converting the floating point numbers, which are stored in 32 bits, into a single bit, you only need 1/32nd of memory space to store a binarized vector. This can lead to increased search speed and reduced storage costs.

And because vector embeddings are usually high-dimensional, you can still get meaningful similarity measures for vector search. ??

Now the question is how to calculate the similarity of vectors which has been binarized ?

?? We can use the Hamming Distance to efficiently perform retrieval with these binary embeddings. This is simply the number of positions at which the bits of two binary embeddings differ. The lower the Hamming Distance, the closer the embeddings, and thus the more relevant the document. A huge advantage of the Hamming Distance is that it can be easily calculated with 2 CPU cycles, allowing for blazingly fast performance.

?? Why Binary Quantization (BQ) is particularly suitable for high-dimensional vectors.

Simply because, in higher dimensional space, even with BQ, the vector can retain a high degree of information.

First, noting the basics, the number of elements in a single vector represents the total dimensionality of that vector. Each element of a vector represents a coordinate in a particular dimension, so a vector with n elements is said to inhabit an n-dimensional space.

When we refer to a vector's dimensionality, we are essentially describing how many degrees of freedom or independent directions of information it contains. For example, a 3-dimensional vector might represent a point in 3D space with coordinates along the X, Y, and Z axes.

?? In high-dimensional spaces, vectors possess a large number of elements. Despite each element being aggressively quantized to a single bit, the overall vector retains substantial aggregate information. The high dimensionality ensures that, even in binary form, the relationships and structures inherent to the data can be preserved to a useful extent.

?? This is on the assumption that the essential information of the vector is distributed across its many dimensions, allowing the binary-reduced vector to approximate the original's informational content in aggregate, despite the severe reduction in precision per dimension.

? What are the drawbacks ?

Firstly, the adoption of binary quantization impacts the accuracy and precision of your search results. Although you can still retrieve relevant outcomes, the nuance and detail provided by higher-resolution data can be lost, leading to less precise results.

Furthermore, binary quantization is a one-way street—once you've converted your data into binary form, there's no turning back. This process is a form of lossy compression, meaning once the data has undergone quantization, the original, detailed information is irretrievably lost.

? Is there anyway to reduce the Lossiness from BQ

Party yes, e.g. Weaviate compensates for this by overfetching vectors from the index, and then rescoring the vectors in the uncompressed space. And this technique has been found to compensate to some extent for the lossiness of BQ

.