Behind the Rankings: LLM Model Evaluation in Benchmark Datasets

Jayant Kumar

Principal ML Scientist at Adobe | Technical Advisor at Preffect | Multimodal AI | Large language models and Knowledge Graph applications

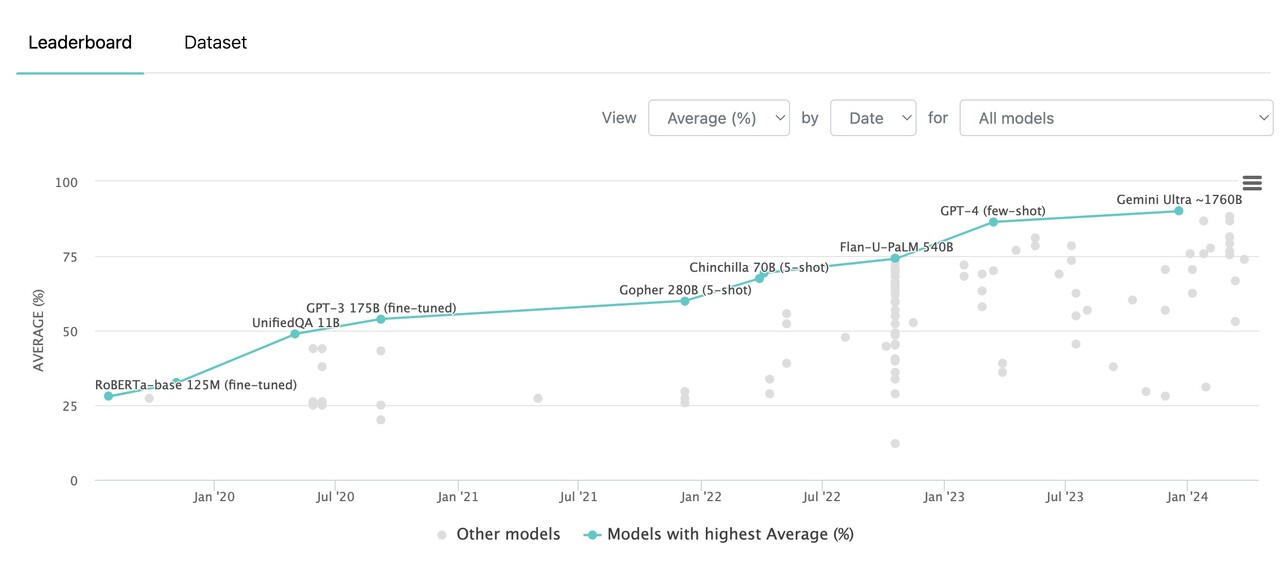

Over the past few days, there's been a flurry of posts discussing the newly unveiled Llama 3 model and its impressive performance on benchmark datasets like MMLU and HumanEval. It's worth delving into these benchmarks and exploring some of the nuances and potential pitfalls associated with relying too heavily on them and the reported scores from various companies.

MMLU, for instance, encompasses a wide array of 57 tasks spanning mathematics, history, computer science, and even law [Ref1, Ref2].

Each task presents several questions with four answer choices, one of which is correct. LLMs receive scores based on their accuracy in providing correct answers.

While the accessibility of this dataset and its detailed topic breakdown may seem advantageous, it raises concerns about potential manipulation by companies to optimize LLM performance.

Moreover, literature highlights additional concerns. A recent paper [Ref3] delves into the sensitivity of LLM leaderboards and cautions against using benchmark rankings as the sole basis for model selection. It elucidates how minor alterations in the design and evaluation of the MMLU dataset can lead to significant shifts in rankings. Additionally, LLMs exhibit biases toward specific scoring methods for answer choices in multiple-choice questions (MCQs).

By acknowledging these issues and engaging in critical discourse, we can foster a more nuanced understanding of the strengths and limitations of benchmark datasets and better inform our approach to evaluating AI models.