Applications of Neural Network Activation Functions in Various Industries

Below, I explore the industries where these activation functions are used effectively, with specific examples for each.

1. Healthcare

Activation Functions: ReLU, ELU, and GELU

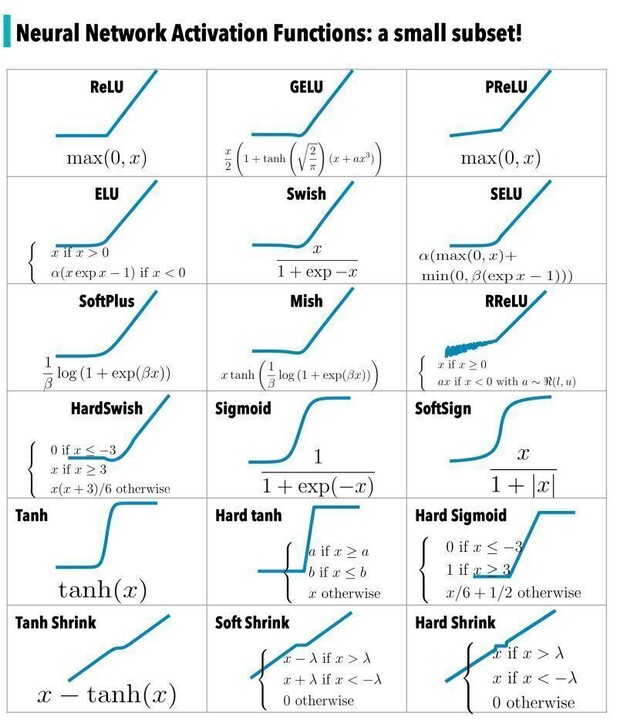

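In healthcare, deep learning models for medical image analysis often use ReLU (Rectified Linear Unit) and ELU (Exponential Linear Unit). For instance, convolutional neural networks (CNNs) that analyze MRI scans or X-rays commonly use ReLU in their hidden layers. ReLU is cheap to compute and does not saturate for positive inputs, which helps deep networks learn useful image features and can support diagnostic accuracy. ELU behaves like ReLU for positive inputs but returns small negative values instead of zero for negative ones, which keeps mean activations closer to zero. GELU (Gaussian Error Linear Unit) is the activation used in Transformer models such as BERT. It is common in natural language processing (NLP) applications for clinical text analysis, where it helps models extract meaningful information from unstructured notes and reports.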
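To make the differences concrete, here is a minimal scalar sketch of the three functions in plain Python, using only the standard library. The function names and the ELU default `alpha=1.0` are illustrative choices. In practice you would use the vectorized versions built into a deep learning framework.

```python
import math


def relu(x: float) -> float:
    """ReLU: passes positive inputs through unchanged and maps negatives to 0."""
    return max(0.0, x)


def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: identity for x > 0, smooth exponential curve toward -alpha for x <= 0."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


# Compare the three functions on a few sample pre-activation values.
for v in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={v:+.1f}  relu={relu(v):.4f}  elu={elu(v):.4f}  gelu={gelu(v):.4f}")
```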

2. Finance

Activation Functions: Sigmoid and SoftPlus

In finance, Sigmoid and SoftPlus appear frequently in credit scoring and risk models. Sigmoid maps any real-valued score to a probability between 0 and 1. That makes it a natural output activation for binary classification tasks, such as deciding whether to approve or deny a loan based on applicant data. SoftPlus, defined as log(1 + e^x), is a smooth approximation of ReLU whose output is always positive. This suits risk-assessment models that predict non-negative quantities such as potential losses. Because SoftPlus is differentiable everywhere, it also behaves well in gradient-based optimization.
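Here is a minimal sketch of how the two functions could sit at the end of such models. It assumes hypothetical raw scores (`approval_logit`, `loss_logit`) coming out of the last layer. The 0.5 approval threshold is also an assumption, used only as an illustrative cut-off.

```python
import math


def sigmoid(z: float) -> float:
    """Maps any real-valued score to a probability in (0, 1)."""
    # Split by sign so math.exp never overflows for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softplus(z: float) -> float:
    """Smooth approximation of ReLU: log(1 + e^z), always > 0."""
    # Numerically stable form: max(z, 0) + log(1 + e^-|z|).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


# Hypothetical raw scores from the last layer of a credit model.
approval_logit = 1.2
loss_logit = -0.7

p_approve = sigmoid(approval_logit)               # probability of approval
decision = "approve" if p_approve >= 0.5 else "deny"  # illustrative threshold
expected_loss = softplus(loss_logit)              # guaranteed non-negative

print(f"P(approve) = {p_approve:.3f} -> {decision}")
print(f"predicted loss (non-negative) = {expected_loss:.3f}")
```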

3. Retail

Activation Functions: Swish and Mish

The retail industry uses Swish and Mish in recommendation systems that analyze customer behavior and preferences. Both are smooth, non-monotonic functions. Swish is x · sigmoid(βx), often with β = 1, and Mish is x · tanh(SoftPlus(x)). Each lets small negative values through instead of zeroing them as ReLU does. Swish can help personalization models capture complex relationships between customer attributes, improving the targeting of marketing campaigns. Mish can likewise be applied in demand forecasting, helping retailers predict inventory needs from historical sales data.
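The sketch below defines both functions and applies them in a single fully connected layer over a customer feature vector. The features, weights, and the `dense` helper are hypothetical and chosen only for illustration. A real recommender would learn its weights from data and use a deep learning framework.

```python
import math


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def softplus(x: float) -> float:
    # Numerically stable log(1 + e^x).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def swish(x: float, beta: float = 1.0) -> float:
    """Swish: x * sigmoid(beta * x); beta = 1 gives the SiLU variant."""
    return x * sigmoid(beta * x)


def mish(x: float) -> float:
    """Mish: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


def dense(inputs, weights, bias, activation):
    """One fully connected layer: activation(w . x + b) for each output unit."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, bias)
    ]


# Hypothetical, already-scaled customer features:
# [visits per week, average basket size, days since last purchase]
customer = [0.8, -0.3, 1.5]
# Hypothetical weights for a 2-unit hidden layer (in practice, learned from data).
W = [[0.5, -0.2, 0.1], [-0.4, 0.9, -0.3]]
b = [0.0, 0.1]

print("swish layer:", dense(customer, W, b, swish))
print("mish layer: ", dense(customer, W, b, mish))
```

Note that the second unit has a negative pre-activation, yet both functions still pass a small negative value instead of clipping it to zero.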


4. Automotive

Activation Functions: Tanh and Hard Sigmoid

In the automotive sector, particularly in autonomous driving, Tanh and Hard Sigmoid are used in sensor fusion and control systems. Tanh squashes its input into the range (-1, 1) with an output centered at zero. This keeps signals from different sensors on a comparable, bounded scale before they are combined. Hard Sigmoid is a piecewise-linear approximation of Sigmoid that needs no exponential. It therefore suits real-time systems where computational efficiency is crucial and the vehicle must respond quickly to changes in its environment.
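Here is a minimal sketch of both ideas, assuming three hypothetical, pre-scaled sensor readings. Tanh bounds each reading before a weighted average, and Hard Sigmoid turns the fused signal into a gate value in [0, 1]. Definitions of Hard Sigmoid differ between libraries. This one uses clip(x / 6 + 0.5, 0, 1), the form used by PyTorch's `Hardsigmoid`. The sensor names, weights, and gain are illustrative assumptions.

```python
import math


def hard_sigmoid(x: float) -> float:
    """Piecewise-linear sigmoid approximation: clip(x / 6 + 0.5, 0, 1).

    Needs only a division, an addition, and two comparisons (no exponential).
    """
    return min(1.0, max(0.0, x / 6.0 + 0.5))


def fuse(readings, weights):
    """Squash each raw reading into (-1, 1) with tanh, then take a weighted average."""
    squashed = [math.tanh(r) for r in readings]
    return sum(w * s for w, s in zip(weights, squashed)) / sum(weights)


# Hypothetical pre-scaled readings (e.g. lidar, radar, camera) for one signal,
# plus hypothetical trust weights for each sensor.
readings = [2.5, 1.8, -0.4]
weights = [0.5, 0.3, 0.2]

fused = fuse(readings, weights)
gate = hard_sigmoid(4.0 * fused)  # hypothetical gain of 4.0; gate lies in [0, 1]
print(f"fused signal = {fused:.3f}, gate = {gate:.3f}")
```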

5. Telecommunications

Activation Functions: PReLU and RReLU

Telecommunication companies use PReLU (Parametric ReLU) and RReLU (Randomized ReLU) in network optimization and predictive maintenance models. PReLU learns the slope applied to negative inputs during training. This helps it model non-linear relationships in network traffic data and supports better resource allocation. RReLU instead samples that slope at random during training and fixes it to an average value at inference time. This randomness acts as a regularizer and can make predictive models for system failures more robust.
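The sketch below shows the mechanical difference between the two. For PReLU, plain gradient descent learns the negative slope `a` on a hypothetical toy dataset. For RReLU, the slope is sampled during training and replaced by its mean at inference. The defaults (initial `a = 0.25`, RReLU bounds 1/8 and 1/3) mirror PyTorch's `nn.PReLU` and `nn.RReLU`. The data and learning rate are illustrative.

```python
import random


def prelu(x: float, a: float) -> float:
    """PReLU: identity for x >= 0, learnable slope `a` for x < 0."""
    return x if x >= 0 else a * x


def prelu_grad_a(x: float) -> float:
    """d prelu(x, a) / da: 0 for x >= 0, x for x < 0."""
    return 0.0 if x >= 0 else x


def rrelu(x: float, lower: float = 1 / 8, upper: float = 1 / 3,
          training: bool = True, rng=None) -> float:
    """RReLU: random negative slope in [lower, upper] while training, mean slope otherwise."""
    if x >= 0:
        return x
    slope = (rng or random).uniform(lower, upper) if training else (lower + upper) / 2
    return slope * x


# Hypothetical toy data: negative inputs whose targets follow a slope of 0.3.
samples = [(-2.0, -0.6), (-1.0, -0.3), (1.5, 1.5)]
a, lr = 0.25, 0.05
for _ in range(200):
    # Gradient of the summed squared error with respect to the slope a.
    grad = sum(2 * (prelu(x, a) - t) * prelu_grad_a(x) for x, t in samples)
    a -= lr * grad
print(f"learned PReLU slope a = {a:.3f}")

rng = random.Random(0)
print("RReLU (train):", [round(rrelu(-1.0, rng=rng), 3) for _ in range(3)])
print("RReLU (eval): ", rrelu(-1.0, training=False))
```

With this data, the learned slope settles at 0.3, the slope the negative samples were generated with. The training-mode RReLU outputs vary from call to call, while the evaluation-mode output is deterministic.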

6. Energy Sector

Activation Functions: SoftSign and Tanh Shrink

In the energy sector, particularly in smart grid applications, SoftSign and Tanh Shrink are used in predictive analytics for energy consumption forecasting. SoftSign, defined as x / (1 + |x|), saturates more slowly than Tanh, so its gradients shrink less for large inputs. This can help when training models that predict energy demand from factors such as weather and historical usage patterns. Tanh Shrink, defined as x - tanh(x), stays close to zero for small inputs and grows almost linearly for large ones. As a result, it can help anomaly-detection models suppress minor fluctuations in energy consumption and emphasize large deviations.
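Here is a minimal sketch of both properties. It assumes hypothetical hourly forecast residuals (actual minus forecast demand, in scaled units) and an illustrative anomaly threshold of 0.5. The first loop compares the gradients of SoftSign and Tanh at increasingly large inputs. The second part uses Tanh Shrink to score residuals so that only the large deviation is flagged.

```python
import math


def softsign(x: float) -> float:
    """SoftSign: x / (1 + |x|), bounded in (-1, 1)."""
    return x / (1.0 + abs(x))


def softsign_grad(x: float) -> float:
    """Derivative of SoftSign: decays polynomially as |x| grows."""
    return 1.0 / (1.0 + abs(x)) ** 2


def tanh_grad(x: float) -> float:
    """Derivative of tanh: decays exponentially as |x| grows."""
    return 1.0 - math.tanh(x) ** 2


def tanhshrink(x: float) -> float:
    """Tanh Shrink: x - tanh(x); near 0 for small x, roughly x - 1 for large positive x."""
    return x - math.tanh(x)


# Gradient comparison at increasingly large inputs.
for x in (1.0, 3.0, 6.0):
    print(f"x={x}: softsign'={softsign_grad(x):.5f}  tanh'={tanh_grad(x):.5f}")

# Hypothetical hourly residuals (actual - forecast demand, scaled units).
residuals = [0.1, -0.2, 0.05, 3.0, -0.15]
scores = [abs(tanhshrink(r)) for r in residuals]
flagged = [i for i, s in enumerate(scores) if s > 0.5]  # illustrative threshold
print("anomaly scores:", [round(s, 4) for s in scores])
print("flagged hours:", flagged)
```

With these inputs, only the residual of 3.0 crosses the threshold, because Tanh Shrink maps the small residuals to values below 0.003.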