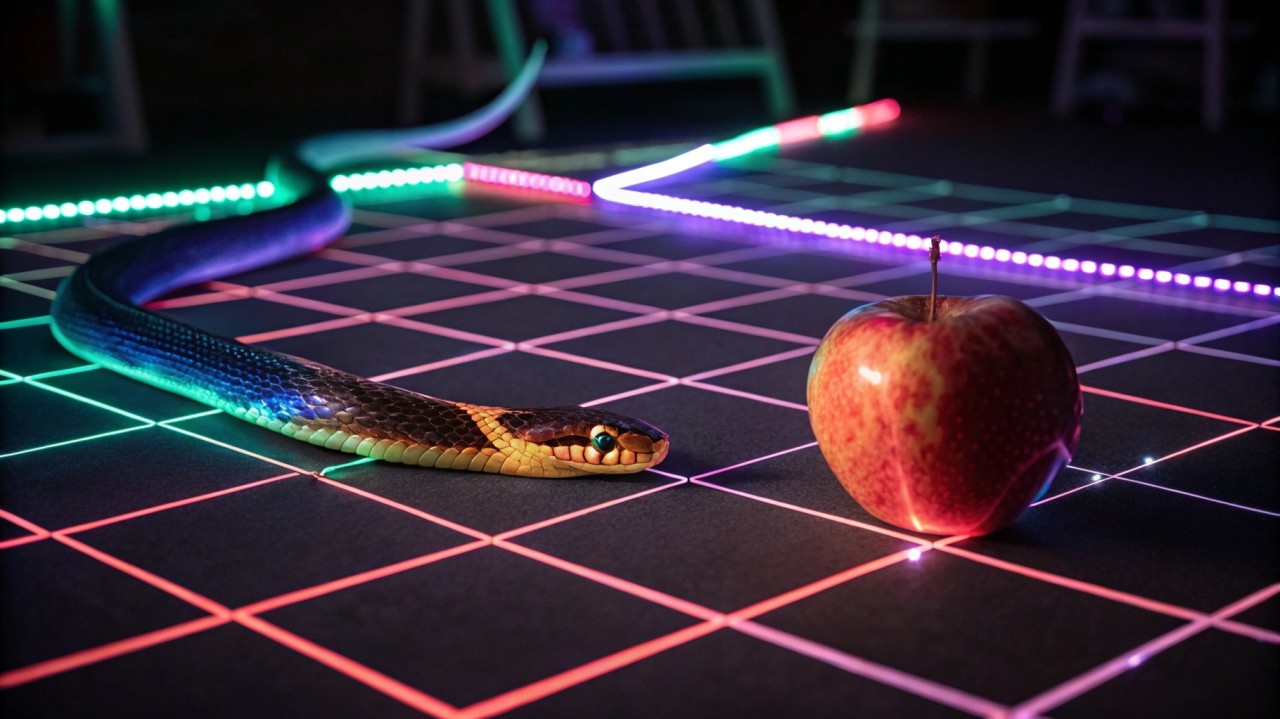

AI Snake Game Using Reinforcement Learning

Introduction

Reinforcement Learning (RL) is a branch of machine learning where an agent learns by interacting with an environment to maximize rewards. In this project, we will use RL to train an AI agent to play the classic Snake game.

Goal: Train an AI agent to play Snake using a Deep Q-Network (DQN), a reinforcement learning algorithm that uses a neural network to estimate Q-values.
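
For readers who want the update rule behind DQN, here is a sketch of the standard one-step formulation. The symbols are introduced here for illustration: s and a are the current state and action, r is the reward, s' is the next state, d is 1 when the episode has ended and 0 otherwise, γ is the discount factor, and θ are the network weights. It assumes a single network is used for both the prediction and the target, which is what the training code in Step 5 does.

```latex
y = r + (1 - d)\,\gamma \max_{a'} Q(s', a'; \theta),
\qquad
L(\theta) = \bigl(Q(s, a; \theta) - y\bigr)^2
```

Minimizing L(θ) moves the predicted value of the chosen action toward the reward plus the discounted value of the best next action.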

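1. Understanding Reinforcement Learning in the Snake Game

- Agent: The snake in the game.
- Environment: The game board.
- State: The positions of the snake and the food (the code below uses the head and food coordinates).
- Action: Move (Up, Down, Left, Right).
- Reward: A penalty of -10 for hitting a wall or the snake's own body, and a positive reward otherwise, so the agent is pushed to stay alive and reach the food.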
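
The four actions can be written as displacement vectors on a 10-pixel grid. The sketch below uses the same vectors as the agent code later in this article; the names ACTION_VECTORS and apply_action are hypothetical and only serve this illustration. Note that screen coordinates in pygame grow downward, so (0, 10) moves the head down.

```python
# Hypothetical names; the vectors match the ones used by the agent later on.
ACTION_VECTORS = {
    "Right": (10, 0),
    "Left": (-10, 0),
    "Down": (0, 10),   # screen y grows downward in pygame
    "Up": (0, -10),
}


def apply_action(head, action_name):
    """Return the head position after moving one 10-pixel cell."""
    x, y = head
    dx, dy = ACTION_VECTORS[action_name]
    return (x + dx, y + dy)


print(apply_action((100, 100), "Right"))  # (110, 100)
print(apply_action((100, 100), "Up"))     # (100, 90)
```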

2. Steps to Build an AI Snake Game Using RL

Step 1: Install Dependencies

The leading ! runs the command from a notebook cell; drop it in a regular terminal.

    !pip install pygame numpy torch matplotlib gym

Step 2: Import Libraries

    import pygame
    import random
    import numpy as np
    import torch
    import torch.nn as nn
    import torch.optim as optim
    from collections import deque

Step 3: Create Snake Game Environment

    class SnakeGame:
        def __init__(self, width=400, height=400):
            self.width = width
            self.height = height
            self.screen = None  # pygame window, created on the first render() call
            self.reset()

        def reset(self):
            # Head first; the snake starts three cells long.
            self.snake = [(100, 100), (90, 100), (80, 100)]
            self.food = self.place_food()
            self.direction = (10, 0)
            self.score = 0

        def place_food(self):
            # Pick a random grid cell that is not occupied by the snake.
            while True:
                food = (random.randint(0, self.width // 10 - 1) * 10,
                        random.randint(0, self.height // 10 - 1) * 10)
                if food not in self.snake:
                    return food

        def step(self, action):
            x, y = self.snake[0]
            dx, dy = action
            new_head = (x + dx, y + dy)

            if new_head == self.food:
                # Grow by one cell: add the new head and keep the tail.
                self.snake.insert(0, new_head)
                self.food = self.place_food()
                self.score += 10
                return 10, False  # Larger reward for eating food
            elif new_head in self.snake or not (0 <= new_head[0] < self.width
                                                and 0 <= new_head[1] < self.height):
                return -10, True  # Game over: hit the body or a wall
            else:
                # Ordinary move: add the new head and drop the tail.
                self.snake.insert(0, new_head)
                self.snake.pop()
                return 1, False  # Reward and game status

        def get_state(self):
            head_x, head_y = self.snake[0]
            food_x, food_y = self.food
            return np.array([head_x, head_y, food_x, food_y], dtype=np.float32)

        def render(self):
            # Create the window once instead of on every frame.
            if self.screen is None:
                pygame.init()
                self.screen = pygame.display.set_mode((self.width, self.height))
            self.screen.fill((0, 0, 0))
            for segment in self.snake:
                pygame.draw.rect(self.screen, (0, 255, 0), (*segment, 10, 10))
            pygame.draw.rect(self.screen, (255, 0, 0), (*self.food, 10, 10))
            pygame.display.flip()
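
The game-over condition inside step can be pulled out into a small standalone function, which makes it easy to check by hand. This is only a sketch: the name is_collision is hypothetical, and it assumes the same 400x400 board and 10-pixel cells as the environment above.

```python
def is_collision(head, snake, width=400, height=400):
    """Return True if `head` hits the snake body or lies outside the board."""
    x, y = head
    out_of_bounds = not (0 <= x < width and 0 <= y < height)
    return out_of_bounds or head in snake


snake = [(100, 100), (90, 100), (80, 100)]
print(is_collision((110, 100), snake))  # False: free cell to the right
print(is_collision((90, 100), snake))   # True: reversing into the body
print(is_collision((400, 100), snake))  # True: x == width is off the board
```

The second case shows a side effect of this rule: choosing the action opposite to the current heading ends the game immediately.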

Step 4: Create the DQN Model

    class DQN(nn.Module):
        def __init__(self):
            super().__init__()
            self.fc1 = nn.Linear(4, 64)   # 4 inputs: head x/y and food x/y
            self.fc2 = nn.Linear(64, 64)
            self.fc3 = nn.Linear(64, 4)   # 4 outputs: one Q-value per action

        def forward(self, x):
            x = torch.relu(self.fc1(x))
            x = torch.relu(self.fc2(x))
            return self.fc3(x)
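
To get a feel for how small this network is, the number of trainable parameters can be worked out by hand. The sketch below assumes each fully connected layer has a weight matrix plus one bias per output unit, which is how nn.Linear behaves by default; the helper name linear_params is hypothetical.

```python
def linear_params(in_features, out_features):
    """Weights (in * out) plus one bias per output unit."""
    return in_features * out_features + out_features


# Layer shapes of the 4 -> 64 -> 64 -> 4 network above.
layer_sizes = [(4, 64), (64, 64), (64, 4)]
total = sum(linear_params(i, o) for i, o in layer_sizes)
print(total)  # 4740
```

The breakdown is 320 + 4160 + 260 parameters, so the model trains comfortably on a CPU.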

Step 5: Define the DQN Agent

    # Index i of this list corresponds to output i of the network.
    ACTIONS = [(10, 0), (-10, 0), (0, 10), (0, -10)]

    class DQNAgent:
        def __init__(self):
            self.model = DQN()
            self.optimizer = optim.Adam(self.model.parameters(), lr=0.001)
            self.memory = deque(maxlen=1000)  # Replay buffer
            self.epsilon = 1.0  # Exploration rate
            self.gamma = 0.9  # Discount factor

        def select_action(self, state):
            # Epsilon-greedy: explore with probability epsilon, otherwise exploit.
            if random.random() < self.epsilon:
                return random.choice(ACTIONS)
            state = torch.tensor(state, dtype=torch.float32)
            with torch.no_grad():
                return ACTIONS[torch.argmax(self.model(state)).item()]

        def train(self):
            if len(self.memory) < 32:
                return
            batch = random.sample(self.memory, 32)
            for state, action, reward, next_state, done in batch:
                state = torch.tensor(state, dtype=torch.float32)
                next_state = torch.tensor(next_state, dtype=torch.float32)
                reward = torch.tensor(reward, dtype=torch.float32)
                # The target is a fixed label, so no gradient should flow through it.
                with torch.no_grad():
                    target = reward + (1 - done) * self.gamma * torch.max(self.model(next_state))
                output = self.model(state)[ACTIONS.index(action)]
                loss = (output - target).pow(2).mean()
                self.optimizer.zero_grad()
                loss.backward()
                self.optimizer.step()

        def update_epsilon(self):
            # Decay exploration by 1% per episode, but never below 0.1.
            self.epsilon = max(0.1, self.epsilon * 0.99)
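
It helps to know how quickly exploration winds down under this decay rule. The sketch below replays the same update (multiply by 0.99, clamp at 0.1) and counts the steps until the floor is reached; the function name episodes_until_floor is hypothetical.

```python
def episodes_until_floor(start=1.0, decay=0.99, floor=0.1):
    """Count update_epsilon-style steps until epsilon first reaches the floor."""
    epsilon, steps = start, 0
    while epsilon > floor:
        epsilon = max(floor, epsilon * decay)
        steps += 1
    return steps


print(episodes_until_floor())  # 230
```

With epsilon updated once per episode, exploration reaches its minimum after 230 episodes, and every later episode still takes a random action about 10% of the time.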

Step 6: Train the Model

    game = SnakeGame()
    agent = DQNAgent()
    episodes = 1000

    for episode in range(episodes):
        game.reset()  # Start every episode from a fresh board
        state = game.get_state()
        done = False
        total_reward = 0

        while not done:
            action = agent.select_action(state)
            reward, done = game.step(action)
            next_state = game.get_state()
            agent.memory.append((state, action, reward, next_state, done))
            agent.train()
            state = next_state
            total_reward += reward

        agent.update_epsilon()
        print(f"Episode {episode+1}, Score: {game.score}, "
              f"Total reward: {total_reward}, Epsilon: {agent.epsilon:.2f}")
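
The loop above stores each transition in agent.memory, a deque with a fixed maximum length. A minimal sketch of how such a buffer behaves is shown here, using a tiny capacity and placeholder transitions (the integers stand in for real states) so the eviction of old entries is easy to see.

```python
import random
from collections import deque

memory = deque(maxlen=3)  # tiny capacity so eviction is easy to see
for t in range(5):
    # Placeholder transition: (state, action, reward, next_state, done)
    memory.append((t, (10, 0), 1, t + 1, False))

# Only the three most recent transitions survive.
print([transition[0] for transition in memory])  # [2, 3, 4]

# Sampling a random batch breaks the correlation between consecutive steps.
batch = random.sample(list(memory), 2)
print(len(batch))  # 2
```

With maxlen=1000 and a batch size of 32, as in the agent, training draws only on the most recent 1000 moves.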

3. Results & Observations

- Initially, the snake moves randomly because epsilon starts at 1.0 and every action is exploratory.
- Over time, the AI learns to move toward food more efficiently.
- It learns to avoid hitting the walls and itself, improving survival time.
- The reward signal pushes the snake toward a better movement strategy.
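
Per-episode scores are noisy, so one way to check whether the agent is improving is to track a moving average of the score. The sketch below is an assumption about how you might do this, not part of the original training script; the function name moving_average and the window size are hypothetical, and the sample scores are made up purely to show the call.

```python
from collections import deque


def moving_average(scores, window=50):
    """Yield the mean of the last `window` scores after each episode."""
    recent = deque(maxlen=window)
    for score in scores:
        recent.append(score)
        yield sum(recent) / len(recent)


# Made-up scores purely to show the call; not results from this project.
print(list(moving_average([0, 10, 20, 30], window=2)))  # [0.0, 5.0, 15.0, 25.0]
```

Collecting game.score in a list during training and passing it to this function gives a smoother curve to plot.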

4. Future Enhancements

- Train using a larger neural network (Deep Q-Networks with CNNs).
- Use reinforcement learning libraries like Stable-Baselines3.
- Deploy AI-powered Snake as a web-based game.
