AI in a Nutshell: Understanding Deep Learning Models

Alexsandro Souza

Tech lead | Author | Instructor | Speaker | Opensource contributor | Agile coach | DevOps | AI enthusiast

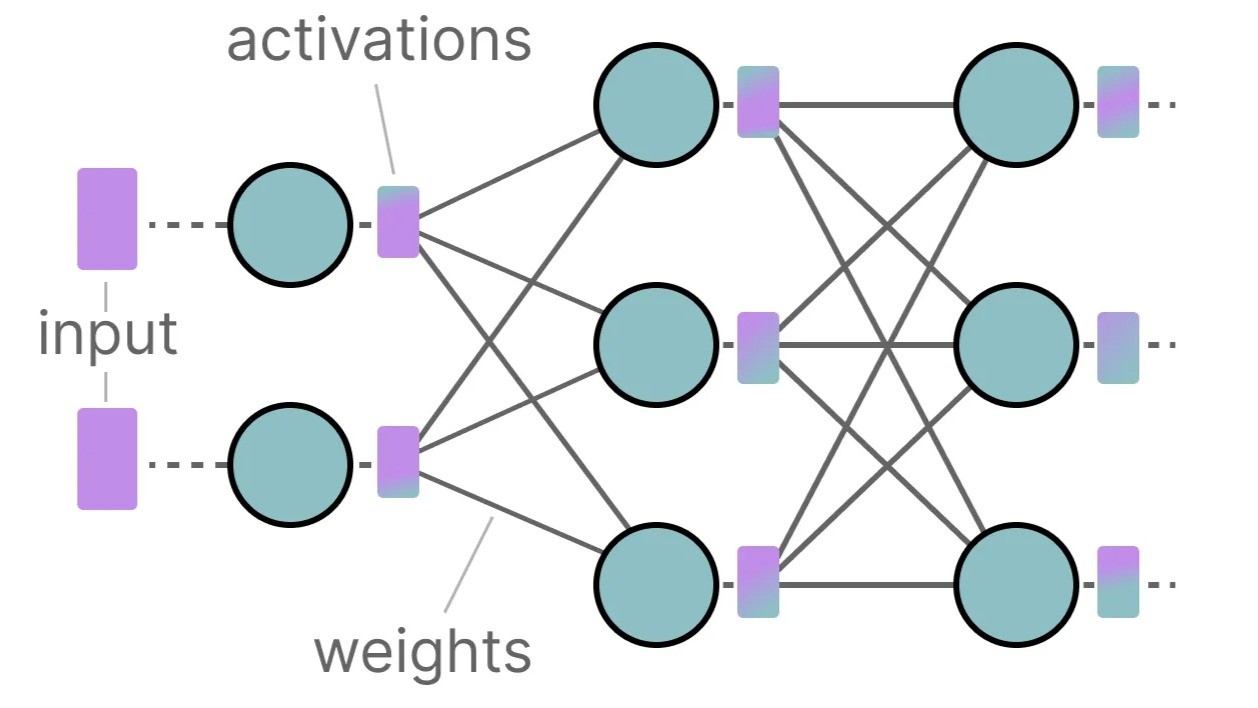

At its core, a deep learning model is simply a file filled with multi-dimensional arrays (tensors) of float values, known as weights. These values are set through a process called training: inputs are fed through a pipeline of layers (the neural network), the outputs at the end of the pipeline are compared to the expected results, and the weights are adjusted to minimise the difference. This process repeats until the outputs are satisfactory.
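To make that loop concrete, here is a minimal sketch in plain Python. It is not taken from the course: the "model" is just two floats (`w` and `b`), the data is a made-up line `y = 2x + 1`, and the learning rate and epoch count are arbitrary choices for this toy case.

```python
# Toy training loop: fit y = w*x + b to data generated from y = 2x + 1.
# All names and hyperparameters here are illustrative assumptions.

def train(inputs, targets, learning_rate=0.05, epochs=2000):
    w, b = 0.0, 0.0  # the whole "model": two float parameters
    n = len(inputs)
    for _ in range(epochs):
        # 1. Feed the inputs through the model.
        predictions = [w * x + b for x in inputs]
        # 2. Compare the outputs to the expected results.
        errors = [p - t for p, t in zip(predictions, targets)]
        # 3. Adjust the parameters to reduce the mean squared error.
        grad_w = 2 * sum(e * x for e, x in zip(errors, inputs)) / n
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

inputs = [0.0, 1.0, 2.0, 3.0, 4.0]
targets = [2 * x + 1 for x in inputs]

w, b = train(inputs, targets)
print(w, b)  # should end up close to 2 and 1
```

A real network has millions of such parameters and computes the gradients with backpropagation, but the feed, compare, adjust cycle is the same.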

If you’d like to dive deeper, check out my FREE course on Udemy, where I develop a vanilla neural network in JavaScript from scratch, no frameworks!

How are models trained?

There are several ways:

I cover one of them in my FREE course on Udemy, where I build and train models to play games using vanilla JavaScript, no frameworks!

Fine-Tuning Pre-Trained Models

You can also take a pre-trained model and adapt it to your own dataset by continuing to train it on that data, adjusting its existing weights rather than starting from scratch. I teach this technique in my CNN for Developers course, ideal for anyone looking to customise AI models for unique applications.
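One common way to fine-tune is to freeze most of the pre-trained weights and train only a new output layer. The sketch below illustrates that idea in plain Python and is not the method from the course. `PRETRAINED_WEIGHTS`, the tiny dataset, and the hyperparameters are all hypothetical stand-ins.

```python
# Fine-tuning sketch: frozen "pre-trained" features plus a trainable head.
# The weights and data below are made up for illustration.

PRETRAINED_WEIGHTS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # frozen layer

def extract_features(x):
    # Frozen layer with ReLU activation: features = max(0, W . x)
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_WEIGHTS]

def predict(head, x):
    return sum(h * f for h, f in zip(head, extract_features(x)))

def fine_tune_head(samples, labels, learning_rate=0.01, epochs=2000):
    head = [0.0] * len(PRETRAINED_WEIGHTS)  # new, trainable output weights
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            features = extract_features(x)
            error = predict(head, x) - target
            # Gradient step on the head only; PRETRAINED_WEIGHTS never change.
            head = [h - learning_rate * 2 * error * f
                    for h, f in zip(head, features)]
    return head

# New task-specific dataset: target = 3*x0 + x1
samples = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
labels = [3.0, 1.0, 4.0, 7.0]

head = fine_tune_head(samples, labels)
new_prediction = predict(head, [3.0, 2.0])
print(new_prediction)  # should be close to 11
```

Training only the head is cheap because the frozen layer's outputs never change. With real frameworks you would typically also unfreeze some of the later layers once the head has settled.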


Deploying Models

Once your model is trained, it is just a set of multi-dimensional matrices of float values that you can load into a program to perform inference. The file format usually depends on the framework that trained the model, so knowing that framework is critical for compatibility. This is where the ONNX format shines: it is an open format that enables interoperability between frameworks.
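To show what "load the weights and run inference" boils down to, here is a small sketch that saves a one-layer model to a file, loads it back, and runs a forward pass. The JSON layout, function names, and weight values are assumptions made for this example. Real formats such as ONNX also store the graph of operations, not just the numbers.

```python
import json
import os
import tempfile

def save_model(path, model):
    # Write the weights to disk; JSON stands in for a real model format.
    with open(path, "w") as f:
        json.dump(model, f)

def load_model(path):
    with open(path) as f:
        return json.load(f)

def predict(model, x):
    # One dense layer: output[i] = sum_j weights[i][j] * x[j] + bias[i]
    return [
        sum(w * xi for w, xi in zip(row, x)) + b
        for row, b in zip(model["weights"], model["bias"])
    ]

# Hypothetical "trained" parameters.
trained_model = {"weights": [[0.5, -1.0], [2.0, 0.25]], "bias": [0.0, 1.0]}

with tempfile.TemporaryDirectory() as tmp_dir:
    model_path = os.path.join(tmp_dir, "model.json")
    save_model(model_path, trained_model)
    loaded_model = load_model(model_path)

output = predict(loaded_model, [2.0, 4.0])
print(output)  # [-3.0, 6.0]
```

The program doing inference needs to know how the numbers are arranged and which operations to apply to them, which is exactly the compatibility problem a shared format addresses.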

Thanks to ONNX Runtime (developed by Microsoft), you can even run models in the browser! Its web build runs on WebAssembly, and tools like Transformers.js from Hugging Face build on top of it, so deploying deep learning models on the client side has never been easier. Check out some hands-on examples here

Scaling Models for Consumer Hardware

Many deep learning models are too large for consumer hardware, requiring GPUs with significant VRAM. Enter quantization: a technique that reduces model size by lowering the precision of the weights (e.g., from 32-bit floats to 8-bit integers, roughly a 4x reduction), usually at the cost of a small loss in accuracy.
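As a rough illustration, the sketch below applies symmetric 8-bit quantization to a handful of made-up weights using only the Python standard library. It is one simple scheme among several, and the function names and values are assumptions for this example. Production tools use more refined variants, such as per-channel scales and calibration data.

```python
from array import array

def quantize_int8(values):
    # Symmetric quantization: map [-max_abs, max_abs] onto [-127, 127].
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    # Recover approximate float values from the stored integers.
    return [q * scale for q in quantized]

weights = [0.82, -1.27, 0.003, 0.5]  # hypothetical float32 weights

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Bytes per weight: C float ('f', typically 4) versus signed 8-bit int ('b').
float_bytes = array("f", weights).itemsize
int8_bytes = array("b", quantized).itemsize

print(quantized, scale)
print(restored)
print(float_bytes, "bytes per weight ->", int8_bytes, "byte per weight")
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the precision traded for the smaller file.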

Looking Ahead to 2025

The pace of innovation in AI is astounding, and I believe 2025 will be another breakthrough year. Whether you’re just starting or are a seasoned developer, the time to learn and build in AI is now!

Happy learning!